Blog: PostgreSQL, p.3

SQL/JSON patches committed to PostgreSQL 15!

As PostgreSQL 15 moves to the feature freeze stage, we’d like to share the news about our team’s JSONB-related patches committed to version 15. This milestone marks years of work for many people, so we will name and thank them all in this blog post.

In previous articles we discussed how the system plans query execution and how it collects statistics to select the best plan. The following articles, starting with this one, will focus on what a plan actually is, what it consists of and how it is executed.

In this article, I will demonstrate how the planner calculates execution costs. I will also discuss access methods and how they affect these costs, and use the sequential scan method as an illustration. Lastly, I will talk about parallel execution in PostgreSQL, how it works and when to use it.

I will use several seemingly complicated math formulas later in the article. You don't have to memorize any of them to get to the bottom of how the planner works; they are merely there to show where I get my numbers from.
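As a taste of the kind of calculation involved, here is a minimal sketch of the sequential scan cost estimate as the PostgreSQL documentation describes it: pages read times `seq_page_cost`, plus rows scanned times `cpu_tuple_cost`, plus `cpu_operator_cost` per row for each filter operator. The three constants are the default values of those settings. The function name `seq_scan_cost` and the table size (358 pages, 10,000 rows) are assumptions chosen for illustration, and the real planner takes more details into account.

```python
# Default values of the corresponding PostgreSQL cost settings.
SEQ_PAGE_COST = 1.0         # seq_page_cost
CPU_TUPLE_COST = 0.01       # cpu_tuple_cost
CPU_OPERATOR_COST = 0.0025  # cpu_operator_cost


def seq_scan_cost(pages, rows, filter_operators=0):
    """Estimate the total cost of a sequential scan (simplified sketch).

    pages            -- number of table pages to read
    rows             -- number of rows to scan
    filter_operators -- operators evaluated per row in the WHERE clause
    """
    io_cost = pages * SEQ_PAGE_COST
    cpu_cost = rows * (CPU_TUPLE_COST + filter_operators * CPU_OPERATOR_COST)
    return io_cost + cpu_cost


if __name__ == "__main__":
    # Hypothetical table: 358 pages, 10,000 rows.
    print(f"no filter:  {seq_scan_cost(358, 10_000):.2f}")
    print(f"one filter: {seq_scan_cost(358, 10_000, filter_operators=1):.2f}")
```

Under these assumptions, a single comparison in the WHERE clause raises the estimate, because the operator is charged once for every row scanned even if most rows are filtered out.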

Pluggable storage engines

PostgreSQL's approach to storing data on disk will not be optimal for every possible type of load. Thankfully, you have options. Delivering on its promise of extensibility, PostgreSQL 12 and higher support custom table access methods (storage engines), although the system ships with only the stock one, heap:

...

Despite the ongoing tragic events, we continue the series. In the last article we reviewed the stages of query execution. Before we move on to plan node operations (data access and join methods), let's discuss the bread and butter of the cost optimizer: statistics.

As usual, I use the demo database for all my examples. You can download it and follow along.

You will see a lot of execution plans here today. We will discuss how the plans work in more detail in later articles. For now just pay attention to the numbers that you see in the first line of each plan, next to the word rows. These are row number estimates, or cardinality.

...

This time we decided to write a blog post on constraints, based on one of the most popular PGConf.Online talks, which explains how using constraints in PostgreSQL can help you gain peace of mind.

Hello! I'm kicking off another article series about the internals of PostgreSQL. This one will focus on query planning and execution mechanics.

This series will cover: query execution stages (this article), statistics, sequential and index scans, nested-loop, hash, and merge joins.

Many thanks to Alexander Meleshko for the translation of this series into English.

This article borrows from our course QPT Query Optimization (available in English soon), but focuses mostly on the internal mechanisms of query execution, leaving the optimization aspect aside. Please also note that this article series is written with PostgreSQL 14 in mind.

Simple query protocol

The fundamental purpose of the PostgreSQL client-server protocol is twofold: it sends SQL queries to the server, and it receives the entire execution result in response. The query received by the server for execution goes through several stages.

Parsing

First, the query text is parsed, so that the server understands exactly what needs to be done.

Lexer and parser. The lexer is responsible for recognizing lexemes in the query string (such as SQL keywords, string and numeric literals, etc.), and the parser makes sure that the resulting set of lexemes is grammatically valid. The parser and lexer are implemented using the standard tools Bison and Flex.

The parsed query is represented as an abstract syntax tree.
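To make the lexer's job concrete, here is a toy sketch in Python. It is not PostgreSQL's actual implementation, which is generated by Flex and Bison from a far larger set of rules. The keyword list, the token classes, and the `tokenize` function are all assumptions made for this illustration. The sketch covers only the lexing step, not the grammar check or the syntax tree.

```python
import re

# Hypothetical, heavily simplified keyword set for illustration only.
KEYWORDS = {"SELECT", "FROM", "WHERE", "AND", "OR"}

# One named group per token class; the first alternative that matches wins.
TOKEN_RE = re.compile(r"""
    (?P<space>\s+)
  | (?P<number>\d+(?:\.\d+)?)
  | (?P<string>'(?:[^']|'')*')
  | (?P<ident>[A-Za-z_][A-Za-z_0-9]*)
  | (?P<op><=|>=|<>|[=<>*,;().])
""", re.VERBOSE)


def tokenize(query):
    """Split a query string into (kind, text) lexemes."""
    tokens = []
    pos = 0
    while pos < len(query):
        match = TOKEN_RE.match(query, pos)
        if match is None:
            raise ValueError(
                f"unexpected character {query[pos]!r} at position {pos}"
            )
        pos = match.end()
        kind, text = match.lastgroup, match.group()
        if kind == "space":
            continue  # whitespace only separates lexemes
        if kind == "ident" and text.upper() in KEYWORDS:
            kind, text = "keyword", text.upper()
        tokens.append((kind, text))
    return tokens


if __name__ == "__main__":
    for token in tokenize("SELECT name FROM t WHERE id = 42"):
        print(token)
```

A parser would then take this list of lexemes, check it against the grammar, and build the tree.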

...

Postgres Professional's support team has shared a real-world case of auditing the performance of our customer's 180-TB database deployment.

This is a summary of Postgres Professional's more than six years of field experience providing HA/DR solutions to our customers. It covers both PostgreSQL and Postgres Pro databases.

While most of our customers are making progress on their journeys to the cloud, many of the HA/DR solutions they use from the on-premises world can be used in the cloud as well.

During PostgreSQL maintenance, resource-consuming queries occur inevitably. Therefore, it's vital for every Database Engineer, DBA, or Software Developer to detect and fix them as soon as possible. In this article, we'll list various extensions and monitoring tools used to collect information about queries and display them in a human-readable way.

Then we will cover typical query writing mistakes and explain how to correct them. We will also consider cases where extended statistics are used for more accurate row count estimates. Finally, you will see an example of how the PostgreSQL planner excludes redundant filter clauses.

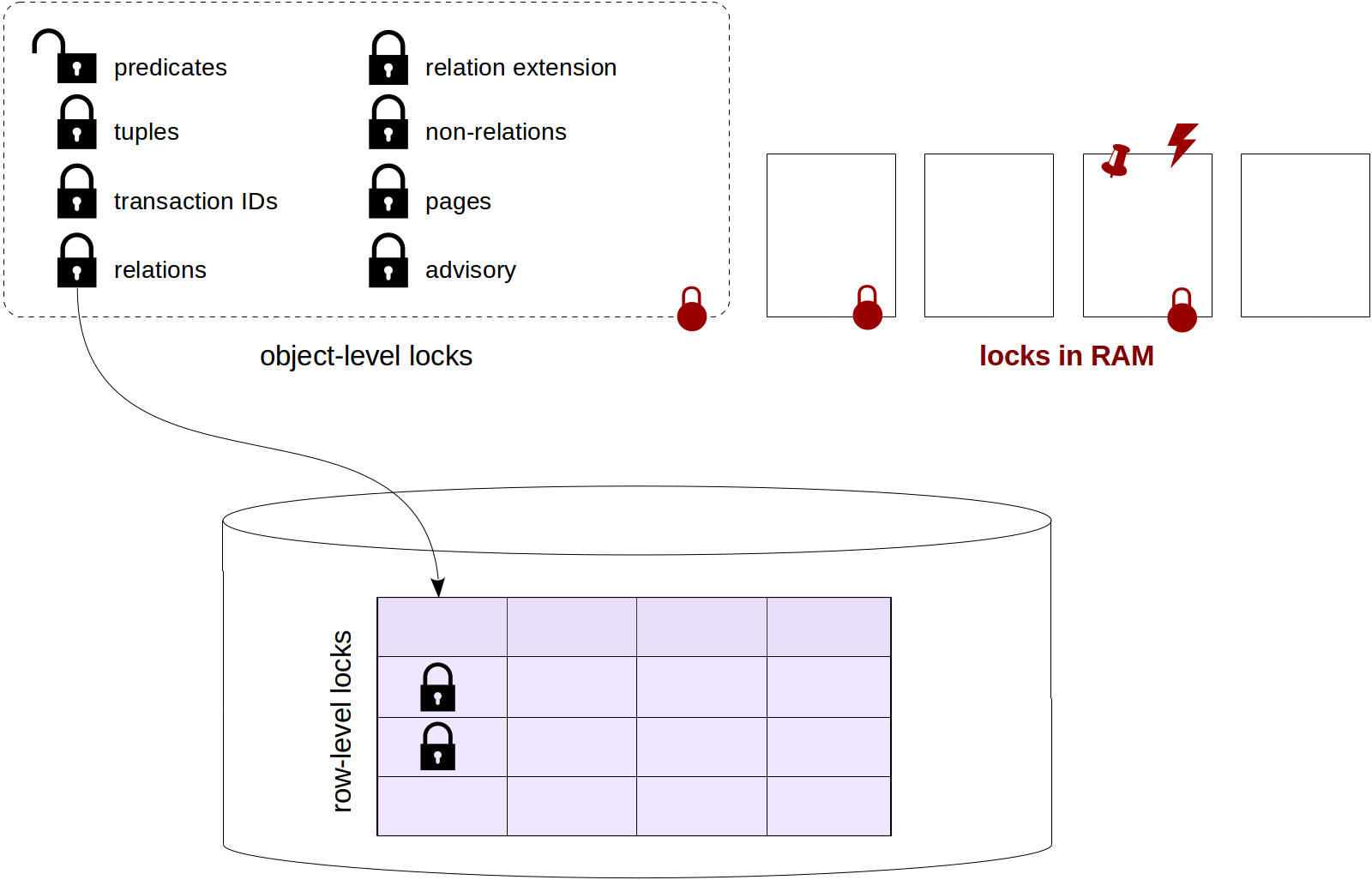

As a reminder, we've already talked about relation-level locks, row-level locks, locks on other objects (including predicate locks) and the interrelationships between different types of locks.

The following discussion of locks in RAM concludes this series of articles. We will consider spinlocks, lightweight locks and buffer pins, as well as event monitoring tools and sampling.

...

We've already discussed some object-level locks (specifically, relation-level locks), as well as row-level locks and their connection to object-level locks. We also explored wait queues, which are not always fair.

We have a hodgepodge this time. We'll start with deadlocks (actually, I planned to discuss them last time, but that article was already too long), then briefly review the remaining object-level locks and finally discuss predicate locks.

Last time, we discussed object-level locks and in particular relation-level locks. In this article, we will see how row-level locks are organized in PostgreSQL and how they are used together with object-level locks. We will also talk about wait queues and about those who jump the queue.

The previous two series of articles covered isolation and multiversion concurrency control and logging.

In this series, we will discuss locks.

This series will consist of four articles:

- Relation-level locks (this article).

- Row-level locks.

- Locks on other objects and predicate locks.

- Locks in RAM.

In this blog post, we use a real-world example to explain to those new to pg_profile why you might need this PostgreSQL extension in your daily work.

So far, we have become acquainted with the structure of the buffer cache and, in this context, concluded that if all the RAM contents are lost due to a failure, the write-ahead log (WAL) is required for recovery. The size of the necessary WAL files and the recovery time are kept limited by checkpoints, which are performed from time to time.

In the previous articles we already reviewed quite a few important settings that relate to WAL in one way or another. In this article (the last in this series) we will discuss the WAL setup issues that have not been addressed yet: WAL levels and their purpose, as well as the reliability and performance of write-ahead logging.

We already got acquainted with the structure of the buffer cache — one of the main objects of the shared memory — and concluded that to recover after failure when all the RAM contents get lost, the write-ahead log (WAL) must be maintained.

The problem we left unaddressed last time is that we do not know where to start replaying WAL records during recovery. To begin at the beginning, as the King from Lewis Carroll's Alice advised, is not an option: it is impossible to keep all the WAL records since the server start, because this would potentially mean both a huge volume of data and an equally huge recovery time. We need a point that gradually moves forward and from which we can start the recovery (and, accordingly, safely remove all the previous WAL records). This point is the checkpoint, to be discussed below.