Thread: Add 64-bit XIDs into PostgreSQL 15

For a long time, wraparound has been a really big pain for highly loaded systems. One source of performance degradation is the need to vacuum before every wraparound.

There have been several proposals to make XIDs 64-bit, such as [1], [2], [3] and [4], to name a few.

The approach [2] seems to have stalled in the CF since 2018, but meanwhile it has been used successfully in our Postgres Pro fork ever since. We have hundreds of customers using 64-bit XIDs. Dozens of instances run under a load that would require a wraparound every 1-5 days with 32-bit XIDs.

It really helps customers with a huge transaction load who, with 32-bit XIDs, could experience wraparounds every day. So I'd like to propose this modified approach to the CF.

PFA updated working patch v6 for PG15 development cycle.

It is based on version 5 of Alexander Korotkov's patch [5], with a few fixes and some refactoring, and has been rebased onto PG15.

Main changes:

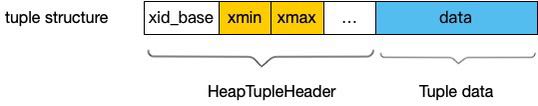

- Change TransactionId to 64bit
- Disk tuple format (HeapTupleHeader) is unchanged: xmin and xmax remain 32bit
-- 32bit xid is named ShortTransactionId now.
-- Exception: see "double xmax" format below.
- Heap page format is changed to contain xid and multixact base values; tuples' xmin and xmax are offsets from these bases.
-- xid_base and multi_base are stored as page special data. PageHeader remains unmodified.
-- If, after upgrade, a page has no free space for special data, its tuples are converted to the "double xmax" format: xmin becomes a virtual FrozenTransactionId, and xmax occupies the whole 64 bits. The page is converted to the new format when vacuum frees enough space.
- In-memory tuples (HeapTuple) were enriched with copies of xid_base and multi_base from the page.
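A minimal sketch of the base-plus-offset idea above, assuming (this is not taken from the patch) that the reserved XIDs 0-2 keep their current meaning and are stored verbatim rather than as offsets. `HeapPageSpecialSketch`, `tuple_xid_to_full()` and `full_xid_to_tuple()` are hypothetical names used only for illustration.

```c
#include <stdint.h>

typedef uint64_t TransactionId;      /* 64-bit in-memory XID */
typedef uint32_t ShortTransactionId; /* 32-bit on-disk xmin/xmax */

/* Assumed to keep PostgreSQL's existing special values. */
#define FrozenTransactionId      ((TransactionId) 2)
#define FirstNormalTransactionId ((TransactionId) 3)

/* Hypothetical layout of the per-page special area described above. */
typedef struct HeapPageSpecialSketch
{
    TransactionId xid_base;
    TransactionId multi_base;   /* MultiXactId base; unused in this sketch */
} HeapPageSpecialSketch;

/* Widen an on-disk 32-bit XID to 64 bits using the page's xid_base. */
static TransactionId
tuple_xid_to_full(const HeapPageSpecialSketch *special, ShortTransactionId xid)
{
    /* Assumption: special XIDs (0-2) are not stored as offsets. */
    if (xid < FirstNormalTransactionId)
        return (TransactionId) xid;
    return special->xid_base + (TransactionId) xid;
}

/*
 * Narrow a 64-bit XID back to a 32-bit offset. The caller must already
 * have checked that FirstNormalTransactionId <= xid - xid_base <= UINT32_MAX.
 */
static ShortTransactionId
full_xid_to_tuple(const HeapPageSpecialSketch *special, TransactionId xid)
{
    if (xid < FirstNormalTransactionId)
        return (ShortTransactionId) xid;
    return (ShortTransactionId) (xid - special->xid_base);
}
```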

ToDo:

- replace xid_base/multi_base in HeapTuple with precalculated 64bit xmin/xmax.
- attempt to split the patch into an "in-memory" part (add xmin/xmax to HeapTuple) and a "storage" format change.
- try to implement the storage part as a table access method.

Your opinions are very much welcome!

[1] https://www.postgresql.org/message-id/flat/1611355191319-0.post%40n3.nabble.com#c884ac33243ded0a47881137c6c96f6b

[2] https://www.postgresql.org/message-id/flat/DA1E65A4-7C5A-461D-B211-2AD5F9A6F2FD%40gmail.com

[3] https://www.postgresql.org/message-id/flat/CAPpHfduQ7KuCHvg3dHx%2B9Pwp_rNf705bjdRCrR_Cqiv_co4H9A%40mail.gmail.com

[4] https://www.postgresql.org/message-id/flat/51957591572599112%40myt5-3a82a06244de.qloud-c.yandex.net

[5] https://www.postgresql.org/message-id/CAPpHfdseWf0QLWMAhLgiyP4u%2B5WUondzdQ_Yd-eeF%3DDuj%3DVq0g%40mail.gmail.com


Hi Maxim,

I’m glad to see that you’re trying to carry the 64-bit XID work forward. I had not noticed that my earlier patch (also derived from Alexander Korotkov’s patch) was responded to back in September. Perhaps we can merge some of the code cleanup that it contained, such as using XID_FMT everywhere and creating a type for the kind of page returned by TransactionIdToPage() to make the code cleaner.

Is your patch functionally the same as the PostgresPro implementation? If so, I think it would be helpful for everyone’s understanding to read the PostgresPro documentation on VACUUM. See in particular the section “Forced shrinking pg_clog and pg_multixact”:

https://postgrespro.com/docs/enterprise/9.6/routine-vacuuming#vacuum-for-wraparound

best regards,

/Jim

Greetings,

* Maxim Orlov (orlovmg@gmail.com) wrote:
> PFA updated working patch v6 for PG15 development cycle.
> It is based on a patch by Alexander Korotkov version 5 [5] with a few
> fixes, refactoring and was rebased to PG15.

Just to confirm, as I only took a quick look: if a transaction in such a high-rate system lasts for more than a day (which certainly isn't completely out of the question; I've had week-long transactions before), and something tries to delete a tuple on a page that has tuples which can't be frozen yet due to the long-running transaction, is it just going to fail?

Not saying that I've got any idea how to fix that case offhand, and we don't really support such a thing today, as the server would just stop instead. But if I saw something in the release notes about PG moving to 64-bit transaction IDs, I'd probably be pretty surprised to discover that there's still a 32-bit limit that you have to watch out for, or your system will just start failing transactions. Perhaps that's a worthwhile tradeoff for being able to generally avoid having to vacuum and deal with transaction wraparound, but I have to wonder if there might be a better answer.

Of course, I also wonder how we're going to document and monitor for this potential issue, and what kind of corrective action will be needed (kill transactions older than a certain number of transactions..?).

Thanks,

Stephen
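To make the limit Stephen describes concrete, here is a rough sketch of how much XID headroom a single page has once it holds a tuple that cannot be frozen yet. It assumes that every normal XID on a page must be expressible as a 32-bit offset from one `xid_base`, with offsets 0-2 reserved for special XIDs; `page_xid_headroom()` is a hypothetical helper, not part of the patch, though something like it could feed the kind of monitoring Stephen asks about.

```c
#include <stdint.h>

typedef uint64_t TransactionId;

#define FirstNormalTransactionId ((TransactionId) 3)

/*
 * Largest distance between the oldest and newest normal XID on one page:
 * the oldest needs offset >= 3, the newest needs offset <= UINT32_MAX.
 */
#define MaxPageXidSpan ((TransactionId) UINT32_MAX - FirstNormalTransactionId)

/*
 * Number of XIDs, starting at next_xid, that could still be written to a
 * page whose oldest still-needed (unfreezable) XID is oldest_needed_xid.
 * Returns 0 once next_xid no longer fits.
 * Caller guarantees next_xid >= oldest_needed_xid >= FirstNormalTransactionId.
 */
static TransactionId
page_xid_headroom(TransactionId oldest_needed_xid, TransactionId next_xid)
{
    TransactionId span = next_xid - oldest_needed_xid;

    if (span > MaxPageXidSpan)
        return 0;
    /* next_xid itself plus every later XID up to the limit still fits. */
    return MaxPageXidSpan - span + 1;
}
```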


On 1/4/22, 2:35 PM, "Stephen Frost" <sfrost@snowman.net> wrote:

>> Not saying that I've got any idea how to fix that case offhand, and we
>> don't really support such a thing today as the server would just stop
>> instead, ...
>> Perhaps that's a
>> worthwhile tradeoff for being able to generally avoid having to vacuum
>> and deal with transaction wrap-around, but I have to wonder if there
>> might be a better answer.

For the target use cases that PostgreSQL is designed for, it's a very worthwhile tradeoff in my opinion. Such long-running transactions need to be killed.

Re: -- If after upgrade page has no free space for special data, tuples are
converted to "double xmax" format: xmin became virtual
FrozenTransactionId, xmax occupies the whole 64bit.
Page converted to new format when vacuum frees enough space.

I'm concerned about the maintainability impact of having 2 new on-disk page formats. It's already complex enough with XIDs and multixact-XIDs.

If the lack of space for the two epochs in the special data area is a problem only in an upgrade scenario, why not resolve the problem before completing the upgrade process, like a kind of post-process pg_repack operation that converts all "double xmax" pages to the "double-epoch" page format? i.e. maybe the "double xmax" representation is needed as an intermediate representation during upgrade, but after upgrade completes successfully there are no pages with the "double-xmax" representation. This would eliminate a whole class of coding errors and would make the code dealing with 64-bit XIDs simpler and more maintainable.

/Jim

On 2021/12/30 21:15, Maxim Orlov wrote:
> PFA updated working patch v6 for PG15 development cycle.
> It is based on a patch by Alexander Korotkov version 5 [5] with a few fixes, refactoring and was rebased to PG15.

Thanks a lot! I'm really happy to see this proposal again!!

Is there any documentation or README explaining this whole 64-bit XID mechanism?

Could you tell me what happens if a new tuple with an XID larger than xid_base + 0xFFFFFFFF is inserted into the page? Is such a new tuple not allowed to be inserted into that page? Or are xid_base and the xids of all existing tuples in the page increased? Also, what happens if one of those xids (of existing tuples) cannot be changed because the tuple can still be seen by a very-long-running transaction?

Regards,

-- 
Fujii Masao
Advanced Computing Technology Center
Research and Development Headquarters
NTT DATA CORPORATION
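One possible answer to the second question, sketched under stated assumptions: the page's `xid_base` is moved forward as far as the oldest normal XID on the page allows, and every stored offset is rewritten. `try_rebase_page()` is hypothetical and is not necessarily how the patch behaves; it only shows why such a rebase must fail when a long-running transaction still needs an old, unfrozen XID on the page. Offsets 0-2 are assumed to hold special XIDs and are left untouched.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint64_t TransactionId;
typedef uint32_t ShortTransactionId;

#define FirstNormalTransactionId ((TransactionId) 3)

/*
 * Hypothetical rebase: choose the largest base that keeps every existing
 * normal XID representable, then check whether new_xid also fits.
 * Returns false (leaving the page unchanged) when it does not.
 */
static bool
try_rebase_page(TransactionId *xid_base, ShortTransactionId *offsets,
                size_t n, TransactionId new_xid)
{
    TransactionId min_xid = new_xid;
    TransactionId new_base;

    /* Find the oldest normal XID currently stored on the page. */
    for (size_t i = 0; i < n; i++)
    {
        if (offsets[i] >= FirstNormalTransactionId)
        {
            TransactionId full = *xid_base + offsets[i];

            if (full < min_xid)
                min_xid = full;
        }
    }

    /* The oldest normal XID must keep an offset of at least 3. */
    new_base = min_xid - FirstNormalTransactionId;
    if (new_xid - new_base > UINT32_MAX)
        return false;           /* needs freezing first, or an error */

    /* new_base >= old base, so every rewritten offset only shrinks. */
    for (size_t i = 0; i < n; i++)
        if (offsets[i] >= FirstNormalTransactionId)
            offsets[i] = (ShortTransactionId) (*xid_base + offsets[i] - new_base);

    *xid_base = new_base;
    return true;
}
```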

On Thu, 30 Dec 2021 at 13:19, Maxim Orlov <orlovmg@gmail.com> wrote:
>
> Hi, hackers!
>
> Long time wraparound was a really big pain for highly loaded systems. One source of performance degradation is the need to vacuum before every wraparound.
> And there were several proposals to make XIDs 64-bit like [1], [2], [3] and [4] to name a few.

Very good to see this revived.

> PFA updated working patch v6 for PG15 development cycle.
> It is based on a patch by Alexander Korotkov version 5 [5] with a few fixes, refactoring and was rebased to PG15.
>
> Main changes:
> - Change TransactionId to 64bit

This sounds like a good route to me.

> - Disk tuple format (HeapTupleHeader) is unchanged: xmin and xmax
> remains 32bit
> -- 32bit xid is named ShortTransactionId now.
> -- Exception: see "double xmax" format below.
> - Heap page format is changed to contain xid and multixact base value,
> tuple's xmin and xmax are offsets from.
> -- xid_base and multi_base are stored as a page special data. PageHeader
> remains unmodified.
> -- If after upgrade page has no free space for special data, tuples are
> converted to "double xmax" format: xmin became virtual
> FrozenTransactionId, xmax occupies the whole 64bit.
> Page converted to new format when vacuum frees enough space.
> - In-memory tuples (HeapTuple) were enriched with copies of xid_base and
> multi_base from a page.

I think we need more of an Overview of Behavior than is available with this patch, perhaps in the form of a README, such as src/backend/access/heap/README.HOT.

Most people's comments are about the opportunities and problems this creates, and mine are definitely there too; i.e., please explain the user-visible behavior. Please explain the various new states that pages can be in and what their effects are.

My understanding is that this would be backwards compatible, so we can upgrade to it. Please confirm.

Thanks

-- 
Simon Riggs                http://www.EnterpriseDB.com/

On Tue, Jan 4, 2022 at 10:22:50PM +0000, Finnerty, Jim wrote:
> I'm concerned about the maintainability impact of having 2 new
> on-disk page formats. It's already complex enough with XIDs and
> multixact-XIDs.

Well, yes, we could do this, and it would avoid the complexity of having to support two XID representations, but we would need to accept that fast pg_upgrade would be impossible in such cases, since every page would need to be checked and potentially updated.

You might try to do this while the server is first started and running queries, but I think we found out from the online checkpoint patch that having the server in an intermediate state while running queries is very complex --- it might be simpler to just accept two XID formats all the time than enabling the server to run with two formats for a short period. My big point is that this needs more thought.

-- 
Bruce Momjian  <bruce@momjian.us>        https://momjian.us
EDB                                      https://enterprisedb.com

  If only the physical world exists, free will is an illusion.

On Thu, Dec 30, 2021 at 03:15:16PM +0300, Maxim Orlov wrote:
> -- If after upgrade page has no free space for special data, tuples are
> converted to "double xmax" format: xmin became virtual
> FrozenTransactionId, xmax occupies the whole 64bit.
> Page converted to new format when vacuum frees enough space.

I think it is a great idea to allow the 64-bit XID to span the 32-bit xmin and xmax fields when needed. It would be nice if we could get focus on this feature so we are sure it gets into PG 15. Can we add this patch incrementally so people can more easily analyze it?

-- 
Bruce Momjian  <bruce@momjian.us>        https://momjian.us
EDB                                      https://enterprisedb.com
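For readers unfamiliar with the "double xmax" layout Bruce refers to, here is a sketch of how a 64-bit xmax could be spread over the two 32-bit header fields while xmin reads as a virtual FrozenTransactionId. Which field holds the high half is an assumption, as are the names `TupleXidFieldsSketch` and `double_xmax_*()`; the patch may lay the bits out differently.

```c
#include <stdint.h>

typedef uint64_t TransactionId;
typedef uint32_t ShortTransactionId;

#define FrozenTransactionId ((TransactionId) 2)

/* Hypothetical stand-in for the two 32-bit XID fields of HeapTupleHeader. */
typedef struct TupleXidFieldsSketch
{
    ShortTransactionId t_xmin;  /* assumed: high 32 bits of xmax */
    ShortTransactionId t_xmax;  /* assumed: low 32 bits of xmax */
} TupleXidFieldsSketch;

/* In "double xmax" format, xmin is implicitly frozen. */
static TransactionId
double_xmax_get_xmin(const TupleXidFieldsSketch *t)
{
    (void) t;
    return FrozenTransactionId;
}

/* Reassemble the full 64-bit xmax from both fields. */
static TransactionId
double_xmax_get_xmax(const TupleXidFieldsSketch *t)
{
    return ((TransactionId) t->t_xmin << 32) | t->t_xmax;
}

/* Split a 64-bit xmax across both fields. */
static void
double_xmax_set_xmax(TupleXidFieldsSketch *t, TransactionId xmax)
{
    t->t_xmin = (ShortTransactionId) (xmax >> 32);
    t->t_xmax = (ShortTransactionId) xmax;
}
```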

On Tue, Jan 4, 2022 at 05:49:07PM +0000, Finnerty, Jim wrote:
> Is your patch functionally the same as the PostgresPro implementation? If
> so, I think it would be helpful for everyone’s understanding to read the
> PostgresPro documentation on VACUUM. See in particular section “Forced
> shrinking pg_clog and pg_multixact”
>
> https://postgrespro.com/docs/enterprise/9.6/routine-vacuuming#vacuum-for-wraparound

Good point --- we still need vacuum freeze. It would be good to understand how much value we get in allowing vacuum freeze to be done less often --- how big can pg_xact/pg_multixact get before they are problems?

-- 
Bruce Momjian  <bruce@momjian.us>        https://momjian.us
EDB                                      https://enterprisedb.com
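Some rough arithmetic for the pg_xact half of Bruce's question (pg_multixact is not covered): pg_xact stores 2 status bits per transaction, i.e. 4 transactions per byte, so its size grows linearly with the range of XIDs whose status must be retained. The helper name is hypothetical, and the figure ignores rounding up to whole SLRU pages and segment files.

```c
#include <stdint.h>

/* pg_xact keeps 2 status bits per transaction, i.e. 4 transactions per byte. */
#define CLOG_XACTS_PER_BYTE 4

/* Bytes of pg_xact needed for n_xacts consecutive XIDs (rounded up to a byte). */
static uint64_t
pg_xact_bytes(uint64_t n_xacts)
{
    return (n_xacts + CLOG_XACTS_PER_BYTE - 1) / CLOG_XACTS_PER_BYTE;
}
```

For example, retaining the status of 2^32 transactions takes 2^30 bytes (1 GiB) of pg_xact, and 10^9 transactions take 250,000,000 bytes.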

Hi!

On Thu, Jan 6, 2022 at 3:02 AM Bruce Momjian <bruce@momjian.us> wrote:
> I think it is a great idea to allow the 64-XID to span the 32-bit xmin
> and xmax fields when needed. It would be nice if we can get focus on
> this feature so we are sure it gets into PG 15. Can we add this patch
> incrementally so people can more easily analyze it?

I see at least the following major issues/questions in this patch.

1) The current code relies on the fact that TransactionId can be atomically read from/written to shared memory. On 32-bit systems with a 64-bit TransactionId, that's not true anymore. Therefore, the patch has concurrency issues on 32-bit systems. We need to carefully review these issues and provide a fallback for 32-bit systems. I suppose nobody is thinking about dropping 32-bit systems, right? Also, I wonder how critical the overhead on 32-bit systems is for us. They are going to become legacy, so the overhead isn't so critical, right?

2) With this patch we still need to freeze to cut SLRUs. This is especially problematic with multixacts, because systems heavily using row-level locks can consume an enormous amount of multixacts. That is especially problematic when we have 2x bigger multixacts. We probably can provide an alternative implementation for multixact vacuum, which doesn't require scanning all the heaps. That is a considerable amount of work, though. The clog is much smaller and we can cut it more rarely. Perhaps we could tolerate freezing to cut clog, couldn't we?

3) A 2x bigger in-memory representation of TransactionId has overhead. In particular, it could diminish the effect of recent advancements from Andres Freund. I'm not exactly sure we should/can do something about this, but I think it should at least be carefully analyzed.

4) SP-GiST indexes store TransactionId on pages. Could we tolerate dropping SP-GiST indexes on a major upgrade? Or do we have to invent something smarter?

5) The 32-bit limitation within a page, mentioned upthread by Stephen Frost, should also be carefully considered. Ideally, we should get rid of it, but I don't have particular ideas in this field for now. At least, we should make sure we did our best at error reporting and monitoring capabilities.

I think the realistic goal for the PG 15 development cycle would be agreement on a roadmap for all the items above (and probably some initial implementations).

------
Regards,
Alexander Korotkov
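Point 1 above can be illustrated with standard C11 atomics: on a 32-bit platform a plain 64-bit load or store may be split into two 32-bit accesses, so a concurrent reader could observe a torn value. Declaring the field `_Atomic` makes each access indivisible (the implementation may fall back to a lock where the hardware cannot do it natively). This is a generic sketch; the struct and field names are hypothetical, and in-tree code would more likely use PostgreSQL's own `pg_atomic_uint64` with `pg_atomic_read_u64()`/`pg_atomic_write_u64()`.

```c
#include <stdatomic.h>
#include <stdint.h>

typedef uint64_t TransactionId;

/* Hypothetical shared-memory struct holding a 64-bit XID. */
typedef struct SharedXidSketch
{
    /* _Atomic guarantees no torn reads/writes, even on 32-bit platforms. */
    _Atomic uint64_t latest_completed_xid;
} SharedXidSketch;

/* Acquire pairs with the release in the writer below. */
static TransactionId
read_latest_completed(SharedXidSketch *s)
{
    return atomic_load_explicit(&s->latest_completed_xid, memory_order_acquire);
}

static void
write_latest_completed(SharedXidSketch *s, TransactionId xid)
{
    atomic_store_explicit(&s->latest_completed_xid, xid, memory_order_release);
}
```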

On Thu, 30 Dec 2021 at 13:19, Maxim Orlov <orlovmg@gmail.com> wrote:
> Your opinions are very much welcome!

This is a review of the Int64 options patch, "v6-0001-Add-64-bit-GUCs-for-xids.patch"

Applies cleanly, with some fuzz, compiles cleanly and passes make check.
Patch eyeballs OK, no obvious defects.
Tested using the attached test, so seems to work correctly.
On review of docs, no additions or changes required.
Perhaps add something to README? If so, minor doc patch attached.

Otherwise, this sub-patch is READY FOR COMMITTER.

-- 
Simon Riggs                http://www.EnterpriseDB.com/


(Maxim) Re: -- If after upgrade page has no free space for special data, tuples are
converted to "double xmax" format: xmin became virtual
FrozenTransactionId, xmax occupies the whole 64bit.
Page converted to new format when vacuum frees enough space.

A better way would be to prepare the database for conversion to the 64-bit XID format before the upgrade, so that every page is guaranteed to have enough room for the two new epochs (E bits).

1. Enforce the rule that no INSERT or UPDATE to an existing page will leave less than E bits of free space on a heap page.

2. Run an online and restartable task, analogous to pg_repack, that rewrites and splits any page that has less than E bits of free space. This needs to be run on all non-temp tables in all schemas in all databases. DDL operations are not allowed on a target table while this operation runs, which is enforced by taking an ACCESS SHARE lock on each table while the process is running. To mitigate the effects of this restriction, the restartable task can be restricted to run only in certain hours. This could be implemented as a background maintenance task that runs for X hours starting at a certain time of day and then kicks itself off again in 24-X hours, logging its progress.

When this task completes, the database is ready for upgrade to 64-bit XIDs, and there is no possibility that any page has insufficient free space for the special data.
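Rule 1 of the proposal could look roughly like the check below. It assumes the reserve is 16 bytes (two 64-bit bases, `xid_base` and `multi_base`), models only the `pd_lower`/`pd_upper` fields of the page header, and expects the caller to pass an already MAXALIGN'ed tuple length; `insert_leaves_reserve()`, `PageSpaceSketch` and `XID64_SPECIAL_RESERVE` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint16_t LocationIndex;   /* same width as in PostgreSQL's bufpage.h */

/* Assumed size of the future special area: xid_base + multi_base. */
#define XID64_SPECIAL_RESERVE (2 * sizeof(uint64_t))

/* A line pointer (ItemIdData) occupies 4 bytes. */
#define ITEM_ID_SIZE 4

/* Minimal stand-in for the free-space bookkeeping in PageHeaderData. */
typedef struct PageSpaceSketch
{
    LocationIndex pd_lower;   /* end of the line pointer array */
    LocationIndex pd_upper;   /* start of tuple data; assumed >= pd_lower */
} PageSpaceSketch;

/* True if inserting a tuple of tuple_len bytes still leaves the reserve free. */
static bool
insert_leaves_reserve(const PageSpaceSketch *page, size_t tuple_len)
{
    size_t free_space = (size_t) (page->pd_upper - page->pd_lower);
    size_t needed = tuple_len + ITEM_ID_SIZE + XID64_SPECIAL_RESERVE;

    return free_space >= needed;
}
```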

Would you agree that this approach would completely eliminate the need for a "double xmax" representation?

/Jim

On Thu, 6 Jan 2022 at 13:15, Finnerty, Jim <jfinnert@amazon.com> wrote:
>
> (Maxim) Re: -- If after upgrade page has no free space for special data, tuples are
> converted to "double xmax" format: xmin became virtual
> FrozenTransactionId, xmax occupies the whole 64bit.
> Page converted to new format when vacuum frees enough space.
>
> A better way would be to prepare the database for conversion to the 64-bit XID format before the upgrade so that it ensures that every page has enough room for the two new epochs (E bits).

Most software has a one-stage upgrade model. What you propose would have us install 2 things, with a step in-between, which makes it harder to manage.

> Would you agree that this approach would completely eliminate the need for a "double xmax" representation?

I agree about the idea of scanning existing data blocks, but why not do this AFTER upgrade?

1. Upgrade, with the important aspect not-enabled-yet, but everything else working - all required software is delivered in one shot, with fast upgrade.
2. As each table is VACUUMed, we confirm/clean/groom data blocks so each table is individually confirmed as being ready. The pace that this happens at is under user control.
3. When all tables have been prepared, then restart to allow xid64 format usage.

-- 
Simon Riggs                http://www.EnterpriseDB.com/

Re: Most software has a one-stage upgrade model. What you propose would have us install 2 things, with a step in-between, which makes it harder to manage.

The intended benefit would be that the code doesn't need to handle the possibility of 2 different XID representations for the indefinite future.

I agree that VACUUM would be the preferred tool to make room for the special data area so that there is no need to install a separate tool, though, whether this work happens before or after the upgrade.

Re: 1. Upgrade, with important aspect not-enabled-yet, but everything else working - all required software is delivered in one shot, with fast upgrade

Let's clarify what happens during upgrade. What format are the pages in immediately after the upgrade?

Re: 2. As each table is VACUUMed, we confirm/clean/groom data blocks so each table is individually confirmed as being ready. The pace that this happens at is under user control.

What are VACUUM's new responsibilities in this phase? VACUUM needs a new task that confirms when a table no longer has any heap page that is not ready.

If upgrade put all the pages into either double-xmax or double-epoch representation, then VACUUM's responsibility could be to split the double-xmax pages into the double-epoch representation and to verify when no double-xmax pages remain.

Re: 3. When all tables have been prepared, then restart to allow xid64 format usage

Let's also clarify what happens at restart time.

If we were to do the upgrade before preparing space in advance, is there a way to ever remove the code that knows about the double-xmax XID representation?

On Tue, Jan 4, 2022 at 9:40 PM Fujii Masao <masao.fujii@oss.nttdata.com> wrote:
> Could you tell me what happens if new tuple with XID larger than xid_base + 0xFFFFFFFF is inserted into the page? Such new tuple is not allowed to be inserted into that page?

I fear that this patch will have many bugs along these lines. Example: Why is it okay that convert_page() may have to defragment a heap page, without holding a cleanup lock? That will subtly break code that holds a pin on the buffer, when a tuple slot contains a C pointer to a HeapTuple in shared memory (though only if we get unlucky).

Currently we are very permissive about what XID/backend can (or cannot) consume a specific piece of free space from a specific heap page in code like RelationGetBufferForTuple(). It would be very hard to enforce a policy like "your XID cannot insert onto this particular heap page" with the current FSM design. (I actually think that we should have a FSM that supports these requirements, but that's a big project -- a long term goal of mine [1].)

> Or xid_base and xids of all existing tuples in the page are increased? Also what happens if one of those xids (of existing tuples) cannot be changed because the tuple still can be seen by very-long-running transaction?

That doesn't work in the general case, I think. How could it, unless we truly had 64-bit XIDs in heap tuple headers? You can't necessarily freeze to fix the problem, because we can only freeze XIDs that 1.) committed, and 2.) are visible to every possible MVCC snapshot. (I think you were alluding to this problem yourself.)

I believe that a good solution to the problem that this patch tries to solve needs to be more ambitious. I think that we need to return to first principles, rather than extending what we have already. Currently, we store XIDs in tuple headers so that we can determine the tuple's visibility status, based on whether the XID committed (or aborted), and whether our snapshot sees the XID as "in the past" (in the case of an inserted tuple's xmin). You could say that XIDs from tuple headers exist so we can see differences *between* tuples. But these differences are typically not useful/interesting for very long. 32-bit XIDs are sometimes not wide enough, but usually they're "too wide": Why should we need to consider an old XID (e.g. do clog lookups) at all, barring extreme cases?

Why do we need to keep any kind of metadata about transactions around for a long time? Postgres has not supported time travel in 25 years!

If we eagerly cleaned up aborted transactions with a special kind of VACUUM (which would remove aborted XIDs), we could also maintain a structure that indicates if all of the XIDs on a heap page are known "all committed" implicitly (no dirtying the page, no hint bits, etc) -- something a little like the visibility map, that is mostly set implicitly (not during VACUUM). That doesn't fix the wraparound problem itself, of course. But it enables a design that imposes the same problem on the specific old snapshot instead -- something like a "snapshot too old" error is much better than a system-wide wraparound failure. That approach is definitely very hard, and also requires a smart FSM along the lines described in [1], but it seems like the best way forward.

As I pointed out already, freezing is bad because it imposes the requirement that everybody considers an affected XID committed and visible, which is brittle (e.g., old snapshots can cause wraparound failure). More generally, we rely too much on explicitly maintaining "absolute" metadata inline, when we should implicitly maintain "relative" metadata (that can be discarded quickly and without concern for old snapshots). We need to be more disciplined about what XIDs can modify what heap pages in the first place (in code like hio.c and the FSM) to make all this work.

[1] https://www.postgresql.org/message-id/CAH2-Wz%3DzEV4y_wxh-A_EvKxeAoCMdquYMHABEh_kZO1rk3a-gw%40mail.gmail.com

-- 
Peter Geoghegan

On Thu, Jan 6, 2022 at 4:15 PM Finnerty, Jim <jfinnert@amazon.com> wrote:
> A better way would be to prepare the database for conversion to the 64-bit XID format before the upgrade so that it ensures that every page has enough room for the two new epochs (E bits).

The "prepare" approach was the first one tried: https://github.com/postgrespro/pg_pageprep

But it turned out to be very difficult and unreliable. After investing many months into pg_pageprep, the "double xmax" approach proved very fast to implement, and reliable.

------
Regards,
Alexander Korotkov

On 2022/01/06 19:24, Simon Riggs wrote:
> On Thu, 30 Dec 2021 at 13:19, Maxim Orlov <orlovmg@gmail.com> wrote:
>
>> Your opinions are very much welcome!
>
> This is a review of the Int64 options patch,
> "v6-0001-Add-64-bit-GUCs-for-xids.patch"

Do we really need to support both int32 and int64 options? Isn't it enough to replace the existing int32 option with an int64 one? Or how about using a string-type option for very large numbers like 64-bit XIDs, as is done for recovery_target_xid?

Regards,

-- 
Fujii Masao

Re: clog page numbers, as returned by TransactionIdToPage

```diff
- int pageno = TransactionIdToPage(xid); /* get page of parent */
+ int64 pageno = TransactionIdToPage(xid); /* get page of parent */
...
- int pageno = TransactionIdToPage(subxids[0]);
+ int64 pageno = TransactionIdToPage(subxids[0]);
  int offset = 0;
  int i = 0;
...
- int nextpageno;
+ int64 nextpageno;
```

Etc.

In all those places where you are replacing int with int64 for the kind of values returned by TransactionIdToPage(), would you mind replacing the int64's with a type name, such as ClogPageNumber, for improved code maintainability?
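A sketch of the suggested type, mirroring the clog.c constants for the default 8 kB block size (2 status bits per transaction, so 32768 transactions per page). `ClogPageNumber` is the name proposed above; writing `TransactionIdToPage()` as an inline function rather than the existing macro is an assumption made here so that the return type is explicit.

```c
#include <stdint.h>

typedef uint64_t TransactionId;

/* Dedicated type for clog page numbers, as proposed above. */
typedef int64_t ClogPageNumber;

/* Mirrors clog.c for BLCKSZ = 8192: 2 status bits, i.e. 4 xacts per byte. */
#define BLCKSZ              8192
#define CLOG_XACTS_PER_BYTE 4
#define CLOG_XACTS_PER_PAGE (BLCKSZ * CLOG_XACTS_PER_BYTE)

static inline ClogPageNumber
TransactionIdToPage(TransactionId xid)
{
    return (ClogPageNumber) (xid / (TransactionId) CLOG_XACTS_PER_PAGE);
}
```

With 64-bit XIDs, page numbers stop fitting in a signed 32-bit `int` once XIDs reach 2^46, because 2^46 / 32768 = 2^31.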

Re: The "prepare" approach was the first tried.
https://github.com/postgrespro/pg_pageprep
But it appears to be very difficult and unreliable. After investing
many months into pg_pageprep, "double xmax" approach appears to be
very fast to implement and reliable.

I'd still like a plan to retire the "double xmax" representation eventually. Previously I suggested that this could be done as a post-process, before upgrade is complete, but that could potentially make upgrade very slow.

Another way to retire the "double xmax" representation eventually could be to disallow "double xmax" pages in subsequent major version upgrades (e.g. to PG16, if "double xmax" pages are introduced in PG15). This gives the luxury of time after a fast upgrade to convert all pages to contain the epochs, while still providing a path to more maintainable code in the future.

On Fri, Jan 07, 2022 at 03:53:51PM +0000, Finnerty, Jim wrote: > I'd still like a plan to retire the "double xmax" representation eventually. Previously I suggested that this could be done as a post-process, before upgrade is complete, but that could potentially make upgrade very slow. > > Another way to retire the "double xmax" representation eventually could be to disallow "double xmax" pages in subsequent major version upgrades (e.g. to PG16, if "double xmax" pages are introduced in PG15). This gives the luxury of time after a fast upgrade to convert all pages to contain the epochs, while still providing a path to more maintainable code in the future. Yes, but how are you planning to rewrite it? Is vacuum enough? I suppose it'd need FREEZE + DISABLE_PAGE_SKIPPING ? This would preclude upgrading "across" v15. Maybe that'd be okay, but it'd be a new and atypical restriction. How would you enforce that it'd been run on v15 before upgrading to pg16 ? You'd need to track whether vacuum had completed the necessary steps in pg15. I don't think it'd be okay to make pg_upgrade --check read every tuple. The "keeping track" part is what reminds me of the online checksum patch. It'd be ideal if there were a generic solution to this kind of task, or at least a "model" process to follow. -- Justin

On 07.01.22 06:18, Fujii Masao wrote: > On 2022/01/06 19:24, Simon Riggs wrote: >> On Thu, 30 Dec 2021 at 13:19, Maxim Orlov <orlovmg@gmail.com> wrote: >> >>> Your opinions are very much welcome! >> >> This is a review of the Int64 options patch, >> "v6-0001-Add-64-bit-GUCs-for-xids.patch" > > Do we really need to support both int32 and int64 options? Isn't it > enough to replace the existing int32 option with int64 one? I think that would create a lot of problems. You'd have to change every underlying int variable to int64, and then check whether that causes any issues where they are used (wrong printf format, assignments, overflows), and you'd have to check whether the existing limits are still appropriate. And extensions would be upset. This would be a big mess. > Or how about > using string-type option for very large number like 64-bit XID, like > it's done for recovery_target_xid? Seeing how many variables that contain transaction ID information actually exist, I think it could be worth introducing a new category as proposed. Otherwise, you'd have to write a lot of check and assign hooks. I do wonder whether signed vs. unsigned is handled correctly. Transaction IDs are unsigned, but all GUC handling is signed.
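Peter's signedness point can be made concrete with a minimal sketch, assuming a string-valued setting in the style of recovery_target_xid. The function name parse_xid64 is hypothetical (not from the patch or PostgreSQL). The pitfall it guards against: C's strtoull() accepts a leading minus sign and negates the result in the unsigned type, so "-1" would silently become the largest 64-bit XID unless it is rejected explicitly.

```c
#include <ctype.h>
#include <errno.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

/*
 * Hypothetical check routine for a string-valued setting holding a
 * 64-bit (unsigned) XID.  Returns false on malformed or out-of-range input.
 */
static bool
parse_xid64(const char *value, uint64_t *result)
{
    const char *p = value;
    char       *end;
    unsigned long long v;

    while (isspace((unsigned char) *p))
        p++;
    /* strtoull("-1") would wrap to ULLONG_MAX, so refuse a sign here. */
    if (*p == '-' || *p == '\0')
        return false;

    errno = 0;
    v = strtoull(p, &end, 10);
    if (errno == ERANGE || end == p)
        return false;           /* overflow, or no digits at all */

    while (isspace((unsigned char) *end))
        end++;
    if (*end != '\0')
        return false;           /* trailing garbage such as "12abc" */

    *result = (uint64_t) v;
    return true;
}
```

Conversely, a signed int64 setting cannot hold values at or above 2^63, so whichever representation is chosen, the accepted range has to be stated explicitly.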

On Fri, Jan 7, 2022 at 03:53:51PM +0000, Finnerty, Jim wrote: > Re: The "prepare" approach was the first tried. > https://github.com/postgrespro/pg_pageprep But it appears to be > very difficult and unreliable. After investing many months into > pg_pageprep, "double xmax" approach appears to be very fast to > implement and reliable. > > I'd still like a plan to retire the "double xmax" representation > eventually. Previously I suggested that this could be done as a > post-process, before upgrade is complete, but that could potentially > make upgrade very slow. > > Another way to retire the "double xmax" representation eventually > could be to disallow "double xmax" pages in subsequent major version > upgrades (e.g. to PG16, if "double xmax" pages are introduced in > PG15). This gives the luxury of time after a fast upgrade to convert > all pages to contain the epochs, while still providing a path to more > maintainable code in the future. This gets into the entire issue we have discussed in the past but never resolved --- how do we manage state changes in the Postgres file format while the server is running? pg_upgrade and pg_checksums avoid the problem by doing such changes while the server is down, and other file formats have avoided it by allowing perpetual reading of the old format. Any such non-perpetual changes while the server is running must deal with recording the start of the state change, the completion of it, communicating such state changes to all running backends in a synchronous manner, and possible restarting of the state change. -- Bruce Momjian <bruce@momjian.us> https://momjian.us EDB https://enterprisedb.com If only the physical world exists, free will is an illusion.

On Fri, 7 Jan 2022 at 16:09, Justin Pryzby <pryzby@telsasoft.com> wrote: > > On Fri, Jan 07, 2022 at 03:53:51PM +0000, Finnerty, Jim wrote: > > I'd still like a plan to retire the "double xmax" representation eventually. Previously I suggested that this could be done as a post-process, before upgrade is complete, but that could potentially make upgrade very slow. > > > > Another way to retire the "double xmax" representation eventually could be to disallow "double xmax" pages in subsequent major version upgrades (e.g. to PG16, if "double xmax" pages are introduced in PG15). This gives the luxury of time after a fast upgrade to convert all pages to contain the epochs, while still providing a path to more maintainable code in the future. > > Yes, but how are you planning to rewrite it? Is vacuum enough? Probably not, but VACUUM is the place to add such code. > I suppose it'd need FREEZE + DISABLE_PAGE_SKIPPING ? Yes > This would preclude upgrading "across" v15. Maybe that'd be okay, but it'd be > a new and atypical restriction. I don't see that restriction. Anyone upgrading from before PG15 would apply the transform. Just because we introduce a transform in PG15 doesn't mean we can't apply that transform in later releases as well, to allow say PG14 -> PG16. -- Simon Riggs http://www.EnterpriseDB.com/

On Thu, Jan 6, 2022 at 3:45 PM Peter Geoghegan <pg@bowt.ie> wrote: > On Tue, Jan 4, 2022 at 9:40 PM Fujii Masao <masao.fujii@oss.nttdata.com> wrote: > > Could you tell me what happens if new tuple with XID larger than xid_base + 0xFFFFFFFF is inserted into the page? Such new tuple is not allowed to be inserted into that page? > > I fear that this patch will have many bugs along these lines. Example: > Why is it okay that convert_page() may have to defragment a heap page, > without holding a cleanup lock? That will subtly break code that holds > a pin on the buffer, when a tuple slot contains a C pointer to a > HeapTuple in shared memory (though only if we get unlucky). Yeah. I think it's possible that some approach along the lines of what is proposed here can work, but quality of implementation is a big issue. This stuff is not easy to get right. Another thing that I'm wondering about is the "double xmax" representation. That requires some way of distinguishing when that representation is in use. I'd be curious to know where we found the bits for that -- the tuple header isn't exactly replete with extra bit space. Also, if we have an epoch of some sort that is included in new page headers but not old ones, that adds branches to code that might sometimes be quite hot. I don't know how much of a problem that is, but it seems worth worrying about. For all of that, I don't particularly agree with Jim Finnerty's idea that we ought to solve the problem by forcing sufficient space to exist in the page pre-upgrade. There are some advantages to such approaches, but they make it really hard to roll out changes. You have to roll out the enabling change first, wait until everyone is running a release that supports it, and only then release the technology that requires the additional page space. Since we don't put new features into back-branches -- and an on-disk format change would be a poor place to start -- that would make rolling something like this out take many years. 
I think we'll be much happier putting all the complexity in the new release. -- Robert Haas EDB: http://www.enterprisedb.com

On Wed, Jan 5, 2022 at 9:53 PM Alexander Korotkov <aekorotkov@gmail.com> wrote: > I see at least the following major issues/questions in this patch. > 1) Current code relies on the fact that TransactionId can be > atomically read from/written to shared memory. With 32-bit systems > and 64-bit TransactionId, that's not true anymore. Therefore, the > patch has concurrency issues on 32-bit systems. We need to carefully > review these issues and provide a fallback for 32-bit systems. I > suppose nobody is thinking about dropping off 32-bit systems, right? I think that's right. Not yet, anyway. > Also, I wonder how critical for us is the overhead for 32-bit systems. > They are going to become legacy, so overhead isn't so critical, right? Agreed. > 2) With this patch we still need to freeze to cut SLRUs. This is > especially problematic with Multixacts, because systems heavily using > row-level locks can consume an enormous amount of multixacts. That is > especially problematic when we have 2x bigger multixacts. We probably > can provide an alternative implementation for multixact vacuum, which > doesn't require scanning all the heaps. That is a pretty large amount of > work though. The clog is much smaller and we can cut it more rarely. > Perhaps, we could tolerate freezing to cut clog, couldn't we? Right. We can't let any of the SLRUs -- don't forget about stuff like pg_subtrans, which is a multiple of the size of clog -- grow without bound, even if it never forces a system shutdown. I'm not sure it's a good idea to think about introducing new freezing mechanisms at the same time as we're making other changes, though. Just removing the possibility of a wraparound shutdown without changing any of the rules about how and when we freeze would be a significant advancement. Other changes could be left for future work. > 3) 2x bigger in-memory representation of TransactionId have overheads. > In particular, it could mitigate the effect of recent advancements > from Andres Freund. 
I'm not exactly sure we should/can do something > with this. But I think this should be at least carefully analyzed. Seems fair. > 4) SP-GiST index stores TransactionId on pages. Could we tolerate > dropping SP-GiST indexes on a major upgrade? Or do we have to invent > something smarter? Probably depends on how much work it is. SP-GiST indexes are not mainstream, so I think we could at least consider breaking compatibility, but it doesn't seem like a thing to do lightly. > 5) 32-bit limitation within the page mentioned upthread by Stephen > Frost should be also carefully considered. Ideally, we should get rid > of it, but I don't have particular ideas in this field for now. At > least, we should make sure we did our best at error reporting and > monitoring capabilities. I don't think I understand the thinking here. As long as we retain the existing limit that the oldest running XID can't be more than 2 billion XIDs in the past, we can't ever need to throw an error. A new page modification that finds very old XIDs on the page can always escape trouble by pruning the page and freezing whatever old XIDs survive pruning. I would argue that it's smarter not to change the in-memory representation of XIDs to 64-bit in places like the ProcArray. As you mention in (4), that might hurt performance. But also, the benefit is minimal. Nobody is really sad that they can't keep transactions open forever. They are sad because the system has severe bloat and/or shuts down entirely. Some kind of change along these lines can fix the second of those problems, and that's progress. > I think the realistic goal for PG 15 development cycle would be > agreement on a roadmap for all the items above (and probably some > initial implementations). +1. Trying to rush something through to commit is just going to result in a bunch of bugs. We need to work through the issues carefully and take the time to do it well. -- Robert Haas EDB: http://www.enterprisedb.com

Greetings, * Robert Haas (robertmhaas@gmail.com) wrote: > On Wed, Jan 5, 2022 at 9:53 PM Alexander Korotkov <aekorotkov@gmail.com> wrote: > > 5) 32-bit limitation within the page mentioned upthread by Stephen > > Frost should be also carefully considered. Ideally, we should get rid > > of it, but I don't have particular ideas in this field for now. At > > least, we should make sure we did our best at error reporting and > > monitoring capabilities. > > I don't think I understand the thinking here. As long as we retain the > existing limit that the oldest running XID can't be more than 2 > billion XIDs in the past, we can't ever need to throw an error. A new > page modification that finds very old XIDs on the page can always > escape trouble by pruning the page and freezing whatever old XIDs > survive pruning. So we'll just fail such an old transaction? Or force a server restart? or..? What if we try to signal that transaction and it doesn't go away? > I would argue that it's smarter not to change the in-memory > representation of XIDs to 64-bit in places like the ProcArray. As you > mention in (4), that might hurt performance. But also, the benefit is > minimal. Nobody is really sad that they can't keep transactions open > forever. They are sad because the system has severe bloat and/or shuts > down entirely. Some kind of change along these lines can fix the > second of those problems, and that's progress. I brought up the concern that I did because I would be a bit sad if I couldn't have a transaction open for a day on a very high rate system of the type being discussed here. Would be fantastic if we had a solution to that issue, but I get that reducing the need to vacuum and such would be a really nice improvement even if we can't make long running transactions work. 
Then again, if we do actually change the in-memory bits- then maybe we could have such a long running transaction, provided it didn't try to make an update to a page with really old xids on it, which might be entirely reasonable in a lot of cases. I do still worry about how we explain what the limitation here is and how to avoid hitting it. Definitely seems like a 'gotcha' that people are going to complain about, though hopefully not as much of one as the current cases we hear about of vacuum falling behind and the system running out of xids. > > I think the realistic goal for PG 15 development cycle would be > > agreement on a roadmap for all the items above (and probably some > > initial implementations). > > +1. Trying to rush something through to commit is just going to result > in a bunch of bugs. We need to work through the issues carefully and > take the time to do it well. +1. Thanks, Stephen


I'd be curious to know where we found the bits for that -- the tuple header isn't exactly replete with extra bit space.

Perhaps we can merge some of the code cleanup that it contained, such as using XID_FMT everywhere and creating a type for the kind of page returned by TransactionIdToPage() to make the code cleaner.

Is your patch functionally the same as the PostgresPro implementation?

Is there any documentation or README explaining this whole 64-bit XID mechanism?

Could you tell me what happens if new tuple with XID larger than xid_base + 0xFFFFFFFF is inserted into the page? Such new tuple is not allowed to be inserted into that page? Or xid_base and xids of all existing tuples in the page are increased? Also what happens if one of those xids (of existing tuples) cannot be changed because the tuple still can be seen by very-long-running transaction?
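One way to frame this question, as a minimal sketch under stated assumptions: an XID can be stored on a page only if its offset from pd_xid_base fits in 32 bits. If it does not, the base has to move, which requires every XID still on the page to fit in the new window and every stored offset to be rewritten. The names xid_fits_page, choose_new_base and rebase_offset are hypothetical, and the sketch ignores special values such as FrozenTransactionId, which a real implementation must handle.

```c
#include <stdbool.h>
#include <stdint.h>

typedef uint64_t TransactionId;        /* 64-bit XID */
typedef uint32_t ShortTransactionId;   /* 32-bit on-disk offset */

/* Can xid be stored on a page with this base as a 32-bit offset? */
static bool
xid_fits_page(TransactionId xid, TransactionId xid_base)
{
    return xid >= xid_base && xid - xid_base <= UINT32_MAX;
}

/*
 * Hypothetical rebase: pick a new base so that both the oldest XID still
 * on the page and the new XID fit.  Fails if they are more than 2^32 - 1
 * apart, i.e. some old XIDs would first have to be frozen or pruned.
 */
static bool
choose_new_base(TransactionId min_page_xid, TransactionId new_xid,
                TransactionId *new_base)
{
    if (new_xid < min_page_xid || new_xid - min_page_xid > UINT32_MAX)
        return false;
    *new_base = min_page_xid;
    return true;
}

/* Rewrite one stored offset after the base moved (caller ensures fit). */
static ShortTransactionId
rebase_offset(ShortTransactionId off, TransactionId old_base,
              TransactionId new_base)
{
    return (ShortTransactionId) (old_base + off - new_base);
}
```

In this sketch, if choose_new_base() fails and a long-running transaction still needs to see the old XIDs, neither freezing nor pruning them is possible, which is the situation the last question asks about.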

On Sat, 8 Jan 2022 at 08:21, Maxim Orlov <orlovmg@gmail.com> wrote: >> >> Perhaps we can merge some of the code cleanup that it contained, such as using XID_FMT everywhere and creating a type for the kind of page returned by TransactionIdToPage() to make the code cleaner. > > > Agree, I think this is a good idea. Looks to me like the best next actions would be: 1. Submit a patch that uses XID_FMT everywhere, as a cosmetic change. This looks like it will reduce the main patch size considerably and make it much less scary. That can be cleaned up and committed while we discuss the main approach. 2. Write up the approach in a detailed README, so people can understand the proposal and assess if there are problems. A few short notes and a link back to old conversations isn't enough to allow wide review and give confidence on such a major patch. -- Simon Riggs http://www.EnterpriseDB.com/
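A minimal sketch of why the XID_FMT change is cosmetic but useful: if every message formats XIDs through one macro, widening TransactionId touches only that macro rather than every printf-style call. The definition below (using PRIu64 from <inttypes.h>) is an assumption, not necessarily how the patch defines XID_FMT, and format_xid_message is hypothetical.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>

typedef uint64_t TransactionId;

/* Assumed definition: the single place to change if the XID width changes. */
#define XID_FMT "%" PRIu64

/* Hypothetical message helper; string-literal concatenation splices XID_FMT in. */
static int
format_xid_message(char *buf, size_t len, TransactionId xid)
{
    return snprintf(buf, len, "transaction " XID_FMT " is in progress", xid);
}
```

Call sites keep the same shape regardless of width, e.g. `format_xid_message(buf, sizeof buf, xid)` yields "transaction 21000000000 is in progress" for a 64-bit XID.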

Re: patch that uses XID_FMT everywhere ... to make the main patch much smaller

That's exactly what my previous patch did, plus the patch to support 64-bit GUCs.

Maxim, maybe it's still a good idea to isolate those two patches and submit them separately first, to reduce the size of the rest of the patch?



We intend to do the following work on the patch soon:

1. Write a detailed README.

2. Split the patch into several pieces, including a separate part for XID_FMT. But if committers meanwhile choose to commit Jim's XID_FMT patch, we would also appreciate this and will rebase our patch accordingly.

2A. Probably refactor it to store precalculated XMIN/XMAX in the in-memory tuple representation instead of t_xid_base/t_multi_base.

2B. Split the in-memory part of the patch out as a separate patch.

3. Construct some variants for leaving the "double xmax" format as a temporary one just after upgrade, so that there is only one persistent on-disk format instead of two:

3A. By using an SQL function "vacuum doublexmax;"

OR

3B. By freeing space on all heap pages for pd_special before pg_upgrade.

OR

3C. By automatically repacking all "double xmax" pages after upgrade (with a priority specified by common vacuum-related GUCs).

4. Intentionally prohibit starting a new transaction whose XID differs by more than 2^32 from the oldest currently running one. This is meant to prompt the DBA to clean up defunct transactions without forcing it: they can wait if they consider these old transactions not defunct.

5. Investigate and add a solution for architectures without 64-bit atomic values:

5A. Provide 8-byte alignment for XIDs on systems where 64-bit atomics are available for 8-byte-aligned values.

5B. Wrap XID reads in PG atomic locks on the remaining 32-bit systems (they are expected to be rare).
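Items 5A and 5B can be sketched with C11 atomics. This is an illustration under assumptions, not the patch's code; PostgreSQL itself provides pg_atomic_uint64 in port/atomics.h, which a real patch would more likely use. The type and function names below are hypothetical.

```c
#include <stdatomic.h>
#include <stdint.h>

typedef uint64_t TransactionId;

/* 5A: an 8-byte-aligned atomic slot, for platforms with 64-bit atomics. */
typedef struct
{
    _Alignas(8) _Atomic uint64_t value;
} AtomicXid;

static TransactionId
read_xid_atomic(AtomicXid *x)
{
    return atomic_load_explicit(&x->value, memory_order_acquire);
}

static void
write_xid_atomic(AtomicXid *x, TransactionId xid)
{
    atomic_store_explicit(&x->value, xid, memory_order_release);
}

/* 5B: a plain 64-bit value guarded by a spinlock, for the remaining systems. */
typedef struct
{
    atomic_flag   lock;
    TransactionId value;
} LockedXid;

static TransactionId
read_xid_locked(LockedXid *x)
{
    TransactionId v;

    while (atomic_flag_test_and_set_explicit(&x->lock, memory_order_acquire))
        ;                       /* spin until the writer releases the lock */
    v = x->value;               /* no torn read: the writer holds the lock */
    atomic_flag_clear_explicit(&x->lock, memory_order_release);
    return v;
}

static void
write_xid_locked(LockedXid *x, TransactionId xid)
{
    while (atomic_flag_test_and_set_explicit(&x->lock, memory_order_acquire))
        ;
    x->value = xid;
    atomic_flag_clear_explicit(&x->lock, memory_order_release);
}
```

On targets without native lock-free 64-bit atomics, a C11 compiler may implement `_Atomic uint64_t` with an internal lock (for example via libatomic); `atomic_is_lock_free()` reports which case applies, which is one way to decide between the two variants.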


Hi, On Fri, Jan 14, 2022 at 11:38:46PM +0400, Pavel Borisov wrote: > > PFA patch with README for 64xid proposal. It is 0003 patch of the same v6, > that was proposed earlier [1]. > As always, I very much appreciate your ideas on this readme patch, on > overall 64xid patch [1], and on the roadmap on its improvement quoted above. Thanks for adding this documentation! Unfortunately, the cfbot can't apply a patchset split in multiple emails, so for now you only get coverage for this new readme file, as this is what's being tested on the CI: https://github.com/postgresql-cfbot/postgresql/commit/f8f12ce29344bc7c72665c334b5eb40cee22becd Could you send the full patchset each time you make a modification? For now I'm simply attaching 0001, 0002 and 0003 to make sure that the cfbot will pick all current patches on its next run.


I tried to pg_upgrade from a v13 instance like:

time make check -C src/bin/pg_upgrade oldsrc=`pwd`/13 oldbindir=`pwd`/13/tmp_install/usr/local/pgsql/bin

I had compiled and installed v13 into `pwd`/13.

First, test.sh failed, because of an option in initdb which doesn't exist in

the old version: -x 21000000000

I patched test.sh so the option is used only for the "new" version.

The tab_core_types table has an XID column, so pg_upgrade --check complains and

refuses to run. If I drop it, then pg_upgrade runs, but then fails like this:

|Files /home/pryzbyj/src/postgres/src/bin/pg_upgrade/tmp_check/new_xids.txt and /home/pryzbyj/src/postgres/src/bin/pg_upgrade/tmp_check/old_xids.txt differ

|See /home/pryzbyj/src/postgres/src/bin/pg_upgrade/tmp_check/xids.diff

|

|--- /home/pryzbyj/src/postgres/src/bin/pg_upgrade/tmp_check/new_xids.txt 2022-01-15 00:14:23.035294414 -0600

|+++ /home/pryzbyj/src/postgres/src/bin/pg_upgrade/tmp_check/old_xids.txt 2022-01-15 00:13:59.634945012 -0600

|@@ -1,5 +1,5 @@

| relfrozenxid | relminmxid

| --------------+------------

|- 3 | 3

|+ 15594 | 3

| (1 row)

Also, the patch needs to be rebased over Peter's vacuum changes.

Here's the changes I used for my test:

diff --git a/src/bin/pg_upgrade/test.sh b/src/bin/pg_upgrade/test.sh

index c6361e3c085..5eae42192b6 100644

--- a/src/bin/pg_upgrade/test.sh

+++ b/src/bin/pg_upgrade/test.sh

@@ -24,7 +24,8 @@ standard_initdb() {

# without increasing test runtime, run these tests with a custom setting.

# Also, specify "-A trust" explicitly to suppress initdb's warning.

# --allow-group-access and --wal-segsize have been added in v11.

- "$1" -N --wal-segsize 1 --allow-group-access -A trust -x 21000000000

+ "$@" -N --wal-segsize 1 --allow-group-access -A trust

+

if [ -n "$TEMP_CONFIG" -a -r "$TEMP_CONFIG" ]

then

cat "$TEMP_CONFIG" >> "$PGDATA/postgresql.conf"

@@ -237,7 +238,7 @@ fi

PGDATA="$BASE_PGDATA"

-standard_initdb 'initdb'

+standard_initdb 'initdb' -x 21000000000

pg_upgrade $PG_UPGRADE_OPTS --no-sync -d "${PGDATA}.old" -D "$PGDATA" -b "$oldbindir" -p "$PGPORT" -P "$PGPORT"

diff --git a/src/bin/pg_upgrade/upgrade_adapt.sql b/src/bin/pg_upgrade/upgrade_adapt.sql

index 27c4c7fd011..c5ce8bc95b2 100644

--- a/src/bin/pg_upgrade/upgrade_adapt.sql

+++ b/src/bin/pg_upgrade/upgrade_adapt.sql

@@ -89,3 +89,5 @@ DROP OPERATOR public.#%# (pg_catalog.int8, NONE);

DROP OPERATOR public.!=- (pg_catalog.int8, NONE);

DROP OPERATOR public.#@%# (pg_catalog.int8, NONE);

\endif

+

+DROP TABLE IF EXISTS tab_core_types;

On Wed, Jan 05, 2022 at 06:51:37PM -0500, Bruce Momjian wrote: > On Tue, Jan 4, 2022 at 10:22:50PM +0000, Finnerty, Jim wrote: [skipped] > > with the "double-xmax" representation. This would eliminate a whole > > class of coding errors and would make the code dealing with 64-bit > > XIDs simpler and more maintainable. > > Well, yes, we could do this, and it would avoid the complexity of having > to support two XID representations, but we would need to accept that > fast pg_upgrade would be impossible in such cases, since every page > would need to be checked and potentially updated. > > You might try to do this while the server is first started and running > queries, but I think we found out from the online checkpoint patch that > having the server in an intermediate state while running queries is very > complex --- it might be simpler to just accept two XID formats all the > time than enabling the server to run with two formats for a short > period. My big point is that this needs more thought. Probably, some table storage housekeeping would be wanted. Like a column in pg_class describing the current set of options of the table: checksums added, 64-bit xids added, type of 64-bit xids (probably some would want to add support for the pgpro upgrades), some set of defaults to not include a lot of them in all page headers -- like compressed xid/integer formats or extended pagesize. And separate tables that describe the transition state -- like when adding checksums, the desired state for the relation (checksums), and a set of ranges in the table files that are already transitioned/checked. That probably will not introduce too much slowdown, at least on reading, and will add the transition/upgrade mechanics. Weren't there already some discussions about such a feature in the mailing lists?


Hi Pavel, > Please feel free to discuss readme and your opinions on the current patch and proposed changes [1]. Just a quick question about this design choice: > On-disk tuple format remains unchanged. 32-bit t_xmin and t_xmax store the > lower parts of 64-bit XMIN and XMAX values. Each heap page has additional > 64-bit pd_xid_base and pd_multi_base which are common for all tuples on a page. > They are placed into a pd_special area - 16 bytes in the end of a heap page. > Actual XMIN/XMAX for a tuple are calculated upon reading a tuple from a page > as follows: > > XMIN = t_xmin + pd_xid_base. > XMAX = t_xmax + pd_xid_base/pd_multi_base. Did you consider using 4 bytes for pd_xid_base and another 4 bytes for (pd_xid_base/pd_multi_base)? This would allow calculating XMIN/XMAX as: XMIN = (t_min_extra_bits << 32) | t_xmin XMAX = (t_max_extra_bits << 32) | t_xmax ... and save 8 extra bytes in the pd_special area. Or maybe I'm missing some context here? -- Best regards, Aleksander Alekseev
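To compare the two layouts in the question, here is a minimal sketch of both decodings (function names are hypothetical). The trade-off it shows: with the high-bits scheme, all XIDs on a page must share the same upper 32 bits, so two XIDs only 32 apart cannot coexist if they straddle a multiple of 2^32, whereas a 64-bit base admits any window of 2^32 consecutive XIDs.

```c
#include <stdint.h>

typedef uint64_t TransactionId;
typedef uint32_t ShortTransactionId;

/* README scheme: 64-bit per-page base plus 32-bit per-tuple offset. */
static TransactionId
xid_from_base(uint64_t pd_xid_base, ShortTransactionId t_xmin)
{
    return pd_xid_base + t_xmin;
}

/* Alternative from the question: 32-bit per-page high half, OR'ed with t_xmin. */
static TransactionId
xid_from_high_bits(uint32_t extra_bits, ShortTransactionId t_xmin)
{
    return ((TransactionId) extra_bits << 32) | t_xmin;
}
```

For example, 0x1FFFFFFF0 and 0x200000010 can share base 0x1FFFFFFF0 (offsets 0 and 0x20), but under the high-bits scheme they need extra bits 1 and 2 respectively, which a single per-page field cannot hold.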


Hi,

On 2022-01-24 16:38:54 +0400, Pavel Borisov wrote:

> +64-bit Transaction ID's (XID)

> +=============================

> +

> +A limited number (N = 2^32) of XID's required to do vacuum freeze to prevent

> +wraparound every N/2 transactions. This causes performance degradation due

> +to the need to exclusively lock tables while being vacuumed. In each

> +wraparound cycle, SLRU buffers are also being cut.

What exclusive lock?

> +"Double XMAX" page format

> +---------------------------------

> +

> +At first read of a heap page after pg_upgrade from 32-bit XID PostgreSQL

> +version pd_special area with a size of 16 bytes should be added to a page.

> +Though a page may not have space for this. Then it can be converted to a

> +temporary format called "double XMAX".

>

> +All tuples after pg-upgrade would necessarily have xmin = FrozenTransactionId.

Why would a tuple after pg-upgrade necessarily have xmin =

FrozenTransactionId? A pg_upgrade doesn't scan the tables, so the pg_upgrade

itself doesn't do anything to xmins.

I guess you mean that the xmin cannot be needed anymore, because no older

transaction can be running?

> +In-memory tuple format

> +----------------------

> +

> +In-memory tuple representation consists of two parts:

> +- HeapTupleHeader from disk page (contains all heap tuple contents, not only

> +header)

> +- HeapTuple with additional in-memory fields

> +

> +HeapTuple for each tuple in memory stores t_xid_base/t_multi_base - a copies of

> +page's pd_xid_base/pd_multi_base. With tuple's 32-bit t_xmin and t_xmax from

> +HeapTupleHeader they are used to calculate actual 64-bit XMIN and XMAX:

> +

> +XMIN = t_xmin + t_xid_base. (3)

> +XMAX = t_xmax + t_xid_base/t_multi_base. (4)

What identifies a HeapTuple as having this additional data?

> +The downside of this is that we can not use tuple's XMIN and XMAX right away.

> +We often need to re-read t_xmin and t_xmax - which could actually be pointers

> +into a page in shared buffers and therefore they could be updated by any other

> +backend.

Ugh, that's not great.

> +Upgrade from 32-bit XID versions

> +--------------------------------

> +

> +pg_upgrade doesn't change pages format itself. It is done lazily after.

> +

> +1. At first heap page read, tuples on a page are repacked to free 16 bytes

> +at the end of a page, possibly freeing space from dead tuples.

That will cause a *massive* torrent of writes after an upgrade. Isn't this

practically making pg_upgrade useless? Imagine a huge cluster where most of

the pages are all-frozen, upgraded using link mode.

What happens if the first access happens on a replica?

What is the approach for dealing with multixact files? They have xids

embedded? And currently the SLRUs will break if you just let the offsets SLRU

grow without bounds.

> +void

> +convert_page(Relation rel, Page page, Buffer buf, BlockNumber blkno)

> +{

> + PageHeader hdr = (PageHeader) page;

> + GenericXLogState *state = NULL;

> + Page tmp_page = page;

> + uint16 checksum;

> +

> + if (!rel)

> + return;

> +

> + /* Verify checksum */

> + if (hdr->pd_checksum)

> + {

> + checksum = pg_checksum_page((char *) page, blkno);

> + if (checksum != hdr->pd_checksum)

> + ereport(ERROR,

> + (errcode(ERRCODE_INDEX_CORRUPTED),

> + errmsg("page verification failed, calculated checksum %u but expected %u",

> + checksum, hdr->pd_checksum)));

> + }

> +

> + /* Start xlog record */

> + if (!XactReadOnly && XLogIsNeeded() && RelationNeedsWAL(rel))

> + {

> + state = GenericXLogStart(rel);

> + tmp_page = GenericXLogRegisterBuffer(state, buf, GENERIC_XLOG_FULL_IMAGE);

> + }

> +

> + PageSetPageSizeAndVersion((hdr), PageGetPageSize(hdr),

> + PG_PAGE_LAYOUT_VERSION);

> +

> + if (was_32bit_xid(hdr))

> + {

> + switch (rel->rd_rel->relkind)

> + {

> + case 'r':

> + case 'p':

> + case 't':

> + case 'm':

> + convert_heap(rel, tmp_page, buf, blkno);

> + break;

> + case 'i':

> + /* no need to convert index */

> + case 'S':

> + /* no real need to convert sequences */

> + break;

> + default:

> + elog(ERROR,

> + "Conversion for relkind '%c' is not implemented",

> + rel->rd_rel->relkind);

> + }

> + }

> +

> + /*

> + * Mark buffer dirty unless this is a read-only transaction (e.g. query

> + * is running on hot standby instance)

> + */

> + if (!XactReadOnly)

> + {

> + /* Finish xlog record */

> + if (XLogIsNeeded() && RelationNeedsWAL(rel))

> + {

> + Assert(state != NULL);

> + GenericXLogFinish(state);

> + }

> +

> + MarkBufferDirty(buf);

> + }

> +

> + hdr = (PageHeader) page;

> + hdr->pd_checksum = pg_checksum_page((char *) page, blkno);

> +}

Wait. So you just modify the page without WAL logging or marking it dirty on a

standby? I fail to see how that can be correct.

Imagine the cluster is promoted, the page is dirtied, and we write it

out. You'll have written out a completely changed page, without any WAL

logging. There's plenty other scenarios.

Greetings,

Andres Freund

> +The downside of this is that we can not use tuple's XMIN and XMAX right away.

> +We often need to re-read t_xmin and t_xmax - which could actually be pointers

> +into a page in shared buffers and therefore they could be updated by any other

> +backend.

Ugh, that's not great.

What happens if the first access happens on a replica?

What is the approach for dealing with multixact files? They have xids

embedded? And currently the SLRUs will break if you just let the offsets SLRU

grow without bounds.


Hi hackers,

> In this part, I suppose you've found a definite bug. Thanks! There are a couple
> of ways it could be fixed:
>
> 1. If we enforce a checkpoint at replica promotion, then we force full-page writes after each page modification afterward.
>
> 2. Maybe it's worth using a BufferDesc bit to mark the page as converted to 64-bit XIDs but not yet written to disk? For example, one of the four bits of BUF_USAGECOUNT.
> BM_MAX_USAGE_COUNT = 5, so 3 bits will be enough to store it. This will change the in-memory page representation but will not need WAL-logging, which is impossible on a replica.
>
> What do you think about it?

I'm having difficulties merging and/or testing v8-0002-Add-64bit-xid.patch since I'm not 100% sure which commit this patch was targeting. Could you please submit a rebased patch and/or share your development branch on GitHub?

I agree with Bruce it would be great to deliver this in PG15. Please let me know if you believe it's unrealistic for any reason so I will focus on testing and reviewing other patches.

For now, I'm changing the status of the patch to "Waiting on Author".

--
Best regards,
Aleksander Alekseev

Hi! Here is the rebased version.

On Wed, Mar 02, 2022 at 06:43:11PM +0400, Pavel Borisov wrote:
> Hi hackers!
>
> Hi! Here is the rebased version.

The patch doesn't apply - I suppose the patch is relative to a forked postgres which already has other patches.

http://cfbot.cputube.org/pavel-borisov.html

Note also that I mentioned an issue with pg_upgrade. Handling that well is probably the most important part of the patch.

--
Justin

Hi,

On 2022-03-02 16:25:33 +0300, Aleksander Alekseev wrote:
> I agree with Bruce it would be great to deliver this in PG15.
> Please let me know if you believe it's unrealistic for any reason so I will
> focus on testing and reviewing other patches.

I don't see 15 as a realistic target for this patch. There are huge amounts of work left, and it has gotten very little review.

I encourage trying to break down the patch into smaller incrementally useful pieces. E.g. making all the SLRUs 64bit would be a substantial and independently committable piece.

Greetings,

Andres Freund

Hi hackers,

> The patch doesn't apply - I suppose the patch is relative to a forked postgres

No, the authors just used a slightly outdated `master` branch. I successfully applied it against 31d8d474 and then rebased it onto the latest master (62ce0c75). The new version is attached.

Not 100% sure if my rebase is correct since I didn't invest too much time into reviewing the code. But at least it passes `make installcheck` locally. Let's see what cfbot will tell us.

> I encourage trying to break down the patch into smaller incrementally useful
> pieces. E.g. making all the SLRUs 64bit would be a substantial and
> independently committable piece.

Completely agree. And the changes like:

+#if 0 /* XXX remove unit tests */

... suggest that the patch is pretty raw in its current state.

Pavel, Maxim, would you mind if I split the patchset, or would you like to do it yourself and/or maybe include more changes? I don't know how actively you are working on this.

--
Best regards,
Aleksander Alekseev


Hi Pavel!

On Thu, Mar 3, 2022 at 2:35 PM Pavel Borisov <pashkin.elfe@gmail.com> wrote:
> BTW messages with patches in this thread always invoke manual spam moderation and we need to wait for ~3 hours before the message with patch becomes visible in the hackers thread. Now when I've already answered Alexander's letter with v10 patch the very message (and a patch) I've answered is still not visible in the thread and to CFbot.
>
> Can something be done in hackers' moderation engine to make new versions of patches become visible hassle-free?

Is your email address subscribed to the pgsql-hackers mailing list?
AFAIK, moderation is only applied for non-subscribers.

------
Regards,
Alexander Korotkov


Greetings,

* Pavel Borisov (pashkin.elfe@gmail.com) wrote:
> > > BTW messages with patches in this thread always invoke manual spam
> > > moderation and we need to wait for ~3 hours before the message with patch
> > > becomes visible in the hackers thread. Now when I've already answered
> > > Alexander's letter with v10 patch the very message (and a patch) I've
> > > answered is still not visible in the thread and to CFbot.
> > >
> > > Can something be done in hackers' moderation engine to make new versions
> > > of patches become visible hassle-free?
> >
> > Is your email address subscribed to the pgsql-hackers mailing list?
> > AFAIK, moderation is only applied for non-subscribers.
>
> Yes, it is on the list. The problem is that the patch is over 1MB, so it
> strictly goes through moderation. And this has been unchanged for 2 months
> already.

Right, >1MB will be moderated, as will emails that are CC'd to multiple lists, and somehow this email thread ended up with two different addresses for -hackers, which isn't good.

> I was advised to use .gz, which I will do next time.

Better would be to break the patch down into reasonable and independent pieces for review and commit on separate threads as suggested previously and not to send huge patches to the list with the idea that someone is going to actually fully review and commit them. That's just not likely to end up working well anyway.

Thanks,

Stephen


Hi hackers,

> We've rebased patchset onto the current master. The result is almost the
> same as Alexander's v10 (it is a shame it is still in moderation and not
> visible in the thread). Anyway, this is the v11 patch. Reviews are very
> welcome.

Here is a rebased and slightly modified version of the patch.

I extracted the introduction of XID_FMT macro to a separate patch. Also, I noticed that sometimes PRIu64 was used to format XIDs instead. I changed it to XID_FMT for consistency. v12-0003 can be safely delivered in PG15.

> I encourage trying to break down the patch into smaller incrementally useful
> pieces. E.g. making all the SLRUs 64bit would be a substantial and
> independently committable piece.

I'm going to address this in follow-up emails.

--
Best regards,
Aleksander Alekseev


Hi hackers,

> Here is a rebased and slightly modified version of the patch.
>
> I extracted the introduction of XID_FMT macro to a separate patch. Also,
> I noticed that sometimes PRIu64 was used to format XIDs instead. I changed it
> to XID_FMT for consistency. v12-0003 can be safely delivered in PG15.
>
> > I encourage trying to break down the patch into smaller incrementally useful
> > pieces. E.g. making all the SLRUs 64bit would be a substantial and
> > independently committable piece.
>
> I'm going to address this in follow-up emails.

cfbot is not happy because several files are missing in v12. Here is a corrected and rebased version. I also removed the "#undef PRIu64" change from include/c.h since previously I replaced PRIu64 usage with XID_FMT.

--
Best regards,
Aleksander Alekseev


Hi hackers,

> > I extracted the introduction of XID_FMT macro to a separate patch. Also,
> > I noticed that sometimes PRIu64 was used to format XIDs instead. I changed it
> > to XID_FMT for consistency. v12-0003 can be safely delivered in PG15.
> [...]
> > > I encourage trying to break down the patch into smaller incrementally useful
> > > pieces. E.g. making all the SLRUs 64bit would be a substantial and
> > > independently committable piece.
> >
> > I'm going to address this in follow-up emails.
>
> cfbot is not happy because several files are missing in v12. Here is a
> corrected and rebased version. I also removed the "#undef PRIu64"
> change from include/c.h since previously I replaced PRIu64 usage with
> XID_FMT.

Here is a new version of the patchset. SLRU refactoring was moved to a separate patch. Both v14-0003 (XID_FMT macro) and v14-0004 (SLRU refactoring) can be delivered in PG15.

One thing I couldn't understand so far is why SLRU_PAGES_PER_SEGMENT should necessarily be increased in order to make 64-bit XIDs work. I kept the current value (32) in v14-0004 but changed it to 2048 in v14-0005 (where we start using 64-bit XIDs), as it was in the original patch. Is this change really required?

--
Best regards,
Aleksander Alekseev


Hi hackers,

> Here is a new version of the patchset. SLRU refactoring was moved to a
> separate patch. Both v14-0003 (XID_FMT macro) and v14-0004 (SLRU
> refactoring) can be delivered in PG15.

Here is a new version of the patchset. The changes compared to v14 are minimal. Most importantly, the GCC warning reported by cfbot was (hopefully) fixed. The patch order was also altered: v15-0001 and v15-0002 are targeting PG15 now, the rest are targeting PG16.

Also, for the record, I tested the patchset on a Raspberry Pi 3 Model B+ in the hope that it would discover some new flaws. To my disappointment, it didn't.

--
Best regards,
Aleksander Alekseev


Hi hackers,

> > Here is a new version of the patchset. SLRU refactoring was moved to a
> > separate patch. Both v14-0003 (XID_FMT macro) and v14-0004 (SLRU
> > refactoring) can be delivered in PG15.
>
> Here is a new version of the patchset. The changes compared to v14 are
> minimal. Most importantly, the GCC warning reported by cfbot was
> (hopefully) fixed. The patch order was also altered: v15-0001 and
> v15-0002 are targeting PG15 now, the rest are targeting PG16.
>
> Also, for the record, I tested the patchset on a Raspberry Pi 3 Model B+
> in the hope that it would discover some new flaws. To my
> disappointment, it didn't.

Here is the rebased version of the patchset. Also, I updated the commit messages for v16-0001 and v16-0002 to make them look more like the rest of the PostgreSQL commit messages. They include the link to this discussion now as well.

IMO v16-0001 and v16-0002 are in pretty good shape and are as much as we are going to deliver in PG15. I'm going to change the status of the CF entry to "Ready for Committer" sometime this week unless someone believes v16-0001 and/or v16-0002 shouldn't be merged.

--
Best regards,
Aleksander Alekseev

Hi hackers,

> IMO v16-0001 and v16-0002 are in pretty good shape and are as much as
> we are going to deliver in PG15. I'm going to change the status of the
> CF entry to "Ready for Committer" sometime this week unless someone
> believes v16-0001 and/or v16-0002 shouldn't be merged.

Sorry for the missing attachment. Here it is.

--
Best regards,
Aleksander Alekseev


Hi! Here is an updated version of the patch, based on Alexander's v16.