Thread: PCI:SSF - Safe SQL Query & operators filter

Hi team,

I am leading PCI:SSF audit on our payment switch platform product and having a issue where our QSA just came with multiple ways how to escalate privileges and get a shell to the remote host through our built-in Reporting system which runs on PostgreSQL (12-14).

- select * from pg_read_file('/etc/passwd' , 0 , 1000000); -> it's possible to display content of '/etc/passwd/' file

- select version(); -> Result of DBMS version request.

- COPY (SELECT 'nc -lvvp 2346 -e /bin/bash') TO '/tmp/pentestlab'; -> it's possible to create files on the filesystem on behalf of 'postgres' user.

The main problem comes from obvious - our application's PostgreSQL user needs to have an Superuser role as it manages most of its (dedicated) database (creates tables, drops those, manages views, triggers ... ).

This all matches description from documentation as follows:

<https://www.postgresql.org/docs/14/predefined-roles.html>

The pg_read_server_files, pg_write_server_files and pg_execute_server_program roles are intended to allow administrators to have trusted, but non-superuser, roles which are able to access files and run programs on the database server as the user the database runs as. As these roles are able to access any file on the server file system, they bypass all database-level permission checks when accessing files directly and they could be used to gain superuser-level access, therefore great care should be taken when granting these roles to users.

</>

So these - pg_read_server_files, pg_write_server_files and pg_execute_server_program roles are inherited from the Superuser and are also super sensitive for us, but our application user apparently still needs to be superuser for multiple reasons. Would there be any way to go around this?

1/ What we need is to create a postgresql user who would be pg_database_owner but not superuser ... but apparently we still need our user to be superuser to be able to carry on with some operations (picking one of more then few) ....

CREATE OR REPLACE LANGUAGE plpython3u;

HINT: Must be superuser to create this extension.

2/ Second option is to introduce some sort of limitation of allowed SQL queries and operators for our Reporting engine, but all that white-listing / blacklisting comes with its own problems where we are DB agnostic...

I am sorry for a long email, but any ideas/pointers will be greatly appreciated.

Thank you & Kind Regards,

Jan

-- Jan Bilek - CTO at EFTlab Pty Ltd.

> On Nov 7, 2022, at 17:24, Jan Bilek <jan.bilek@eftlab.com.au> wrote: > Would there be any way to go around this? The typical configuration is to not permit the PostgreSQL superuser to log in remotely. The database can be managed by adifferent, non-superuser role, including schema migrations. > CREATE OR REPLACE LANGUAGE plpython3u; > HINT: Must be superuser to create this extension. The reason only a superuser can create this extension is the "u" at the end of the name: It is an untrusted PL that can bypassPostgreSQL's role system. If anyone could create functions in it, anyone could bypass roles.

On 11/8/22 11:29, Christophe Pettus wrote: > >> On Nov 7, 2022, at 17:24, Jan Bilek <jan.bilek@eftlab.com.au> wrote: >> Would there be any way to go around this? > The typical configuration is to not permit the PostgreSQL superuser to log in remotely. The database can be managed bya different, non-superuser role, including schema migrations. Well, superuser (our App) is already logged in and as it is designed very much as an "appliance" it simply does that job - manages its database. There might be a way to explore whether we can use internally another (limited) user just for reporting queries on a different connection session. But I am getting (security related) headaches just thinking about it. > >> CREATE OR REPLACE LANGUAGE plpython3u; >> HINT: Must be superuser to create this extension. > The reason only a superuser can create this extension is the "u" at the end of the name: It is an untrusted PL that canbypass PostgreSQL's role system. If anyone could create functions in it, anyone could bypass roles. Yes, agreed. Any ideas? -- Jan Bilek - CTO at EFTlab Pty Ltd.

> On Nov 7, 2022, at 17:43, Jan Bilek <jan.bilek@eftlab.com.au> wrote: > > Well, superuser (our App) is already logged in and as it is designed > very much as an "appliance" it simply does that job - manages its > database. Well... don't do that. :) The problem is analogous to having root log into a Linux box and run application commands. Itworks, but it opens a security hole, as you've discovered. > Yes, agreed. Any ideas? In this particular case (creating an untrusted PL and functions therein), you'll need to use a PostgreSQL superuser. Thisis a separate operation from routine application use, though. (I'll note that having functions in an untrusted PL ina PCI-sensitive system is not a great idea, as you'll need to audit them very closely to make sure that they can't do anythinguntoward outside the role system.)

The main problem comes from obvious - our application's PostgreSQL user needs to have an Superuser role as it manages most of its (dedicated) database (creates tables, drops those, manages views, triggers ... ).

So these - pg_read_server_files, pg_write_server_files and pg_execute_server_program roles are inherited from the Superuser

and are also super sensitive for us, but our application user apparently still needs to be superuser for multiple reasons.

1/ What we need is to create a postgresql user who would be pg_database_owner but not superuser

... but apparently we still need our user to be superuser to be able to carry on with some operations (picking one of more then few) ....

CREATE OR REPLACE LANGUAGE plpython3u;

HINT: Must be superuser to create this extension.

2/ Second option is to introduce some sort of limitation of allowed SQL queries

On Mon, Nov 7, 2022 at 8:25 PM Jan Bilek <jan.bilek@eftlab.com.au> wrote:

> ...

> select * from pg_read_file('/etc/passwd' , 0 , 1000000); -> it's possible to display content of '/etc/passwd/' file

> select version(); -> Result of DBMS version request.

Input filtering may help in the interim, until you get the roles and

privileges sorted out.

> COPY (SELECT 'nc -lvvp 2346 -e /bin/bash') TO '/tmp/pentestlab'; -> it's possible to create files on the filesystem

onbehalf of 'postgres' user.

Prepared Statement or Parameterized Query here. In this case, don't

execute data as code.

Jeff

On Tue, 2022-11-08 at 01:24 +0000, Jan Bilek wrote: > I am leading PCI:SSF audit on our payment switch platform product and having a issue where our QSA > just came with multiple ways how to escalate privileges and get a shell to the remote host through > our built-in Reporting system which runs on PostgreSQL (12-14). > So these - pg_read_server_files, pg_write_server_files and pg_execute_server_program roles are > inherited from the Superuser and are also super sensitive for us, but our application user apparently > still needs to be superuser for multiple reasons. Would there be any way to go around this? Your application user doesn't need to be a superuser. > 1/ What we need is to create a postgresql user who would be pg_database_owner but not superuser ... > but apparently we still need our user to be superuser to be able to carry on with some operations > (picking one of more then few) .... > CREATE OR REPLACE LANGUAGE plpython3u; > HINT: Must be superuser to create this extension. Your application user doesn't need to create PL/Python functions. It only has to use them. If your application has to access the operating system, it has to do that in a controlled fashion: 1. through well-written SECURITY DEFINER functions that belong to a highly privileged user 2. through PL/Python functions or other untrusted language functions that are created by a superuser > 2/ Second option is to introduce some sort of limitation of allowed SQL queries and operators > for our Reporting engine, but all that white-listing / blacklisting comes with its own > problems where we are DB agnostic... That shouldn't be necessary. If your application user has restricted privileges as it should, it is automatically limited in the damage it can do. Yours, Laurenz Albe

On 11/8/22 11:50, Christophe Pettus wrote:

>

>> On Nov 7, 2022, at 17:43, Jan Bilek <jan.bilek@eftlab.com.au> wrote:

>>

>> Well, superuser (our App) is already logged in and as it is designed

>> very much as an "appliance" it simply does that job - manages its

>> database.

> Well... don't do that. :) The problem is analogous to having root log into a Linux box and run application commands.

It works, but it opens a security hole, as you've discovered.

>

>> Yes, agreed. Any ideas?

> In this particular case (creating an untrusted PL and functions therein), you'll need to use a PostgreSQL superuser.

Thisis a separate operation from routine application use, though. (I'll note that having functions in an untrusted PL

ina PCI-sensitive system is not a great idea, as you'll need to audit them very closely to make sure that they can't do

anythinguntoward outside the role system.)

Thank you David, Laurentz & Christophe,

All excellent inputs.

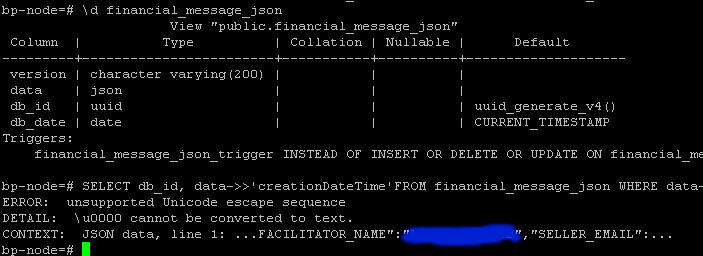

I've realized that our reporting feature is wrapped-bound in a

Transaction & Rollback. This actually came with an idea to Alter that

role as a part of transaction.

It works in an excellent way!

BEGIN TRANSACTION;

alter role CURRENT_USER with NOSUPERUSER;

select * from pg_read_file('/etc/passwd' , 0 , 1000000);

ROLLBACK TRANSACTION;

BEGIN

ALTER ROLE

ERROR: permission denied for function pg_read_file

ROLLBACK

bp-node=#

Even trying to break it seems to be difficult.

BEGIN TRANSACTION;

alter role CURRENT_USER with NOSUPERUSER;

alter role CURRENT_USER with SUPERUSER;

ROLLBACK TRANSACTION;

BEGIN

ALTER ROLE

ERROR: must be superuser to alter superuser roles or change superuser

attribute

ROLLBACK

I know it is not exactly what you suggested (and agreeing a lot with our

app user shouldn't be running as superuser), but as all other inputs

from our application come sanitized through bind and this is the only

way where user can send an explicit command in there - I think it should do!

Please let me know if you approve.

Thanks & Cheers,

Jan

--

Jan Bilek - CTO at EFTlab Pty Ltd.

On Tue, 2022-11-08 at 04:14 +0000, Jan Bilek wrote: > I know it is not exactly what you suggested (and agreeing a lot with our > app user shouldn't be running as superuser), but as all other inputs > from our application come sanitized through bind and this is the only > way where user can send an explicit command in there - I think it should do! > > Please let me know if you approve. I strongly disapprove, and any security audit you pass with such a setup is worthless. I repeat: the application does not need to connect with a superuser. I don't understand what you want to demonstrate with the code samples, or what you mean when you say that "the user can send an explicit command". Yours, Laurenz Albe

On 11/8/22 17:03, Laurenz Albe wrote: > On Tue, 2022-11-08 at 04:14 +0000, Jan Bilek wrote: > >> I know it is not exactly what you suggested (and agreeing a lot with our >> app user shouldn't be running as superuser), but as all other inputs >> from our application come sanitized through bind and this is the only >> way where user can send an explicit command in there - I think it should do! >> >> Please let me know if you approve. > I strongly disapprove, and any security audit you pass with such a setup > is worthless. I repeat: the application does not need to connect with > a superuser. > > I don't understand what you want to demonstrate with the code samples, or > what you mean when you say that "the user can send an explicit command". > > Yours, > Laurenz Albe Interesting. I agree that our app shouldn't need superuser, but that would mean that some ... you made me give it some serious though here. Installation itself is happening under elevated (root) rights. We are using the postgres account for moving in all what's needed (e.g. that plpython3u extension). Walking though our code for most of the day, I can't see why that superuser would be really needed. Those plpython3u functions are wrapped up under the hood already. I'm sending that in to check if our QA will find anything. Thanks for being stubborn about this! Cheers, Jan -- Jan Bilek - CTO at EFTlab Pty Ltd.

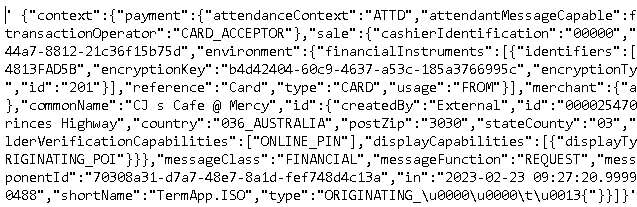

Our customer was able to sneak in an Unicode data into a column of a JSON Type and now that record fails on select.

Would you be able to suggest any way out of this? E.g. finding infringing row, updating its data ... ?

Thanks & Cheers,

Jan

-- Jan Bilek - CTO at EFTlab Pty Ltd.

Attachment

On Mon, 2023-02-27 at 06:28 +0000, Jan Bilek wrote:

> Our customer was able to sneak in an Unicode data into a column of a JSON Type and now that record fails on select.

> Would you be able to suggest any way out of this? E.g. finding infringing row, updating its data ... ?

I'd be curious to know how the customer managed to do that.

Perhaps there is a loophole in PostgreSQL that needs to be fixed.

First, find the table that contains the column.

Then you can try something like

DO

$$DECLARE

pkey bigint;

BEGIN

FOR pkey IN SELECT id FROM jsontab LOOP

BEGIN -- starts block with exception handler

PERFORM jsoncol -> 'creationDateTime'

FROM jsontab

WHERE id = pkey;

EXCEPTION

WHEN untranslatable_character THEN

RAISE NOTICE 'bad character in line with id = %', pkey;

END;

END LOOP;

END;$$;

Yours,

Laurenz Albe

--

Cybertec | https://www.cybertec-postgresql.com

> On 27/02/2023 13:13 CET Laurenz Albe <laurenz.albe@cybertec.at> wrote:

>

> I'd be curious to know how the customer managed to do that.

> Perhaps there is a loophole in PostgreSQL that needs to be fixed.

Probably via some data access layer and not directly via Postgres. It's easy

to reproduce with psycopg:

import psycopg

with psycopg.connect() as con:

con.execute('create temp table jsontab (jsoncol json)')

con.execute(

'insert into jsontab (jsoncol) values (%s)',

[psycopg.types.json.Json('\0')],

)

with con.execute('select jsoncol from jsontab') as cur:

print(cur.fetchall())

try:

with con.execute('select jsoncol::jsonb from jsontab') as cur:

pass

raise AssertionError("jsonb should fail")

except psycopg.errors.UntranslatableCharacter:

pass

Another reason to prefer jsonb over json to reject such inputs right away.

The documentation states that json does not validate inputs in constrast to

jsonb.

Of course the OP now has to deal with json. The data can be sanitized by

replacing all null character escape sequences:

update jsontab

set jsoncol = replace(jsoncol::text, '\u0000', '')::json

where strpos(jsoncol::text, '\u0000') > 0;

But the data access layer (or whatever got the json into the database) must be

fixed as well to reject or sanitize those inputs in the future.

--

Erik

Erik Wienhold <ewie@ewie.name> writes:

>> On 27/02/2023 13:13 CET Laurenz Albe <laurenz.albe@cybertec.at> wrote:

>> I'd be curious to know how the customer managed to do that.

>> Perhaps there is a loophole in PostgreSQL that needs to be fixed.

> Another reason to prefer jsonb over json to reject such inputs right away.

> The documentation states that json does not validate inputs in constrast to

> jsonb.

It's not that it doesn't validate, it's that the validation rules are

different. Per the manual [1]:

RFC 7159 permits JSON strings to contain Unicode escape sequences

denoted by \uXXXX. In the input function for the json type, Unicode

escapes are allowed regardless of the database encoding, and are

checked only for syntactic correctness (that is, that four hex digits

follow \u). However, the input function for jsonb is stricter: it

disallows Unicode escapes for characters that cannot be represented in

the database encoding. The jsonb type also rejects \u0000 (because

that cannot be represented in PostgreSQL's text type), and it insists

that any use of Unicode surrogate pairs to designate characters

outside the Unicode Basic Multilingual Plane be correct.

You can certainly quibble with our decisions here, but I think they

are reasonably consistent. json is for data that you'd like a syntax

check on (else you might as well store it as "text"), but no more than

a syntax check, because you're going to do the actual JSON processing

elsewhere and you don't want Postgres opining on what semi-standard

JSON constructs mean. If you're actually going to process the data

inside the database, jsonb is a better choice. The extra restrictions

in jsonb are to ensure that a string value represented in JSON can be

extracted into a valid string of our text datatype.

Storing data in json and then casting to jsonb on-the-fly seems like

about the worst possible combination of choices.

regards, tom lane

[1] https://www.postgresql.org/docs/current/datatype-json.html

On Mon, 2023-02-27 at 06:28 +0000, Jan Bilek wrote:Our customer was able to sneak in an Unicode data into a column of a JSON Type and now that record fails on select. Would you be able to suggest any way out of this? E.g. finding infringing row, updating its data ... ?I'd be curious to know how the customer managed to do that. Perhaps there is a loophole in PostgreSQL that needs to be fixed. First, find the table that contains the column. Then you can try something like DO $$DECLARE pkey bigint; BEGIN FOR pkey IN SELECT id FROM jsontab LOOP BEGIN -- starts block with exception handler PERFORM jsoncol -> 'creationDateTime' FROM jsontab WHERE id = pkey; EXCEPTION WHEN untranslatable_character THEN RAISE NOTICE 'bad character in line with id = %', pkey; END; END LOOP; END;$$; Yours, Laurenz Albe

Hi Laurenz,

Thank you and yes, that's how we managed to go through that - one of our devs found similar approach described here: https://stackoverflow.com/questions/31671634/handling-unicode-sequences-in-postgresql (see the null_if_invalid_string function there + credits to Hendrik) and we reapplied it. FYI with a bit of tinkering we've been able to retrieve following (corrupted) data:

(It comes from a PROD system so I don't have it in a text form for you to experiment on that.)

Anyway, your solution points in exactly same direction.

How'd customer managed to do that? Still no idea ... looks like they restarted TCP connection on our middle-ware, but any partial packets should be dropped as not matching TCP length header. Also records are deserialized on receive so that would fail. Still, that record had to make it somehow in the PostgreSQL. We are still looking.

Thanks & Cheers,

Jan

-- Jan Bilek - CTO at EFTlab Pty Ltd.

Attachment

On 2/28/23 01:17, Erik Wienhold wrote:

>> On 27/02/2023 13:13 CET Laurenz Albe <laurenz.albe@cybertec.at> wrote:

>>

>> I'd be curious to know how the customer managed to do that.

>> Perhaps there is a loophole in PostgreSQL that needs to be fixed.

> Probably via some data access layer and not directly via Postgres. It's easy

> to reproduce with psycopg:

>

> import psycopg

>

> with psycopg.connect() as con:

> con.execute('create temp table jsontab (jsoncol json)')

> con.execute(

> 'insert into jsontab (jsoncol) values (%s)',

> [psycopg.types.json.Json('\0')],

> )

>

> with con.execute('select jsoncol from jsontab') as cur:

> print(cur.fetchall())

>

> try:

> with con.execute('select jsoncol::jsonb from jsontab') as cur:

> pass

> raise AssertionError("jsonb should fail")

> except psycopg.errors.UntranslatableCharacter:

> pass

>

> Another reason to prefer jsonb over json to reject such inputs right away.

> The documentation states that json does not validate inputs in constrast to

> jsonb.

>

> Of course the OP now has to deal with json. The data can be sanitized by

> replacing all null character escape sequences:

>

> update jsontab

> set jsoncol = replace(jsoncol::text, '\u0000', '')::json

> where strpos(jsoncol::text, '\u0000') > 0;

>

> But the data access layer (or whatever got the json into the database) must be

> fixed as well to reject or sanitize those inputs in the future.

>

> --

> Erik

Hi Erik,

No, it didn't go through any foreign data access layer - it went in

straight through the Postgresql variable bind using pre-cached insert

statement using PostgreSQL 14.5, connected over UNIX sockets.

Strange thing happened afterwards - that locating that record was on &

off - I couldn't pin-point it in DB as it seemed to be failing on

multiple places ... until using that trick from Laurenz. Felt like a

PostgreSQL memory corruption, but system remained stable without any

complaints.

Thanks & Cheers,

Jan

--

Jan Bilek - CTO at EFTlab Pty Ltd.