On 2/27/23 22:13, Laurenz Albe wrote:

On Mon, 2023-02-27 at 06:28 +0000, Jan Bilek wrote:

Our customer was able to sneak in an Unicode data into a column of a JSON Type and now that record fails on select.

Would you be able to suggest any way out of this? E.g. finding infringing row, updating its data ... ?

I'd be curious to know how the customer managed to do that.

Perhaps there is a loophole in PostgreSQL that needs to be fixed.

First, find the table that contains the column.

Then you can try something like

DO $$DECLARE pkey bigint; BEGIN FOR pkey IN SELECT id FROM jsontab LOOP BEGIN -- starts block with exception handler PERFORM jsoncol -> 'creationDateTime' FROM jsontab WHERE id = pkey; EXCEPTION WHEN untranslatable_character THEN RAISE NOTICE 'bad character in line with id = %', pkey; END; END LOOP; END;$$;

Yours,

Laurenz Albe

Hi Laurenz,

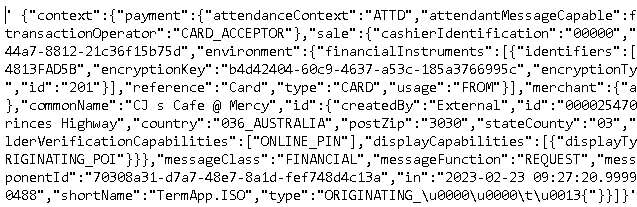

Thank you and yes, that's how we managed to go through that - one of our devs found similar approach described here: https://stackoverflow.com/questions/31671634/handling-unicode-sequences-in-postgresql (see the null_if_invalid_string function there + credits to Hendrik) and we reapplied it. FYI with a bit of tinkering we've been able to retrieve following (corrupted) data:

(It comes from a PROD system so I don't have it in a text form for you to experiment on that.)

Anyway, your solution points in exactly same direction.

How'd customer managed to do that? Still no idea ... looks like they restarted TCP connection on our middle-ware, but any partial packets should be dropped as not matching TCP length header. Also records are deserialized on receive so that would fail. Still, that record had to make it somehow in the PostgreSQL. We are still looking.

Thanks & Cheers,

Jan

--

Jan Bilek - CTO at EFTlab Pty Ltd.