replication primary writting infinite number of WAL files - Mailing list pgsql-general

| From | Les |

|---|---|

| Subject | replication primary writting infinite number of WAL files |

| Date | |

| Msg-id | CAKXe9UDZKGevV6t+Y0adUgy2-k-1gMdRUi4Baz=4BMKX1Oig_A@mail.gmail.com Whole thread Raw |

| Responses |

Re: replication primary writting infinite number of WAL files

Re: replication primary writting infinite number of WAL files Re: replication primary writting infinite number of WAL files |

| List | pgsql-general |

Hello,

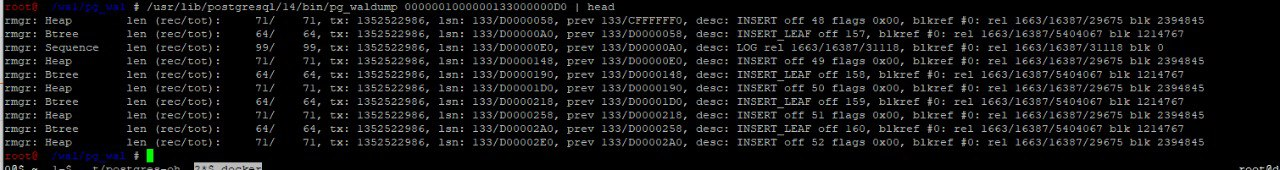

Yesterday we were faced with a problem that we do not understand and cannot solve ourselves. We have a postgresql cluster using repmgr, which has three members. The version of all instances (currently) is "PostgreSQL 14.5 (Debian 14.5-2.pgdg110+2) on x86_64-pc-linux-gnu, compiled by gcc (Debian 10.2.1-6) 10.2.1 20210110, 64-bit.". Under normal circumstances, the number of write operations is relatively low, with an average of 4-5 MB/sec total write speed on the disk associated with the data directory. Yesterday, the primary server suddenly started writing to the pg_wal directory at a crazy pace, 1.5GB/sec, but sometimes it went up to over 3GB/sec. The pg_wal started fattening up and didn't stop until it ran out of disk space. It happened so fast that we didn't have time to react. We then stopped all applications (postgresql clients) because we thought one of them was causing the problem. We then increased the disk size and restarted the primary. The primary finished recovery and started working "normally" for a little while, but then started filling up the disk again. In the meantime, we found out that the WAL files to one of the standbys were not going through. Originally, the (streaming replication) connection was lost due to a network error, later it slowed down and the standby could not keep up with the primary. We then unregistered and disconnected this standby and deleted its replication slot. This caused the primary to delete the unnecessary files from pg_wal and start working normally again. Upon further analysis of the database, we found that we did not see any mass data changes in any of the tables. The only exception is a sequence value that was moved millions of steps within a single minute. Of particular interest is that the sequence value did not increase afterwards; but even though we restarted the primary (without connecting normal clients), it continued to write endless WAL files until we deleted that standby replication slot. It is also interesting that there were two standbys, and dropping one of them "solved" the problem. The other standby could keep up with the writes, and it was also writing out 1.5GB/sec to its disk. Since we needed the third standby, we created a new one (using a fresh pg_basebackup) after completely deleting the old one. This new instance worked for about 12 hours. This morning, the error occurred again, in the same form. Based on our previous experience, we immediately deleted the standby and its replication slot, and the problem resolved itself (except that the standby had to be deleted again). Without rebooting or restarting anything else, the problem went away. I managed to save small part of the pg_wal before deleting the slot. We looked into this, we saw something like this:

We looked at the PostgreSQL release history, and we see some bug fixes in version 14.7 that might have something to do with this:

https://www.postgresql.org/docs/release/14.7/

> Ignore invalidated logical-replication slots while determining oldest catalog xmin (Sirisha Chamarthi) A replication slot could prevent cleanup of dead tuples in the system catalogs even after it becomes invalidated due to exceeding max_slot_wal_keep_size. Thus, failure of a replication consumer could lead to indefinitely-large catalog bloat.

This might not be the problem after all. We don't have enough knowledge and experience to determine the cause of the problem. It is a production system and we should somehow ensure that this won't happen in the future. Also, we would like to add another standby, but we do not want to do this right now, because it seems to be causing the problem (or at least it is strongly correlated).

Thank you,

Laszlo

Attachment

pgsql-general by date: