Fwd: Very slow query performance when using CTE - Mailing list pgsql-performance

| From | Chris Joysn |

|---|---|

| Subject | Fwd: Very slow query performance when using CTE |

| Date | |

| Msg-id | CAKRYhW3K1TbFxYrVvmGfun3cB0R1KCZytZmjk_hY8nFAxVRH=w@mail.gmail.com Whole thread Raw |

| In response to | Very slow query performance when using CTE (Chris Joysn <joysn71@gmail.com>) |

| Responses |

Re: Very slow query performance when using CTE

|

| List | pgsql-performance |

I tried to create multi variate statistics for the table in question:

CREATE STATISTICS st_simrun_component_metadata (dependencies) ON sim_run_id, key FROM sim_run_component_metadata;

ANALYZE sim_run_component_metadata;

When I run this query, no statistics are returned:

SELECT m.* FROM pg_statistic_ext join pg_statistic_ext_data on (oid = stxoid),

pg_mcv_list_items(stxdmcv) m WHERE stxname = 'st_simrun_component_metadata';

Is there something I might have missed?

What I do not understand is:

The fetch from the table is rather fast. some milliseconds. But a subsequent sort operations takes very long time, for the amount of records fetched.

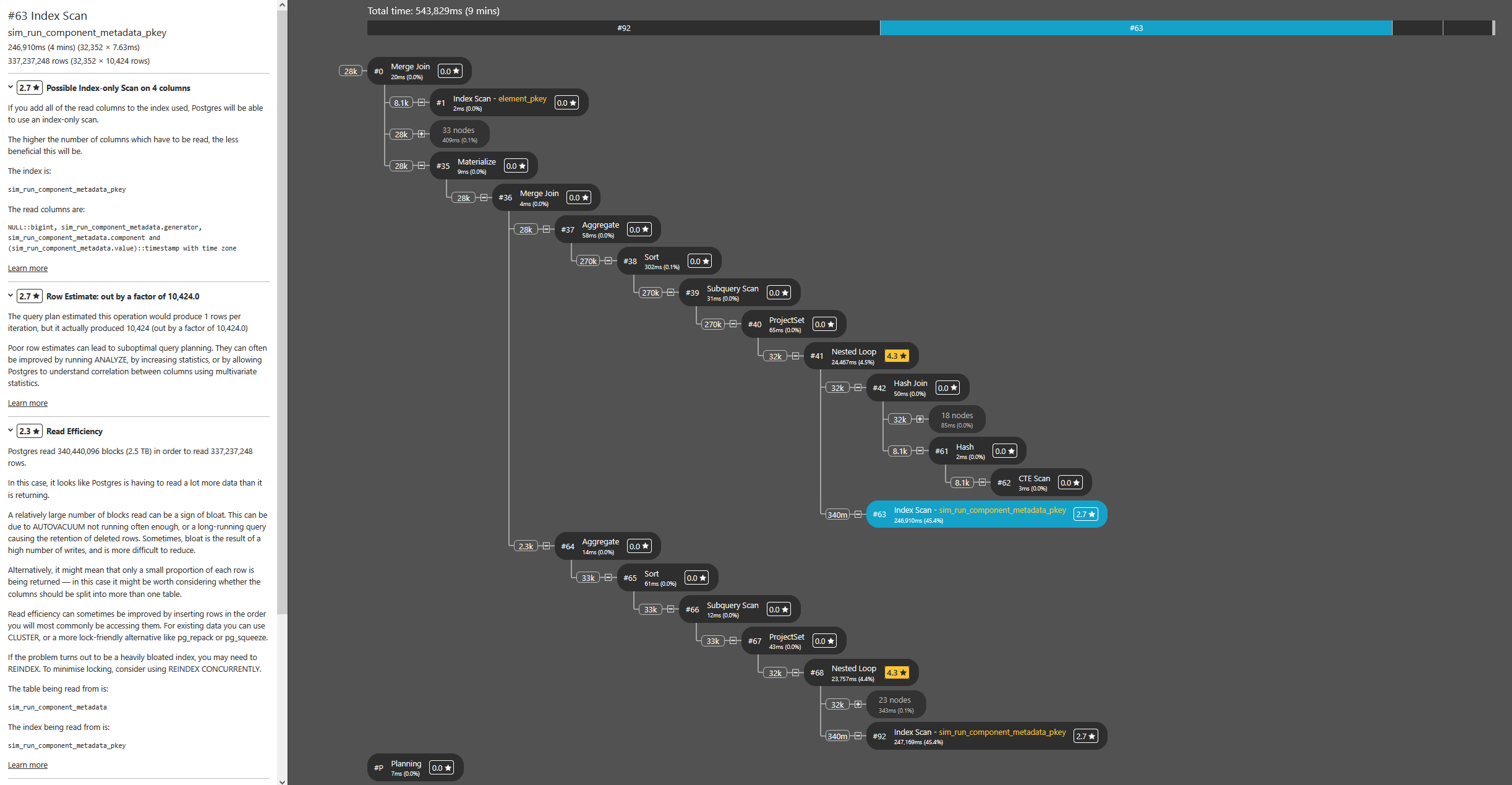

I used pgMustard to check the execution plans and give some hints. But, just like the estimated rows in the plan, it does not match the real amount of available data in the table:

On Wed, 2 Apr 2025 at 13:38, Renan Alves Fonseca <renanfonseca@gmail.com> wrote:

Clearly, the statistics collected for those tables are not good

enough. You can see the estimated frequency for the most common values

with the following query:

select attname, most_common_vals, most_common_freqs

from pg_stats

where tablename = 'samples' and attname in (values ('i'),('j'));

Replace 'samples' and 'i','j' by the table name and columns you want to inspect.

Then you can try to improve the quality of these statistics:

set default_statistics_target = 10000;

analyze [your-table];

I hope this solve the problem in the case of a simple WHERE

clause. Then, you can also create multivariate statistics to handle

complex WHERE clauses.

See https://www.postgresql.org/docs/current/multivariate-statistics-examples.html#MCV-LISTS

Best regards,

Renan

Chris Joysn <joysn71@gmail.com> writes:

> I analyzed all tables which are involved in the query, additionally I added an index to the table and columns which are

> relevant for the query on the CTE.

> I declared that CTE as not materialzed as well.

> The table in question has 1.7mio records, and the query fetches 10400 of those rows.

> But the amount of estimated rows is way off that numbers.

> The access of the table using index scan improved quite a lot compared to the previous approach, but sorting that

> intermediate result remains very costly:

> https://explain.dalibo.com/plan/3h20dce4ge93ca71

>

> btw: following environment:

> PostgreSQL 16.6 on x86_64-pc-linux-musl, compiled by gcc (Alpine 14.2.0) 14.2.0, 64-bit

> |name|current_setting|source|

> |----|---------------|------|

> |autovacuum_max_workers|10|configuration file|

> |autovacuum_naptime|10s|configuration file|

> |checkpoint_completion_target|0.9|configuration file|

> |client_encoding|UTF8|client|

> |DateStyle|ISO, MDY|client|

> |default_statistics_target|100|configuration file|

> |default_text_search_config|pg_catalog.english|configuration file|

> |default_toast_compression|lz4|configuration file|

> |dynamic_shared_memory_type|posix|configuration file|

> |effective_cache_size|48241MB|configuration file|

> |effective_io_concurrency|256|configuration file|

> |extra_float_digits|3|session|

> |jit|off|configuration file|

> |lc_messages|en_US.utf8|configuration file|

> |lc_monetary|en_US.utf8|configuration file|

> |lc_numeric|en_US.utf8|configuration file|

> |lc_time|en_US.utf8|configuration file|

> |listen_addresses|*|configuration file|

> |log_timezone|UTC|configuration file|

> |maintenance_work_mem|2047MB|configuration file|

> |max_connections|100|configuration file|

> |max_locks_per_transaction|1024|configuration file|

> |max_parallel_workers|16|configuration file|

> |max_parallel_workers_per_gather|4|configuration file|

> |max_wal_size|1GB|configuration file|

> |max_worker_processes|27|configuration file|

> |min_wal_size|512MB|configuration file|

> |random_page_cost|1.1|configuration file|

> |search_path|public, public, "$user"|session|

> |shared_buffers|16080MB|configuration file|

> |tcp_keepalives_idle|5|configuration file|

> |timescaledb.last_tuned|2025-02-10T08:58:31Z|configuration file|

> |timescaledb.last_tuned_version|0.18.0|configuration file|

> |timescaledb.max_background_workers|16|configuration file|

> |timescaledb.telemetry_level|basic|configuration file|

> |TimeZone|Europe/Vienna|client|

> |wal_buffers|16MB|configuration file|

> |work_mem|20582kB|configuration file|

>

> On Tue, 1 Apr 2025 at 22:03, Renan Alves Fonseca <renanfonseca@gmail.com> wrote:

>

> Chris Joysn <joysn71@gmail.com> writes:

>

> > Hello,

> > I have an issue when using CTEs. A query, which consists of multiple CTEs, runs usually rather fast (~5s on my

> > environment). But it turned out that using one CTE can lead to execution times of up to one minute.

> > That CTE is used two times within the query. In the CTE there are 2600 rows, compared to results of the other CTEs

> its a

> > fraction of the data.

> > When replacing this CTE and use the original table instead in the jions, the query performs nicely.

> > However, it is not always like this. Running the same query on a almost same set of data, quantity wise, may give

> indeed

> > good performance when using that CTE.

> > This is the slow performing query using CTE:

> > https://explain.dalibo.com/plan/45ce86d9cfge14c7

> > And this is the fast performing query without that CTE:

> > https://explain.dalibo.com/plan/4abgc4773gg349b4

> >

> > The query runs on the very same environment and data.

> > What can be the issue here and how can I address it?

>

> Hi,

>

> the planner gets really bad estimates on the number of rows that the

> first two CTE return. It is the same situation in both queries. It is

> just an accident that one of them works fine.

>

> We need to understand why these estimates are wrong. The protocol to fix

> this kind of issues is to apply ANALYZE so that the statistics get

> update. You can try that, but my guess is that we are dealing with a

> generic plan here. So, try to replace the value of $simRunId by the

> actual value and see if the plan changes.

>

> Best regards,

> Renan

Attachment

pgsql-performance by date: