Thread: a plpgsql bug

hello!

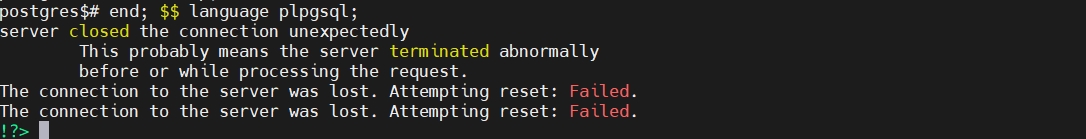

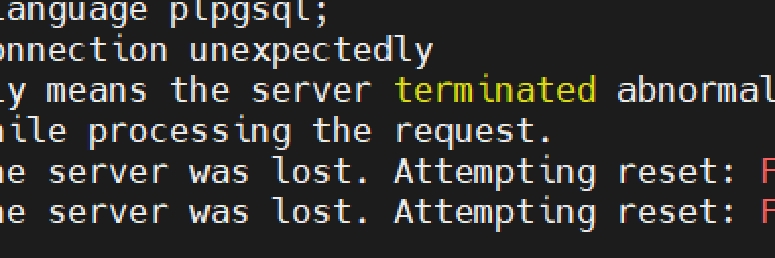

I found a problem in plpgsql. When there is a large loop in plpgsql, it is found that the change of search_path will cause memory exhaustion and thus disconnect the connection.

The test examples are as follows:

1 create a schema

create schema test_schema;

2 create a function create or replace function test_schema.test_f(id integer) returns integer as $$ declare var2 integer := 1; begin if id % 4 = 1 then return var2 + 2; elseif id % 4 = 2 then return var2 + 3; elseif id % 4 = 3 then return var2 + 4; else return var2; end if; end; $$ language plpgsql;

3 a loop in plpgsql,which wil result disconnection. do $$ declare var1 integer; begin for id in 1 .. 10000000 LOOP set search_path to test_schema; var1 = test_schema.test_f(id); set search_path to public; var1 = test_schema.test_f(id); end loop; end; $$ language plpgsql;

daidewei@highgo.com

Attachment

On Mon, Sep 18, 2023 at 11:46 PM daidewei@highgo.com <daidewei@highgo.com> wrote:

I found a problem in plpgsql. When there is a large loop in plpgsql, it is found that the change of search_path will cause memory exhaustion and thus disconnect the connection.

It is impossible to prevent queries from exhausting memory so the fact that this one does isn't an indication of a bug on its own. I'm having trouble imagining a reasonable use case for this pattern of changing search_path frequently in a loop within a single execution of a function. That said, I'm not in a position to judge how easy or difficult an improvement in this area may be.

David J.

"David G. Johnston" <david.g.johnston@gmail.com> writes:

> On Mon, Sep 18, 2023 at 11:46 PM daidewei@highgo.com <daidewei@highgo.com>

> wrote:

>> I found a problem in plpgsql. When there is a large loop in plpgsql,

>> it is found that the change of search_path will cause memory exhaustion and

>> thus disconnect the connection.

> It is impossible to prevent queries from exhausting memory so the fact that

> this one does isn't an indication of a bug on its own. I'm having trouble

> imagining a reasonable use case for this pattern of changing search_path

> frequently in a loop within a single execution of a function. That said,

> I'm not in a position to judge how easy or difficult an improvement in this

> area may be.

I poked into this a bit with valgrind. It seems that the problem

is that the changes to search_path thrash the "simple expression"

mechanism in plpgsql, such that it has to re-plan the various

expressions in the called function each time through. It's good about

tracking the actual cached plans and not leaking those, but what is

getting leaked into transaction-lifespan memory is the data structures

made by

expr->expr_simple_state =

ExecInitExprWithParams(expr->expr_simple_expr,

econtext->ecxt_param_list_info);

We could conceivably reclaim that data if we were willing to set up

yet another per-expression memory context to hold it. That seems

like rather a high overhead though.

The given test case is obviously a bit artificial, but I think it

may be a simplification of fairly plausible use-cases. The triggering

condition is that the same textual expression in a plpgsql function

gets executed repeatedly with different search_path settings, which

doesn't seem that unreasonable.

Perhaps another approach could be to assume that only a small number

of distinct search_path settings will be used in any one transaction,

and cache a separate plan and estate for each one. That would have

the nice side-effect of avoiding the replanning overhead, but then

we'd have to figure out how to manage the cache and keep it from

blowing out.

regards, tom lane

I find another example which doesn't change search_path,but use function 'SECURITY DEFINER'

1 create a user

./psql -dpostgres

create user test_user;

grant all on database postgres to test_user;

2 use the new user to logon and create function

./psql -dpostgres -U test_user

create schema test_user;

create or replace function test_user.test_m(id integer) returns integer as

$$

declare

var2 integer := 1;

begin

if id % 4 = 1 then

return var2 + 2;

elseif id % 4 = 2 then

return var2 + 3;

elseif id % 4 = 3 then

return var2 + 4;

else

return var2;

end if;

end; $$ language plpgsql;

create or replace function test_user.test_f(id integer) returns integer SECURITY DEFINER as

$$

declare

var1 integer := 1;

begin

var1 := test_user.test_m(23);

return var1;

end; $$ language plpgsql;

3 execute

./psql -dpostgres

do $$

declare

var1 integer;

begin

for id in 1 .. 10000000 LOOP

var1 = test_user.test_m(id);

var1 = test_user.test_f(id);

end loop;

end; $$ language plpgsql;

daidewei@highgo.com

"David G. Johnston" <david.g.johnston@gmail.com> writes:> On Mon, Sep 18, 2023 at 11:46 PM daidewei@highgo.com <daidewei@highgo.com>> wrote:>> I found a problem in plpgsql. When there is a large loop in plpgsql,>> it is found that the change of search_path will cause memory exhaustion and>> thus disconnect the connection.> It is impossible to prevent queries from exhausting memory so the fact that> this one does isn't an indication of a bug on its own. I'm having trouble> imagining a reasonable use case for this pattern of changing search_path> frequently in a loop within a single execution of a function. That said,> I'm not in a position to judge how easy or difficult an improvement in this> area may be.I poked into this a bit with valgrind. It seems that the problemis that the changes to search_path thrash the "simple expression"mechanism in plpgsql, such that it has to re-plan the variousexpressions in the called function each time through. It's good abouttracking the actual cached plans and not leaking those, but what isgetting leaked into transaction-lifespan memory is the data structuresmade byexpr->expr_simple_state =ExecInitExprWithParams(expr->expr_simple_expr,econtext->ecxt_param_list_info);We could conceivably reclaim that data if we were willing to set upyet another per-expression memory context to hold it. That seemslike rather a high overhead though.The given test case is obviously a bit artificial, but I think itmay be a simplification of fairly plausible use-cases. The triggeringcondition is that the same textual expression in a plpgsql functiongets executed repeatedly with different search_path settings, whichdoesn't seem that unreasonable.Perhaps another approach could be to assume that only a small numberof distinct search_path settings will be used in any one transaction,and cache a separate plan and estate for each one. That would havethe nice side-effect of avoiding the replanning overhead, but thenwe'd have to figure out how to manage the cache and keep it fromblowing out.regards, tom lane

Attachment

maybe when function compiling,swith to its owner

daidewei@highgo.com

I find another example which doesn't change search_path,but use function 'SECURITY DEFINER'1 create a user./psql -dpostgrescreate user test_user;grant all on database postgres to test_user;2 use the new user to logon and create function./psql -dpostgres -U test_usercreate schema test_user;create or replace function test_user.test_m(id integer) returns integer as$$declarevar2 integer := 1;beginif id % 4 = 1 thenreturn var2 + 2;elseif id % 4 = 2 thenreturn var2 + 3;elseif id % 4 = 3 thenreturn var2 + 4;elsereturn var2;end if;end; $$ language plpgsql;create or replace function test_user.test_f(id integer) returns integer SECURITY DEFINER as$$declarevar1 integer := 1;beginvar1 := test_user.test_m(23);return var1;end; $$ language plpgsql;3 execute./psql -dpostgresdo $$declarevar1 integer;beginfor id in 1 .. 10000000 LOOPvar1 = test_user.test_m(id);var1 = test_user.test_f(id);end loop;end; $$ language plpgsql;daidewei@highgo.com"David G. Johnston" <david.g.johnston@gmail.com> writes:> On Mon, Sep 18, 2023 at 11:46 PM daidewei@highgo.com <daidewei@highgo.com>> wrote:>> I found a problem in plpgsql. When there is a large loop in plpgsql,>> it is found that the change of search_path will cause memory exhaustion and>> thus disconnect the connection.> It is impossible to prevent queries from exhausting memory so the fact that> this one does isn't an indication of a bug on its own. I'm having trouble> imagining a reasonable use case for this pattern of changing search_path> frequently in a loop within a single execution of a function. That said,> I'm not in a position to judge how easy or difficult an improvement in this> area may be.I poked into this a bit with valgrind. It seems that the problemis that the changes to search_path thrash the "simple expression"mechanism in plpgsql, such that it has to re-plan the variousexpressions in the called function each time through. It's good abouttracking the actual cached plans and not leaking those, but what isgetting leaked into transaction-lifespan memory is the data structuresmade byexpr->expr_simple_state =ExecInitExprWithParams(expr->expr_simple_expr,econtext->ecxt_param_list_info);We could conceivably reclaim that data if we were willing to set upyet another per-expression memory context to hold it. That seemslike rather a high overhead though.The given test case is obviously a bit artificial, but I think itmay be a simplification of fairly plausible use-cases. The triggeringcondition is that the same textual expression in a plpgsql functiongets executed repeatedly with different search_path settings, whichdoesn't seem that unreasonable.Perhaps another approach could be to assume that only a small numberof distinct search_path settings will be used in any one transaction,and cache a separate plan and estate for each one. That would havethe nice side-effect of avoiding the replanning overhead, but thenwe'd have to figure out how to manage the cache and keep it fromblowing out.regards, tom lane