Thread: pgbench bug / limitation

Hello Team,

I was using pgbench to run benchmarking tests on our postgres server and came across this issue which I feel is pgbench bug.

I had initialized the test DB with scale factor 200. And then started the test with clients (-c 150) but it never worked until I reduced the clients to 115.

Below is the error message.

OS: Windows 10

Version: pgbench (PostgreSQL) 12.2

Max Connection allowed on Server: 800

C:\Program Files\PostgreSQL\12\bin>pgbench.exe -i -s 200 -h <DBServerName> -p 9432 -U DBUser testdb

C:\Program Files\PostgreSQL\12\bin>pgbench.exe -c 122 -r -T 3600 -h <DBServerName> -p 9432 -U DBUser testdb

Password:

starting vacuum...end.

too many client connections for select()

C:\Program Files\PostgreSQL\12\bin>pgbench.exe -c 120 -r -T 3600 -h <DBServerName> -p 9432 -U DBUser testdb

Password:

starting vacuum...end.

too many client connections for select()

Thanks

Manish

"Jawarilal, Manish" <Manish.Jawarilal@dell.com> writes:

> OS: Windows 10

> C:\Program Files\PostgreSQL\12\bin>pgbench.exe -c 120 -r -T 3600 -h <DBServerName> -p 9432 -U DBUser testdb

> too many client connections for select()

Yeah ... pgbench can support fairly large connection counts on platforms

that have ppoll(), but Windows does not. Without ppoll() we fall back

to select() which may not allow more than 100 or so.

Having said that ... it looks like pgbench thinks it can override

Windows' default setting:

#ifdef WIN32

#define FD_SETSIZE 1024 /* must set before winsock2.h is included */

#endif

and some googling confirms that indeed that should work. How did you

build or come by this copy of pgbench?

regards, tom lane

Hello Tom,

Thanks a ton for such quick reply. Ok, so ppoll() would be available on Linux right..?

I just installed postgres 12.2 on windows & got it as part of the install.

What would you advise me to do..?

Thanks

Manish

-----Original Message-----

From: Tom Lane <tgl@sss.pgh.pa.us>

Sent: Thursday, May 28, 2020 11:02 PM

To: Jawarilal, Manish

Cc: pgsql-bugs@lists.postgresql.org

Subject: Re: pgbench bug / limitation

[EXTERNAL EMAIL]

"Jawarilal, Manish" <Manish.Jawarilal@dell.com> writes:

> OS: Windows 10

> C:\Program Files\PostgreSQL\12\bin>pgbench.exe -c 120 -r -T 3600 -h

> <DBServerName> -p 9432 -U DBUser testdb too many client connections

> for select()

Yeah ... pgbench can support fairly large connection counts on platforms that have ppoll(), but Windows does not.

Withoutppoll() we fall back to select() which may not allow more than 100 or so.

Having said that ... it looks like pgbench thinks it can override Windows' default setting:

#ifdef WIN32

#define FD_SETSIZE 1024 /* must set before winsock2.h is included */

#endif

and some googling confirms that indeed that should work. How did you build or come by this copy of pgbench?

regards, tom lane

"Jawarilal, Manish" <Manish.Jawarilal@dell.com> writes:

> I just installed postgres 12.2 on windows & got it as part of the install.

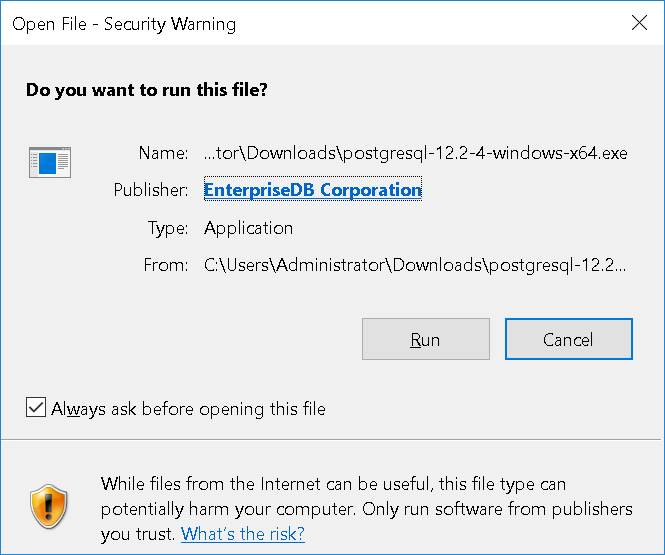

Well, I was hoping for more detail than that. Did you use EDB's

installer, or something else?

regards, tom lane

Hello Lane,

Sorry my bad, yes I downloaded from www.enterprisedb.com

Thanks

Manish

-----Original Message-----

From: Tom Lane <tgl@sss.pgh.pa.us>

Sent: Friday, May 29, 2020 8:15 PM

To: Jawarilal, Manish

Cc: pgsql-bugs@lists.postgresql.org

Subject: Re: pgbench bug / limitation

[EXTERNAL EMAIL]

"Jawarilal, Manish" <Manish.Jawarilal@dell.com> writes:

> I just installed postgres 12.2 on windows & got it as part of the install.

Well, I was hoping for more detail than that. Did you use EDB's installer, or something else?

regards, tom lane

Attachment

"Jawarilal, Manish" <Manish.Jawarilal@dell.com> writes: > Sorry my bad, yes I downloaded from www.enterprisedb.com<http://www.enterprisedb.com> Hmph. Seems like that FD_SETSIZE manipulation isn't doing what we expect it to, then. But Windows isn't my thing, so somebody else will have to investigate that. regards, tom lane

On Tue, 2 Jun 2020 at 03:59, Tom Lane <tgl@sss.pgh.pa.us> wrote:

> Hmph. Seems like that FD_SETSIZE manipulation isn't doing what we expect

> it to, then. But Windows isn't my thing, so somebody else will have to

> investigate that.

I had a look at this and it appears like we might be making some

assumptions about what socket() returns that perhaps we shouldn't be.

I added the following line to the top of add_socket_to_set():

printf("add_socket_to_set fd = %d\n", fd);

On Linux, using CFLAGS="-D PGBENCH_USE_SELECT", I see:

$ pgbench -c 122 -r -t 1 postgres

starting vacuum...end.

add_socket_to_set fd = 3

add_socket_to_set fd = 4

add_socket_to_set fd = 5

add_socket_to_set fd = 6

add_socket_to_set fd = 7

add_socket_to_set fd = 8

<trim>

However, on Windows, I get:

>pgbench -c 122 -r -T 3600 postgres

starting vacuum...end.

add_socket_to_set fd = 392

add_socket_to_set fd = 388

add_socket_to_set fd = 408

add_socket_to_set fd = 404

add_socket_to_set fd = 412

add_socket_to_set fd = 420

<trim>

So it seems to me that the range test in add_socket_to_set() is

incorrectly using the socket descriptor to determine if we've added

too many descriptors to the set. I can't glean too much from the

socket() documentation for either OS about what the return value is.

I didn't see anything which indicated anything about how the return

value is determined. My guess is that on windows it's shared over all

programs and it's not on Linux.

Shouldn't: if (fd < 0 || fd >= FD_SETSIZE) just become: if (idx > FD_SETSIZE) ?

David

David Rowley <dgrowleyml@gmail.com> writes:

> I added the following line to the top of add_socket_to_set():

> printf("add_socket_to_set fd = %d\n", fd);

> However, on Windows, I get:

> add_socket_to_set fd = 392

> add_socket_to_set fd = 388

> add_socket_to_set fd = 408

> add_socket_to_set fd = 404

> add_socket_to_set fd = 412

> add_socket_to_set fd = 420

Interesting.

> Shouldn't: if (fd < 0 || fd >= FD_SETSIZE) just become: if (idx > FD_SETSIZE) ?

Certainly not, because it's the fd not the idx that is being added

into the fd_set. I am not too sure about the underlying implementation

on Windows, but on Unix-like OSes, FD_SETSIZE *is* the size of that bit

array. What you suggest would allow memory stomps.

Given your results, I'm guessing that we are indeed managing to increase

the fd_set size to 1024, but that's not enough to allow order-of-1000

connections because there are other things competing for FD identifiers.

Maybe we should just crank up the forced value of FD_SETSIZE (to, say,

8192)?

regards, tom lane

David Rowley <dgrowleyml@gmail.com> writes:

> ... I can't glean too much from the

> socket() documentation for either OS about what the return value is.

> I didn't see anything which indicated anything about how the return

> value is determined.

BTW, as far as that goes, POSIX is perfectly clear about this:

2.14. File Descriptor Allocation

All functions that open one or more file descriptors shall, unless

specified otherwise, atomically allocate the lowest numbered available

(that is, not already open in the calling process) file descriptor at

the time of each allocation.

It doesn't surprise me a lot to hear that Microsoft couldn't be

bothered to adhere to the spec, though.

regards, tom lane

On Tue, 2 Jun 2020 at 12:21, Tom Lane <tgl@sss.pgh.pa.us> wrote:

>

> David Rowley <dgrowleyml@gmail.com> writes:

> > Shouldn't: if (fd < 0 || fd >= FD_SETSIZE) just become: if (idx > FD_SETSIZE) ?

>

> Certainly not, because it's the fd not the idx that is being added

> into the fd_set. I am not too sure about the underlying implementation

> on Windows, but on Unix-like OSes, FD_SETSIZE *is* the size of that bit

> array. What you suggest would allow memory stomps.

Looks like I didn't dig deep enough. The struct for the fs_set is defined as:

typedef struct fd_set {

u_int fd_count; /* how many are SET? */

SOCKET fd_array[FD_SETSIZE]; /* an array of SOCKETs */

} fd_set;

So, of course, we need to ensure we don't index beyond whatever FD_SET

is set to.

The implementation of FD_SET that I see in WinSock2.h is:

#define FD_SET(fd, set) do { \

u_int __i; \

for (__i = 0; __i < ((fd_set FAR *)(set))->fd_count; __i++) { \

if (((fd_set FAR *)(set))->fd_array[__i] == (fd)) { \

break; \

} \

} \

if (__i == ((fd_set FAR *)(set))->fd_count) { \

if (((fd_set FAR *)(set))->fd_count < FD_SETSIZE) { \

((fd_set FAR *)(set))->fd_array[__i] = (fd); \

((fd_set FAR *)(set))->fd_count++; \

} \

} \

} while(0, 0)

Which is not so great. I guess the POSIX description of using the

lowest available fd is due it expecting implementations to use a

bitmask, rather than an array, like the above, of which would be more

suited for any arbitrary large integer.

> Given your results, I'm guessing that we are indeed managing to increase

> the fd_set size to 1024, but that's not enough to allow order-of-1000

> connections because there are other things competing for FD identifiers.

> Maybe we should just crank up the forced value of FD_SETSIZE (to, say,

> 8192)?

On Windows, I tried building pgbench with FD_SETSIZE set to 128. On

running I get:

starting vacuum...end.

add_socket_to_set fd = 372

pgbench: fatal: too many client connections for select()

So it does appear that the return value of socket() is not limited by

whatever FD_SETSIZE is set to. I guess that means making it larger

would reduce the chances of this error occurring. However, I was

unable to find any indication of the range of values that we can

expect socket() to return on Windows. Going by the fd_set struct I

pasted above, we certainly can't make FD_SETSIZE too large.

David

David Rowley <dgrowleyml@gmail.com> writes:

> Looks like I didn't dig deep enough. The struct for the fs_set is defined as:

> typedef struct fd_set {

> u_int fd_count; /* how many are SET? */

> SOCKET fd_array[FD_SETSIZE]; /* an array of SOCKETs */

> } fd_set;

Oh, how interesting. So that's a completely different implementation

than what Unix systems use. On my Linux box, for example, <sys/select.h>

has (slightly edited for clarity):

#define __NFDBITS (8 * (int) sizeof (__fd_mask))

/* fd_set for select and pselect. */

typedef struct

{

__fd_mask __fds_bits[__FD_SETSIZE / __NFDBITS];

} fd_set;

so that FD_SETSIZE is constraining the largest FD *value* that can be

represented in the bit-array. Windows' implementation constrains the

number of FDs, but not their numerical values.

It looks like we have to have a different test for fd_set overrun

on Windows than on other platforms.

> The implementation of FD_SET that I see in WinSock2.h is:

> #define FD_SET(fd, set) do { \

> u_int __i; \

> for (__i = 0; __i < ((fd_set FAR *)(set))->fd_count; __i++) { \

> if (((fd_set FAR *)(set))->fd_array[__i] == (fd)) { \

> break; \

> } \

> } \

> if (__i == ((fd_set FAR *)(set))->fd_count) { \

> if (((fd_set FAR *)(set))->fd_count < FD_SETSIZE) { \

> ((fd_set FAR *)(set))->fd_array[__i] = (fd); \

> ((fd_set FAR *)(set))->fd_count++; \

> } \

> } \

> } while(0, 0)

> Which is not so great.

That's putting it mildly :-(. This is O(N^2) in the number of values

that get put into the set --- and since add_socket_to_set is part of

the event loop in pgbench, that means the performance problem is

going to be easily visible with lots of sockets.

I had been wondering earlier whether we should add another,

Windows-specific implementation of add_socket_to_set and friends.

It's starting to look like we should, because there's nothing that's

failing to suck about how Microsoft has done this. Non standards

compliant *and* abysmally performant is a bad combination.

BTW, I see that POSIX says (in <sys/select.h>)

FD_SETSIZE

Maximum number of file descriptors in an fd_set structure.

but the select(2) page says

The behavior of these macros is undefined if the fd argument is less

than 0 or greater than or equal to FD_SETSIZE, or if fd is not a valid

file descriptor, or if any of the arguments are expressions with

side-effects.

So a language-lawyer could make a case that Microsoft's implementation

is within the letter of the spec. Still, we have to keep the range

check as it stands for everyone else, so that doesn't help us.

regards, tom lane

Attached is a blind attempt at working around the issue based on what you

show below, that I cannot test.

> typedef struct fd_set {

> u_int fd_count; /* how many are SET? */

> SOCKET fd_array[FD_SETSIZE]; /* an array of SOCKETs */

> } fd_set;

>

> #define FD_SET(fd, set) do { \

> u_int __i; \

> for (__i = 0; __i < ((fd_set FAR *)(set))->fd_count; __i++) { \

> if (((fd_set FAR *)(set))->fd_array[__i] == (fd)) { \

> break; \

> } \

> } \

> if (__i == ((fd_set FAR *)(set))->fd_count) { \

> if (((fd_set FAR *)(set))->fd_count < FD_SETSIZE) { \

> ((fd_set FAR *)(set))->fd_array[__i] = (fd); \

> ((fd_set FAR *)(set))->fd_count++; \

> } \

> } \

> } while(0, 0)

--

Fabien.

Attachment

On Tue, 2 Jun 2020 at 23:27, Fabien COELHO <coelho@cri.ensmp.fr> wrote: > Attached is a blind attempt at working around the issue based on what you > show below, that I cannot test. I suppose we might be able to do something like that. It does expose us to the implementation of Microsoft's fd_set struct, but surely the shape of that must be pretty much fixed since if it were to be changed then software that's already compiled would break. I tested the patch on a Windows machine and it seems to work. I'm not much a fan of the naming of the new macro though. Wouldn't it be better to reverse the logic and call it IS_VALID_FD? David

Hello David, > On Tue, 2 Jun 2020 at 23:27, Fabien COELHO <coelho@cri.ensmp.fr> wrote: >> Attached is a blind attempt at working around the issue based on what you >> show below, that I cannot test. > > I suppose we might be able to do something like that. It does expose > us to the implementation of Microsoft's fd_set struct, but surely the > shape of that must be pretty much fixed since if it were to be changed > then software that's already compiled would break. Yep. I cannot seen how to do it without some assumption about the underlying implementation. I agree that it breaks the very principle of the interface, which is to hide fdset implementation details. > I tested the patch on a Windows machine Thanks. > and it seems to work. Good. > I'm not much a fan of the naming of the new macro though. Wouldn't it > be better to reverse the logic and call it IS_VALID_FD? I hesitated. Here it is with a inversion/renaming, and a check that fd is positive on windows. I renamed to "FD_IS_VALID" which seems to read better. -- Fabien.

Attachment

David Rowley <dgrowleyml@gmail.com> writes:

> On Tue, 2 Jun 2020 at 23:27, Fabien COELHO <coelho@cri.ensmp.fr> wrote:

>> Attached is a blind attempt at working around the issue based on what you

>> show below, that I cannot test.

> I suppose we might be able to do something like that. It does expose

> us to the implementation of Microsoft's fd_set struct, but surely the

> shape of that must be pretty much fixed since if it were to be changed

> then software that's already compiled would break.

More to the point, this does nothing to get around the O(N^2) cost of

adding N FDs to the fd_set with Microsoft's implementation; so I do not

think it's really going to fix the problem for people who want to use

lots of connections on Windows.

The idea that I vaguely had was to build our own array of socket FDs

(bypassing the unnecessary de-duplication logic in FD_SET) and then call

WaitForMultipleObjects() or similar directly. This would of course

be quite Windows-specific, which'd be annoying, but it seems like

getting out of using select() on Windows wouldn't be a bad thing.

Trying to make the same code work with two basically different models

of what a fd_set is seems like a recipe for pain. This would also get

us out from under the hack of redefining FD_SETSIZE.

Alternatively, if we're going to get in bed with the internals of

Windows' fd_set implementation anyway, we could implement our own

version of FD_SET that doesn't do the de-duplication.

regards, tom lane

Hi, On June 5, 2020 9:45:47 AM PDT, Tom Lane <tgl@sss.pgh.pa.us> wrote: >The idea that I vaguely had was to build our own array of socket FDs >(bypassing the unnecessary de-duplication logic in FD_SET) and then >call >WaitForMultipleObjects() or similar directly. This would of course >be quite Windows-specific, which'd be annoying, but it seems like >getting out of using select() on Windows wouldn't be a bad thing. >Trying to make the same code work with two basically different models >of what a fd_set is seems like a recipe for pain. This would also get >us out from under the hack of redefining FD_SETSIZE. IIRC WaitForMultiple* only supports 64 objects or such. Which might be problematic here. Andres -- Sent from my Android device with K-9 Mail. Please excuse my brevity.

Andres Freund <andres@anarazel.de> writes:

> On June 5, 2020 9:45:47 AM PDT, Tom Lane <tgl@sss.pgh.pa.us> wrote:

>> The idea that I vaguely had was to build our own array of socket FDs

>> (bypassing the unnecessary de-duplication logic in FD_SET) and then

>> call WaitForMultipleObjects() or similar directly.

> IIRC WaitForMultiple* only supports 64 objects or such. Which might be problematic here.

Ugh, so it does. I'd also just noted that its timeout resolution is

only in msec, which is exactly why we want to use ppoll() not poll()

here on Unix-oid OS's. So WaitForMultipleObjects() is out.

I still suppose that select(2) is not a native API for Windows. Since

we know that it can be made to support more than 64 FDs, it must not

be built on top of WaitForMultipleObjects ... but then what *is* it

built on?

regards, tom lane

Hi, On 2020-06-05 13:32:35 -0400, Tom Lane wrote: > Andres Freund <andres@anarazel.de> writes: > > On June 5, 2020 9:45:47 AM PDT, Tom Lane <tgl@sss.pgh.pa.us> wrote: > >> The idea that I vaguely had was to build our own array of socket FDs > >> (bypassing the unnecessary de-duplication logic in FD_SET) and then > >> call WaitForMultipleObjects() or similar directly. > > > IIRC WaitForMultiple* only supports 64 objects or such. Which might be problematic here. > > Ugh, so it does. I'd also just noted that its timeout resolution is > only in msec, which is exactly why we want to use ppoll() not poll() > here on Unix-oid OS's. So WaitForMultipleObjects() is out. > > I still suppose that select(2) is not a native API for Windows. Since > we know that it can be made to support more than 64 FDs, it must not > be built on top of WaitForMultipleObjects ... but then what *is* it > built on? I don't know. I only remembered this limitation because at some point I was looking at making the latch code cope with large numbers of FDs (e.g. for an append over FDWs). IIRC one basically needs helper threads or something. I'm fairly sure one can easily scale to large numbers of sockets on windows by using completion based APIs, but that doesn't seem like a realistic thing to use for pgbench anytime soon. Greetings, Andres Freund

Andres Freund <andres@anarazel.de> writes:

> I'm fairly sure one can easily scale to large numbers of sockets on

> windows by using completion based APIs, but that doesn't seem like a

> realistic thing to use for pgbench anytime soon.

Agreed. Seems like the best answer is to get in bed with the Windows

representation of fd_set, since we cannot avoid knowing that it is

different from other platforms' versions. I suggest that we might as

well get all the way in and dodge the FD_SETSIZE limitation altogether,

as per the attached utterly-untested draft patch.

A remaining problem with this is that in theory, repeatedly applying

socket_has_input() after the wait could also be O(N^2) (unless FD_ISSET

is way smarter than I suspect it is). In practice, that's probably not

a huge problem since there would normally be only a few FDs in the

result fd_set. Still, I'd rather see us get out from under select()

altogether on Windows ... but maybe they *don't* have a better API

anywhere. In any case, this should be a good bit better than what we

have now.

regards, tom lane

diff --git a/src/bin/pgbench/pgbench.c b/src/bin/pgbench/pgbench.c

index 08a5947a9e..e37f833879 100644

--- a/src/bin/pgbench/pgbench.c

+++ b/src/bin/pgbench/pgbench.c

@@ -27,10 +27,6 @@

*

*/

-#ifdef WIN32

-#define FD_SETSIZE 1024 /* must set before winsock2.h is included */

-#endif

-

#include "postgres_fe.h"

#include <ctype.h>

@@ -51,7 +47,11 @@

#include <poll.h>

#endif

#else /* no ppoll(), so use select() */

+#ifdef WIN32

+#define POLL_USING_WINDOWS_SELECT

+#else

#define POLL_USING_SELECT

+#endif

#ifdef HAVE_SYS_SELECT_H

#include <sys/select.h>

#endif

@@ -108,6 +108,19 @@ typedef struct socket_set

#endif /* POLL_USING_SELECT */

+#ifdef POLL_USING_WINDOWS_SELECT

+#define SOCKET_WAIT_METHOD "select"

+

+/*----------

+ * On Windows, fd_set is a struct that's effectively

+ * u_int fd_count;

+ * SOCKET fd_array[FLEXIBLE_ARRAY_MEMBER];

+ *----------

+ */

+typedef fd_set socket_set;

+

+#endif /* POLL_USING_WINDOWS_SELECT */

+

/*

* Multi-platform pthread implementations

*/

@@ -6740,6 +6753,70 @@ socket_has_input(socket_set *sa, int fd, int idx)

#endif /* POLL_USING_SELECT */

+#ifdef POLL_USING_WINDOWS_SELECT

+

+static socket_set *

+alloc_socket_set(int count)

+{

+ socket_set *sa;

+

+ sa = (socket_set *) pg_malloc(offsetof(socket_set, fd_array) +

+ sizeof(SOCKET) * count);

+ sa->fd_count = 0;

+ return sa;

+}

+

+static void

+free_socket_set(socket_set *sa)

+{

+ pg_free(sa);

+}

+

+static void

+clear_socket_set(socket_set *sa)

+{

+ FD_ZERO(sa);

+}

+

+static void

+add_socket_to_set(socket_set *sa, int fd, int idx)

+{

+ /*

+ * We do not use FD_SET() here, first because it enforces a maximum array

+ * length of FD_SETSIZE which is not relevant, and second because it

+ * uselessly de-duplicates entries, requiring O(N^2) time to add N FDs to

+ * the set.

+ */

+ Assert(idx == sa->fd_count);

+ sa->fd_array[sa->fd_count++] = fd;

+}

+

+static int

+wait_on_socket_set(socket_set *sa, int64 usecs)

+{

+ if (usecs > 0)

+ {

+ struct timeval timeout;

+

+ timeout.tv_sec = usecs / 1000000;

+ timeout.tv_usec = usecs % 1000000;

+ /* Note that Windows ignores select's first argument */

+ return select(0, sa, NULL, NULL, &timeout);

+ }

+ else

+ {

+ return select(0, sa, NULL, NULL, NULL);

+ }

+}

+

+static bool

+socket_has_input(socket_set *sa, int fd, int idx)

+{

+ return (FD_ISSET(fd, sa) != 0);

+}

+

+#endif /* POLL_USING_WINDOWS_SELECT */

+

/* partial pthread implementation for Windows */

Hello Tom, > More to the point, this does nothing to get around the O(N^2) cost of > adding N FDs to the fd_set with Microsoft's implementation; so I do not > think it's really going to fix the problem for people who want to use > lots of connections on Windows. > Alternatively, if we're going to get in bed with the internals of > Windows' fd_set implementation anyway, we could implement our own > version of FD_SET that doesn't do the de-duplication. Yes. See attached, which I cannot test. -- Fabien.

Attachment

Hello Tom, > Agreed. Seems like the best answer is to get in bed with the Windows > representation of fd_set, since we cannot avoid knowing that it is > different from other platforms' versions. I suggest that we might as > well get all the way in and dodge the FD_SETSIZE limitation altogether, > as per the attached utterly-untested draft patch. ISTM that the patch your propose, a full reimplementation of the abstract poll/select fd set interface for windows, is overkill because it adds about 80 lines of code. ISTM that just redefining some macros is enough to get the same performance benefit without adding much to the code base, but maybe I'm missing something. At least, I do not think that getting rid of the FD_SETSIZE redefinition for windows is worth all that trouble. -- Fabien.

Fabien COELHO <coelho@cri.ensmp.fr> writes:

>> Agreed. Seems like the best answer is to get in bed with the Windows

>> representation of fd_set, since we cannot avoid knowing that it is

>> different from other platforms' versions. I suggest that we might as

>> well get all the way in and dodge the FD_SETSIZE limitation altogether,

>> as per the attached utterly-untested draft patch.

> ISTM that the patch your propose, a full reimplementation of the abstract

> poll/select fd set interface for windows, is overkill because it adds

> about 80 lines of code. ISTM that just redefining some macros is enough to

> get the same performance benefit without adding much to the code base, but

> maybe I'm missing something. At least, I do not think that getting rid of

> the FD_SETSIZE redefinition for windows is worth all that trouble.

I think it's worth doing because it puts the Windows implementation at

full feature parity with Unixen, ie, there's no hard-wired limit on the

number of connections. I'm also not that thrilled with trying to make

one code path work with two fundamentally different implementations.

We've found out that just because Microsoft claims their implementation

is compatible with POSIX doesn't make it so, and I'd rather not have

warts in our source code from trying to paper over that.

Lastly, I think it's entirely likely that someone will come up with a

Windows-native implementation at some point, so that we'd have the extra

code lines anyway.

regards, tom lane

Hello Tom, My 0.02 €, which might not convince you: >> ISTM that the patch your propose, a full reimplementation of the abstract >> poll/select fd set interface for windows, is overkill because it adds >> about 80 lines of code. ISTM that just redefining some macros is enough to >> get the same performance benefit without adding much to the code base, but >> maybe I'm missing something. At least, I do not think that getting rid of >> the FD_SETSIZE redefinition for windows is worth all that trouble. > > I think it's worth doing because it puts the Windows implementation at > full feature parity with Unixen, ie, there's no hard-wired limit on the > number of connections. That is a point, although I do not find it desirable: In most pgbench settings ISTM that one thread should handle a few clients, which means waiting at most for a few handles, so that performance are not limited by pgbench itself. > I'm also not that thrilled with trying to make one code path work with > two fundamentally different implementations. Yep, but with the same interface. > We've found out that just because Microsoft claims their implementation > is compatible with POSIX doesn't make it so, Sure! > and I'd rather not have warts in our source code from trying to paper > over that. Hmmm. > Lastly, I think it's entirely likely that someone will come up with a > Windows-native implementation at some point, so that we'd have the extra > code lines anyway. When reading WaitForMultipleObjects doc, the suggestion seems to create more threads to do the waiting, by chunks of 64, and then you wait for these threads, possibly recursively. In pgbench context, it would make much more sense to advise the user to create more threads for benchmarking from the beginning, instead of having one thread for benchmarking and possibly creating more just to wait for events, so I cannot see any windows implementation that would make sense and would be worth having, at least based on this interface. So it seems to me that we would be better off with a minimal patch to work around the FD_SET check, not bother with the FD_SET overhead, not fiddle with FD_SETSIZE at all (which given the underlying n² handling seems like a bad idea anyway), and advise the user to create more threads when the limit is reached, as the attached version does. I cannot foresee a setting where over 60 clients per thread (ISTM there is at most one wait per client) would make much sense, so let's not help the user run ineffective benchmarks. Maybe we should even issue a warning when #client/#thread is high. Even if there is a bench where a thread could really handle 1000 clients (implying 1000 pg processes server side), probably the user could suffer to run that bench with 16 threads. -- Fabien.

Attachment

> I cannot foresee a setting where over 60 clients per thread (ISTM there is at > most one wait per client) would make much sense, so let's not help the user > run ineffective benchmarks. Maybe we should even issue a warning when > #client/#thread is high. Even if there is a bench where a thread could really > handle 1000 clients (implying 1000 pg processes server side), probably the > user could suffer to run that bench with 16 threads. Here is an attempt at given advice in the doc & a warning when launching. -- Fabien.

Attachment

On Sat, 6 Jun 2020 at 06:10, Tom Lane <tgl@sss.pgh.pa.us> wrote: > > Andres Freund <andres@anarazel.de> writes: > > I'm fairly sure one can easily scale to large numbers of sockets on > > windows by using completion based APIs, but that doesn't seem like a > > realistic thing to use for pgbench anytime soon. > > Agreed. Seems like the best answer is to get in bed with the Windows > representation of fd_set, since we cannot avoid knowing that it is > different from other platforms' versions. I suggest that we might as > well get all the way in and dodge the FD_SETSIZE limitation altogether, > as per the attached utterly-untested draft patch. I compiled this on Visual Studio 2017 and tested it. I didn't encounter any problems. The only thing I see in Winsock2.h that relies on FD_SETSIZE being the same size as the fd_set array is the FD_SET macro. So, I think it should be safe if these differ, like they will with your patch. We'll just need to make sure we don't use FD_SET in the future. > A remaining problem with this is that in theory, repeatedly applying > socket_has_input() after the wait could also be O(N^2) (unless FD_ISSET > is way smarter than I suspect it is). The FD_ISSET() just calls a function, so I don't know what's going on under the hood. #define FD_ISSET(fd, set) __WSAFDIsSet((SOCKET)(fd), (fd_set FAR *)(set)) However, I don't see what else it could do other than loop over the array until it finds a match. David

Hello David,

>> I suggest that we might as well get all the way in and dodge the

>> FD_SETSIZE limitation altogether, as per the attached utterly-untested

>> draft patch.

>

> I compiled this on Visual Studio 2017 and tested it. I didn't

> encounter any problems.

>

> The only thing I see in Winsock2.h that relies on FD_SETSIZE being the

> same size as the fd_set array is the FD_SET macro. So, I think it

> should be safe if these differ, like they will with your patch. We'll

> just need to make sure we don't use FD_SET in the future.

>

>> A remaining problem with this is that in theory, repeatedly applying

>> socket_has_input() after the wait could also be O(N^2) (unless FD_ISSET

>> is way smarter than I suspect it is).

>

> The FD_ISSET() just calls a function, so I don't know what's going on

> under the hood.

>

> #define FD_ISSET(fd, set) __WSAFDIsSet((SOCKET)(fd), (fd_set FAR *)(set))

>

> However, I don't see what else it could do other than loop over the

> array until it finds a match.

Independently of the wisdom of handling many client connections with just

one pgbench thread, I'm really wondering what goes on under the hood on

windows implementation of select().

AFAICS from online docs, windows native interfaces for waiting on IOs are:

- WaitForMultipleObjects

with a MAXIMUM_WAIT_OBJECTS limit which is 64.

- WSAWaitForMultipleEvents

with a WSA_MAXIMUM_WAIT_EVENTS limit which is 64.

Then their (strange) implementation of POSIX select uses FD_SETSIZE which

is, you may have guessed, 64.

Although this is consistent, M$ doc indeeds suggest that FD_SETSIZE can be

extended, but then why would the underlying implementation do a better job

(handle more fds) than the native implementations? How can one really tell

that "it works"? Maybe it just waited for the first few objects? Maybe it

did some active scan on objects to check for their status? Maybe it forked

threads to do the waiting? Maybe something else?

Having some idea of what is really happening would help to know what is

best to do in pgbench.

I'd suggest the following test, with pgbench compiled with the extended

FD_SETSIZE for windows:

script sleep.sql:

SELECT pg_sleep(150 - :client_id);

The run something like the following under some debugger:

pgbench -c 128 -f "sleep.sql"

and look at where the process is when interrupted under select? Now I

cannot run this test, because I do not have access to a windows host.

--

Fabien.