Thread: How to know transaction count in hour basic

Dear Team,

How to check, how many transactions are committed in one hour?

In database level pg_stat_database xact_commit column is there,this is giving only count.

How to get count for hour basis and minutes and seconds basis, how many transactions are committed?

How to check?

Regards,

Mallikarjunarao,

+91-8142923383.

You would just have to accumulate these values in a time-series gauge, right?

—

Sent from mobile, with due apologies for brevity and errors.

On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:

Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

Dear Team,

I can't understand how to accumulate time series gauge?

Kindly explain.

Regards,

Mallikarjunarao,

+91-8142923383.

On Wed, Oct 30, 2019 at 8:20 PM Alex Balashov <abalashov@evaristesys.com> wrote:

You would just have to accumulate these values in a time-series gauge, right?—Sent from mobile, with due apologies for brevity and errors.On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

It’s not really a concept one can explain. It’s just a mathematical operation to determine a rate of change over time.

—

Sent from mobile, with due apologies for brevity and errors.

On Oct 30, 2019, at 11:00 AM, mallikarjun t <mallit333@gmail.com> wrote:

Dear Team,I can't understand how to accumulate time series gauge?Kindly explain.Regards,Mallikarjunarao,+91-8142923383.On Wed, Oct 30, 2019 at 8:20 PM Alex Balashov <abalashov@evaristesys.com> wrote:You would just have to accumulate these values in a time-series gauge, right?—Sent from mobile, with due apologies for brevity and errors.On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

Dear Team,

Begin;

select version();

commit;

This is one transaction committed. In this one query type is select query, I want how many update and insert and delete queries are committed in one hour?

That's the concept I want. Kindly help me?

Regards,

Mallikarjunarao,

+91-8142923383.

On Wed, Oct 30, 2019 at 8:31 PM Alex Balashov <abalashov@evaristesys.com> wrote:

It’s not really a concept one can explain. It’s just a mathematical operation to determine a rate of change over time.—Sent from mobile, with due apologies for brevity and errors.On Oct 30, 2019, at 11:00 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,I can't understand how to accumulate time series gauge?Kindly explain.Regards,Mallikarjunarao,+91-8142923383.On Wed, Oct 30, 2019 at 8:20 PM Alex Balashov <abalashov@evaristesys.com> wrote:You would just have to accumulate these values in a time-series gauge, right?—Sent from mobile, with due apologies for brevity and errors.On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

You need to capture the value at the starting and ending period that you’re interested in and then calculate the delta.

I export all the stats tables out every minute and put them into history table on a different system — then query any period of interest. Sorta like Oracle’s stats pack.

There are also monitoring tools that do this all for you already.

i.e. This is the function I would use to get the information you’re looking for:

/*

Query Example:

select period_start

, period_end

, to_char(xact_commit + xact_rollback, numeric_format(10, 0)) as tran_cnt

, to_char(txid_cnt, numeric_format(10, 0)) as txid_cnt

, pg_size_pretty((blks_read + blks_hit) * 16 * 1024) as buf_read

, pg_size_pretty(blks_read * 16 * 1024) as fs_read

, to_char(blks_hit::numeric / (blks_read + blks_hit) * 100, '999D9999%') as buf_pct

, to_char(temp_files, numeric_format(15, 0)) as temp_files

, pg_size_pretty(temp_bytes) as tmp_tbl

, to_char(tup_returned, numeric_format(15, 0)) as tup_returned

, to_char(tup_inserted, numeric_format(15, 0)) as tup_inserted

, to_char(tup_updated, numeric_format(15, 0)) as tup_updated

, to_char(tup_deleted, numeric_format(15, 0)) as tup_deleted

from get_database_stat(‘dynamo', '2018-06-26'::date, '2018-06-30'::date, 60)

;

*/

create or replace function get_database_stat(_database name, _start_time timestamp(0) with time zone, _end_time timestamp(0) with time zone, _interval int default 1)

returns table (

period_start timestamp(0) with time zone,

period_end timestamp(0) with time zone,

xact_commit bigint,

xact_rollback bigint,

txid_cnt bigint,

blks_read bigint,

blks_hit bigint,

tup_returned bigint,

tup_fetched bigint,

tup_inserted bigint,

tup_updated bigint,

tup_deleted bigint,

conflicts bigint,

temp_files bigint,

temp_bytes bigint,

deadlocks bigint,

blk_read_time double precision,

blk_write_time double precision

) as $$

declare

_offset_factor int;

begin

_offset_factor := (_interval * 60) - ((extract(epoch from date_trunc('minutes', _start_time)))::int % (_interval * 60));

return query

select lag(s.hist_stat_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as period_start

, s.hist_stat_time as period_end

, (s.xact_commit - lag(s.xact_commit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_commit

, (s.xact_rollback - lag(s.xact_rollback) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_rollback

, s.xact_txid - lag(s.xact_txid) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as txid_cnt

, (s.blks_read - lag(s.blks_read) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_read

, (s.blks_hit - lag(s.blks_hit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_hit

, (s.tup_returned - lag(s.tup_returned) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_returned

, (s.tup_fetched - lag(s.tup_fetched) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_fetched

, (s.tup_inserted - lag(s.tup_inserted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_inserted

, (s.tup_updated - lag(s.tup_updated) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_updated

, (s.tup_deleted - lag(s.tup_deleted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_deleted

, (s.conflicts - lag(s.conflicts) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as conflicts

, (s.temp_files - lag(s.temp_files) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_files

, (s.temp_bytes - lag(s.temp_bytes) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_bytes

, (s.deadlocks - lag(s.deadlocks) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as deadlocks

, (s.blk_read_time - lag(s.blk_read_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_read_time

, (s.blk_write_time - lag(s.blk_write_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_write_time

from hist_stat_database s

where s.datname = _database

and s.hist_stat_time >= _start_time

and s.hist_stat_time <= _end_time

and ((extract(epoch from date_trunc('minutes', s.hist_stat_time)))::int + _offset_factor) % (_interval * 60) = 0

order by s.hist_stat_time

offset 1

;

end;

$$ language plpgsql

stable

;

On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

Dear Team,

Thanks for your support. I will check.

Regards,

Mallikarjunarao,

+91-8142923383.

On Wed, Oct 30, 2019 at 8:59 PM Rui DeSousa <rui@crazybean.net> wrote:

You need to capture the value at the starting and ending period that you’re interested in and then calculate the delta.I export all the stats tables out every minute and put them into history table on a different system — then query any period of interest. Sorta like Oracle’s stats pack.There are also monitoring tools that do this all for you already.i.e. This is the function I would use to get the information you’re looking for:/*Query Example:select period_start, period_end, to_char(xact_commit + xact_rollback, numeric_format(10, 0)) as tran_cnt, to_char(txid_cnt, numeric_format(10, 0)) as txid_cnt, pg_size_pretty((blks_read + blks_hit) * 16 * 1024) as buf_read, pg_size_pretty(blks_read * 16 * 1024) as fs_read, to_char(blks_hit::numeric / (blks_read + blks_hit) * 100, '999D9999%') as buf_pct, to_char(temp_files, numeric_format(15, 0)) as temp_files, pg_size_pretty(temp_bytes) as tmp_tbl, to_char(tup_returned, numeric_format(15, 0)) as tup_returned, to_char(tup_inserted, numeric_format(15, 0)) as tup_inserted, to_char(tup_updated, numeric_format(15, 0)) as tup_updated, to_char(tup_deleted, numeric_format(15, 0)) as tup_deletedfrom get_database_stat(‘dynamo', '2018-06-26'::date, '2018-06-30'::date, 60);*/create or replace function get_database_stat(_database name, _start_time timestamp(0) with time zone, _end_time timestamp(0) with time zone, _interval int default 1)returns table (period_start timestamp(0) with time zone,period_end timestamp(0) with time zone,xact_commit bigint,xact_rollback bigint,txid_cnt bigint,blks_read bigint,blks_hit bigint,tup_returned bigint,tup_fetched bigint,tup_inserted bigint,tup_updated bigint,tup_deleted bigint,conflicts bigint,temp_files bigint,temp_bytes bigint,deadlocks bigint,blk_read_time double precision,blk_write_time double precision) as $$declare_offset_factor int;begin_offset_factor := (_interval * 60) - ((extract(epoch from date_trunc('minutes', _start_time)))::int % (_interval * 60));return queryselect lag(s.hist_stat_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as period_start, s.hist_stat_time as period_end, (s.xact_commit - lag(s.xact_commit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_commit, (s.xact_rollback - lag(s.xact_rollback) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_rollback, s.xact_txid - lag(s.xact_txid) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as txid_cnt, (s.blks_read - lag(s.blks_read) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_read, (s.blks_hit - lag(s.blks_hit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_hit, (s.tup_returned - lag(s.tup_returned) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_returned, (s.tup_fetched - lag(s.tup_fetched) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_fetched, (s.tup_inserted - lag(s.tup_inserted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_inserted, (s.tup_updated - lag(s.tup_updated) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_updated, (s.tup_deleted - lag(s.tup_deleted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_deleted, (s.conflicts - lag(s.conflicts) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as conflicts, (s.temp_files - lag(s.temp_files) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_files, (s.temp_bytes - lag(s.temp_bytes) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_bytes, (s.deadlocks - lag(s.deadlocks) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as deadlocks, (s.blk_read_time - lag(s.blk_read_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_read_time, (s.blk_write_time - lag(s.blk_write_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_write_timefrom hist_stat_database swhere s.datname = _databaseand s.hist_stat_time >= _start_timeand s.hist_stat_time <= _end_timeand ((extract(epoch from date_trunc('minutes', s.hist_stat_time)))::int + _offset_factor) % (_interval * 60) = 0order by s.hist_stat_timeoffset 1;end;$$ language plpgsqlstable;On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

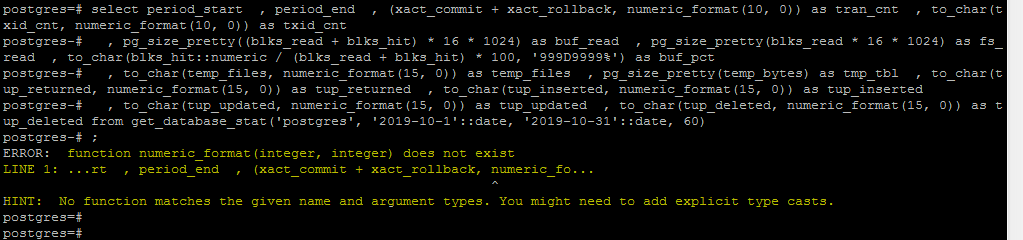

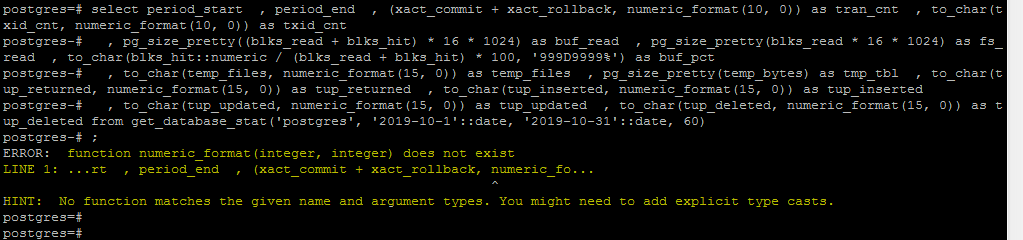

Dear Team,

How this function works, it is not working it is throwing error message ,

Kindly check that, and revert back.

Regards,

Mallikarjunarao,

+91-8142923383.

On Thu, Oct 31, 2019 at 5:04 PM mallikarjun t <mallit333@gmail.com> wrote:

Dear Team,Thanks for your support. I will check.Regards,Mallikarjunarao,+91-8142923383.On Wed, Oct 30, 2019 at 8:59 PM Rui DeSousa <rui@crazybean.net> wrote:You need to capture the value at the starting and ending period that you’re interested in and then calculate the delta.I export all the stats tables out every minute and put them into history table on a different system — then query any period of interest. Sorta like Oracle’s stats pack.There are also monitoring tools that do this all for you already.i.e. This is the function I would use to get the information you’re looking for:/*Query Example:select period_start, period_end, to_char(xact_commit + xact_rollback, numeric_format(10, 0)) as tran_cnt, to_char(txid_cnt, numeric_format(10, 0)) as txid_cnt, pg_size_pretty((blks_read + blks_hit) * 16 * 1024) as buf_read, pg_size_pretty(blks_read * 16 * 1024) as fs_read, to_char(blks_hit::numeric / (blks_read + blks_hit) * 100, '999D9999%') as buf_pct, to_char(temp_files, numeric_format(15, 0)) as temp_files, pg_size_pretty(temp_bytes) as tmp_tbl, to_char(tup_returned, numeric_format(15, 0)) as tup_returned, to_char(tup_inserted, numeric_format(15, 0)) as tup_inserted, to_char(tup_updated, numeric_format(15, 0)) as tup_updated, to_char(tup_deleted, numeric_format(15, 0)) as tup_deletedfrom get_database_stat(‘dynamo', '2018-06-26'::date, '2018-06-30'::date, 60);*/create or replace function get_database_stat(_database name, _start_time timestamp(0) with time zone, _end_time timestamp(0) with time zone, _interval int default 1)returns table (period_start timestamp(0) with time zone,period_end timestamp(0) with time zone,xact_commit bigint,xact_rollback bigint,txid_cnt bigint,blks_read bigint,blks_hit bigint,tup_returned bigint,tup_fetched bigint,tup_inserted bigint,tup_updated bigint,tup_deleted bigint,conflicts bigint,temp_files bigint,temp_bytes bigint,deadlocks bigint,blk_read_time double precision,blk_write_time double precision) as $$declare_offset_factor int;begin_offset_factor := (_interval * 60) - ((extract(epoch from date_trunc('minutes', _start_time)))::int % (_interval * 60));return queryselect lag(s.hist_stat_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as period_start, s.hist_stat_time as period_end, (s.xact_commit - lag(s.xact_commit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_commit, (s.xact_rollback - lag(s.xact_rollback) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_rollback, s.xact_txid - lag(s.xact_txid) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as txid_cnt, (s.blks_read - lag(s.blks_read) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_read, (s.blks_hit - lag(s.blks_hit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_hit, (s.tup_returned - lag(s.tup_returned) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_returned, (s.tup_fetched - lag(s.tup_fetched) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_fetched, (s.tup_inserted - lag(s.tup_inserted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_inserted, (s.tup_updated - lag(s.tup_updated) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_updated, (s.tup_deleted - lag(s.tup_deleted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_deleted, (s.conflicts - lag(s.conflicts) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as conflicts, (s.temp_files - lag(s.temp_files) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_files, (s.temp_bytes - lag(s.temp_bytes) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_bytes, (s.deadlocks - lag(s.deadlocks) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as deadlocks, (s.blk_read_time - lag(s.blk_read_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_read_time, (s.blk_write_time - lag(s.blk_write_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_write_timefrom hist_stat_database swhere s.datname = _databaseand s.hist_stat_time >= _start_timeand s.hist_stat_time <= _end_timeand ((extract(epoch from date_trunc('minutes', s.hist_stat_time)))::int + _offset_factor) % (_interval * 60) = 0order by s.hist_stat_timeoffset 1;end;$$ language plpgsqlstable;On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.

Attachment

That is a simple helper function to create a numeric format string for to_char():

create or replace function numeric_format(_percision int, _scale int)

returns varchar(100)

as $$

declare

_grpCnt int;

_decCnt int;

begin

_grpCnt := ((_percision - _scale) / 3);

_decCnt := ((_percision - _scale) % 3);

return trim(leading ',' from (repeat('9', _decCnt) || repeat('G999', _grpCnt) || 'D' || repeat('9', _scale)));

end;

$$ language plpgsql

immutable returns null on null input

;

On Oct 31, 2019, at 10:05 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How this function works, it is not working it is throwing error message ,<image.png>Kindly check that, and revert back.Regards,Mallikarjunarao,+91-8142923383.On Thu, Oct 31, 2019 at 5:04 PM mallikarjun t <mallit333@gmail.com> wrote:Dear Team,Thanks for your support. I will check.Regards,Mallikarjunarao,+91-8142923383.On Wed, Oct 30, 2019 at 8:59 PM Rui DeSousa <rui@crazybean.net> wrote:You need to capture the value at the starting and ending period that you’re interested in and then calculate the delta.I export all the stats tables out every minute and put them into history table on a different system — then query any period of interest. Sorta like Oracle’s stats pack.There are also monitoring tools that do this all for you already.i.e. This is the function I would use to get the information you’re looking for:/*Query Example:select period_start, period_end, to_char(xact_commit + xact_rollback, numeric_format(10, 0)) as tran_cnt, to_char(txid_cnt, numeric_format(10, 0)) as txid_cnt, pg_size_pretty((blks_read + blks_hit) * 16 * 1024) as buf_read, pg_size_pretty(blks_read * 16 * 1024) as fs_read, to_char(blks_hit::numeric / (blks_read + blks_hit) * 100, '999D9999%') as buf_pct, to_char(temp_files, numeric_format(15, 0)) as temp_files, pg_size_pretty(temp_bytes) as tmp_tbl, to_char(tup_returned, numeric_format(15, 0)) as tup_returned, to_char(tup_inserted, numeric_format(15, 0)) as tup_inserted, to_char(tup_updated, numeric_format(15, 0)) as tup_updated, to_char(tup_deleted, numeric_format(15, 0)) as tup_deletedfrom get_database_stat(‘dynamo', '2018-06-26'::date, '2018-06-30'::date, 60);*/create or replace function get_database_stat(_database name, _start_time timestamp(0) with time zone, _end_time timestamp(0) with time zone, _interval int default 1)returns table (period_start timestamp(0) with time zone,period_end timestamp(0) with time zone,xact_commit bigint,xact_rollback bigint,txid_cnt bigint,blks_read bigint,blks_hit bigint,tup_returned bigint,tup_fetched bigint,tup_inserted bigint,tup_updated bigint,tup_deleted bigint,conflicts bigint,temp_files bigint,temp_bytes bigint,deadlocks bigint,blk_read_time double precision,blk_write_time double precision) as $$declare_offset_factor int;begin_offset_factor := (_interval * 60) - ((extract(epoch from date_trunc('minutes', _start_time)))::int % (_interval * 60));return queryselect lag(s.hist_stat_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as period_start, s.hist_stat_time as period_end, (s.xact_commit - lag(s.xact_commit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_commit, (s.xact_rollback - lag(s.xact_rollback) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as xact_rollback, s.xact_txid - lag(s.xact_txid) over (partition by s.datname, s.stats_reset order by s.hist_stat_time) as txid_cnt, (s.blks_read - lag(s.blks_read) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_read, (s.blks_hit - lag(s.blks_hit) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blks_hit, (s.tup_returned - lag(s.tup_returned) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_returned, (s.tup_fetched - lag(s.tup_fetched) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_fetched, (s.tup_inserted - lag(s.tup_inserted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_inserted, (s.tup_updated - lag(s.tup_updated) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_updated, (s.tup_deleted - lag(s.tup_deleted) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as tup_deleted, (s.conflicts - lag(s.conflicts) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as conflicts, (s.temp_files - lag(s.temp_files) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_files, (s.temp_bytes - lag(s.temp_bytes) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as temp_bytes, (s.deadlocks - lag(s.deadlocks) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as deadlocks, (s.blk_read_time - lag(s.blk_read_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_read_time, (s.blk_write_time - lag(s.blk_write_time) over (partition by s.datname, s.stats_reset order by s.hist_stat_time)) as blk_write_timefrom hist_stat_database swhere s.datname = _databaseand s.hist_stat_time >= _start_timeand s.hist_stat_time <= _end_timeand ((extract(epoch from date_trunc('minutes', s.hist_stat_time)))::int + _offset_factor) % (_interval * 60) = 0order by s.hist_stat_timeoffset 1;end;$$ language plpgsqlstable;On Oct 30, 2019, at 10:13 AM, mallikarjun t <mallit333@gmail.com> wrote:Dear Team,How to check, how many transactions are committed in one hour?In database level pg_stat_database xact_commit column is there,this is giving only count.How to get count for hour basis and minutes and seconds basis, how many transactions are committed?How to check?Regards,Mallikarjunarao,+91-8142923383.