Thread: psql 9.3 automatic recovery in progress

I'm having some issues with my database on Ubuntu 14.x.

PostgreSQL 9.3, Odoo 7.x.

This machine is a KVM guest on a CentOS 6.x host.

It has a RAID 1 array of SSD drives dedicated to this VM.

I have detected some unexpected shutdowns; see these lines:

2016-09-12 08:59:25 PDT ERROR: missing FROM-clause entry for table "rp" at character 73

2016-09-12 08:59:25 PDT STATEMENT: select pp.default_code,pc.product_code,pp.name_template,pc.product_name,rp.name from product_product pp inner join product_customer_code pc on pc.product_id=pp.id

2016-09-12 08:59:26 PDT LOG: connection received: host=192.168.2.153 port=59335

2016-09-12 08:59:26 PDT LOG: connection authorized: user=openerp database=Mueblex

2016-09-12 09:00:01 PDT LOG: connection received: host=::1 port=43536

2016-09-12 09:00:01 PDT LOG: connection authorized: user=openerp database=template1

2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was terminated by signal 9: Killed

2016-09-12 09:00:01 PDT DETAIL: Failed process was running: select pp.default_code,pc.product_code,pp.name_template,pc.product_name,rp.name from product_product pp inner join product_customer_code pc on pc.product_id=pp.id

2016-09-12 09:00:01 PDT LOG: terminating any other active server processes

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT LOG: archiver process (PID 989) exited with exit code 1

2016-09-12 09:00:01 PDT LOG: all server processes terminated; reinitializing

2016-09-12 09:00:03 PDT LOG: database system was interrupted; last known up at 2016-09-12 08:59:27 PDT

2016-09-12 09:00:07 PDT LOG: database system was not properly shut down; automatic recovery in progress

2016-09-12 09:00:07 PDT LOG: redo starts at 29A/AF000028

2016-09-12 09:00:07 PDT LOG: record with zero length at 29A/BC001958

2016-09-12 09:00:07 PDT LOG: redo done at 29A/BC001928

2016-09-12 09:00:07 PDT LOG: last completed transaction was at log time 2016-09-12 09:00:01.768271-07

2016-09-12 09:00:08 PDT LOG: MultiXact member wraparound protections are now enabled

2016-09-12 09:00:08 PDT LOG: database system is ready to accept connections

2016-09-12 09:00:08 PDT LOG: autovacuum launcher started

2016-09-12 09:00:15 PDT LOG: connection received: host=127.0.0.1 port=45508
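The recovery messages report WAL positions (LSNs) in PostgreSQL's `hi/lo` notation: the upper and lower 32 bits of a WAL byte position, each written in hexadecimal. A minimal sketch, assuming that encoding, that roughly estimates how much WAL the recovery above replayed ("redo done at" points at the last record replayed, so the difference is approximate). The helper names are illustrative, not part of any PostgreSQL tool.

```python
# Sketch: turn textual LSNs ("hi/lo", hexadecimal) into byte positions so the
# span between "redo starts at" and "redo done at" can be estimated.


def lsn_to_int(lsn: str) -> int:
    """Convert an LSN such as '29A/AF000028' into a 64-bit byte position."""
    hi, lo = lsn.split("/")
    return (int(hi, 16) << 32) | int(lo, 16)


def replayed_bytes(redo_start: str, redo_done: str) -> int:
    """Approximate number of WAL bytes between the start and end of redo."""
    return lsn_to_int(redo_done) - lsn_to_int(redo_start)


if __name__ == "__main__":
    # Positions copied from the first recovery in the log excerpt.
    start, done = "29A/AF000028", "29A/BC001928"
    n = replayed_bytes(start, done)
    print(f"{start} -> {done}: {n} bytes (~{n / 2**20:.0f} MiB)")
```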

Later, another one:

2016-09-12 09:51:32 PDT ERROR: duplicate key value violates unique constraint "upc_scan_plant_uniq"

2016-09-12 09:51:32 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6) already exists.

2016-09-12 09:51:32 PDT STATEMENT: insert into "upc_scan" (id,"plant_id","state","name",create_uid,create_date,write_uid,write_date) values (1438,6,'draft','2016-09-12',87,(now() at time zone 'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 09:51:40 PDT ERROR: duplicate key value violates unique constraint "upc_scan_plant_uniq"

2016-09-12 09:51:40 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6) already exists.

2016-09-12 09:51:40 PDT STATEMENT: insert into "upc_scan" (id,"plant_id","state","name",create_uid,create_date,write_uid,write_date) values (1439,6,'draft','2016-09-12',87,(now() at time zone 'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 09:51:43 PDT ERROR: duplicate key value violates unique constraint "upc_scan_plant_uniq"

2016-09-12 09:51:43 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6) already exists.

2016-09-12 09:51:43 PDT STATEMENT: insert into "upc_scan" (id,"plant_id","state","name",create_uid,create_date,write_uid,write_date) values (1440,6,'draft','2016-09-12',87,(now() at time zone 'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 10:00:01 PDT LOG: connection received: host=::1 port=43667

2016-09-12 10:00:01 PDT LOG: connection authorized: user=openerp database=template1

2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was terminated by signal 9: Killed

2016-09-12 10:00:01 PDT DETAIL: Failed process was running: select pp.default_code,pc.product_code,pp.name_template,pc.product_name,rp.name from product_product pp inner join product_customer_code pc on pc.product_id=pp.id

2016-09-12 10:00:01 PDT LOG: terminating any other active server processes

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT LOG: archiver process (PID 29336) exited with exit code 1

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT LOG: all server processes terminated; reinitializing

2016-09-12 10:00:02 PDT LOG: database system was interrupted; last known up at 2016-09-12 09:58:45 PDT

2016-09-12 10:00:06 PDT LOG: database system was not properly shut down; automatic recovery in progress

2016-09-12 10:00:06 PDT LOG: redo starts at 29C/DC000028

2016-09-12 10:00:06 PDT LOG: unexpected pageaddr 29B/ED01A000 in log segment 000000010000029C000000F2, offset 106496

2016-09-12 10:00:06 PDT LOG: redo done at 29C/F2018D90

2016-09-12 10:00:06 PDT LOG: last completed transaction was at log time 2016-09-12 10:00:00.558978-07

2016-09-12 10:00:07 PDT LOG: MultiXact member wraparound protections are now enabled

2016-09-12 10:00:07 PDT LOG: database system is ready to accept connections

2016-09-12 10:00:07 PDT LOG: autovacuum launcher started

2016-09-12 10:00:11 PDT LOG: connection received: host=127.0.0.1 port=45639
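The `unexpected pageaddr` message in this excerpt locates its position by WAL segment file name and byte offset rather than by LSN. Assuming the default 16 MiB segment size and the usual 24-hex-digit segment name (timeline, log id, segment number, 8 digits each), the two can be related with a sketch like the one below; for that message it yields 29C/F201A000. The function name is hypothetical.

```python
# Sketch: map a WAL segment file name plus a byte offset back to an LSN.
# Assumes PostgreSQL's default 16 MiB WAL segments (a build-time setting)
# and the name layout: timeline (8 hex) + log id (8 hex) + segment (8 hex).

SEGMENT_SIZE = 16 * 1024 * 1024  # assumed default segment size


def segment_offset_to_lsn(segment_name: str, offset: int) -> str:
    """Return the LSN ('hi/lo', hex) of byte `offset` inside `segment_name`."""
    if len(segment_name) != 24:
        raise ValueError("expected a 24-character WAL segment name")
    if not 0 <= offset < SEGMENT_SIZE:
        raise ValueError("offset must fall inside one segment")
    log_id = int(segment_name[8:16], 16)   # upper 32 bits of the LSN
    seg_no = int(segment_name[16:24], 16)  # segment number within that log id
    lo = seg_no * SEGMENT_SIZE + offset    # lower 32 bits of the LSN
    return f"{log_id:X}/{lo:X}"


if __name__ == "__main__":
    # Segment name and offset copied from the "unexpected pageaddr" line.
    print(segment_offset_to_lsn("000000010000029C000000F2", 106496))
```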

Another one:

2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was terminated by signal 9: Killed

2016-09-12 15:00:01 PDT DETAIL: Failed process was running: SELECT "name", "model", "description", "month" FROM "etiquetas_temp"

2016-09-12 15:00:01 PDT LOG: terminating any other active server processes

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT LOG: archiver process (PID 3254) exited with exit code 1

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this server process to roll back the current transaction and exit, because another server process exited abnormally and possibly corrupted shared memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to reconnect to the database and repeat your command.

2016-09-12 15:00:02 PDT LOG: all server processes terminated; reinitializing

2016-09-12 15:00:02 PDT LOG: database system was interrupted; last known up at 2016-09-12 14:59:55 PDT

2016-09-12 15:00:06 PDT LOG: database system was not properly shut down; automatic recovery in progress

2016-09-12 15:00:07 PDT LOG: redo starts at 2A8/69000028

2016-09-12 15:00:07 PDT LOG: record with zero length at 2A8/7201B4F8

2016-09-12 15:00:07 PDT LOG: redo done at 2A8/7201B4C8

2016-09-12 15:00:07 PDT LOG: last completed transaction was at log time 2016-09-12 15:00:01.664762-07

2016-09-12 15:00:08 PDT LOG: MultiXact member wraparound protections are now enabled

2016-09-12 15:00:08 PDT LOG: database system is ready to accept connections

2016-09-12 15:00:08 PDT LOG: autovacuum launcher started
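Since the log shows several such incidents, a small script can list every backend that was killed by a signal in the full server log, which makes the times easy to compare. A minimal sketch, assuming each log entry sits on one line (not e-mail wrapped) and starts with a `%t`-style timestamp as in the excerpts; the script and log file names are placeholders.

```python
import re
import sys
from typing import Iterable, List, Tuple

# Matches entries such as:
# "2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was terminated by signal 9: Killed"
# Assumes a log_line_prefix producing "YYYY-MM-DD HH:MM:SS TZ " before the level.
KILLED_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \S+ LOG:\s+"
    r"server process \(PID (?P<pid>\d+)\) was terminated by signal (?P<sig>\d+)"
)


def killed_backends(lines: Iterable[str]) -> List[Tuple[str, int, int]]:
    """Return (timestamp, pid, signal) for each backend killed by a signal."""
    hits = []
    for line in lines:
        m = KILLED_RE.match(line)
        if m:
            hits.append((m["ts"], int(m["pid"]), int(m["sig"])))
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical paths): python killed_backends.py /path/to/postgresql.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for ts, pid, sig in killed_backends(fh):
            print(f"{ts}  pid={pid}  signal={sig}")
```

The regex tolerates one or more spaces after `LOG:`, since the wrapped copies in this message may not preserve the server's original spacing.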

As far as I can see, this is not caused by server load; maximum idle is 79%.

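Output of `free` (default units, KiB):

| | total | used | free | shared | buffers | cached |
|---|---:|---:|---:|---:|---:|---:|
| Mem: | 82493268 | 82027060 | 466208 | 3157228 | 136084 | 77526460 |
| -/+ buffers/cache: | | 4364516 | 78128752 | | | |
| Swap: | 1000444 | 9540 | 990904 | | | |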
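In the older procps `free` shown here, the `-/+ buffers/cache` row is derived from the `Mem:` row: used minus buffers and cache, and free plus buffers and cache. A small sketch of that arithmetic, assuming KiB units (the `free` default), shows how much memory processes actually hold versus page cache. The function name is illustrative.

```python
# Sketch: recompute the "-/+ buffers/cache" row of old-style `free` output
# from its "Mem:" row. Values are assumed to be KiB, the default unit.


def buffers_cache_adjusted(used: int, free: int, buffers: int, cached: int):
    """Return (used, free) with buffers and page cache treated as reclaimable."""
    reclaimable = buffers + cached
    return used - reclaimable, free + reclaimable


if __name__ == "__main__":
    # "Mem:" and "Swap:" rows copied from the output above.
    used, free, buffers, cached = 82027060, 466208, 136084, 77526460
    swap_total, swap_used = 1000444, 9540
    app_used, app_free = buffers_cache_adjusted(used, free, buffers, cached)
    print(f"used by processes: {app_used} KiB (~{app_used / 2**20:.1f} GiB)")
    print(f"free incl. cache:  {app_free} KiB (~{app_free / 2**20:.1f} GiB)")
    print(f"swap used:         {swap_used / swap_total:.1%}")
```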

Swap usage is low. I will add more memory next week.

In your experience, does this look like a hardware issue?

Thanks for your time!!!

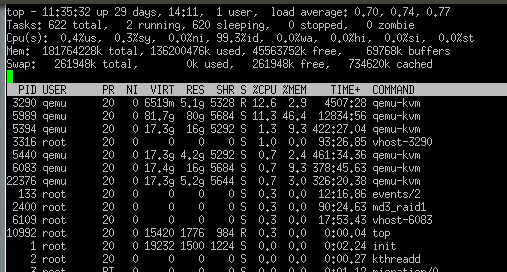

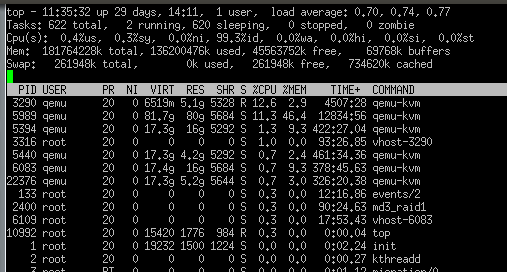

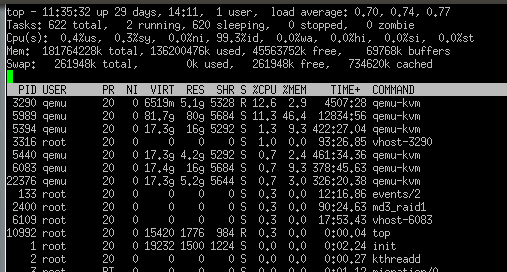

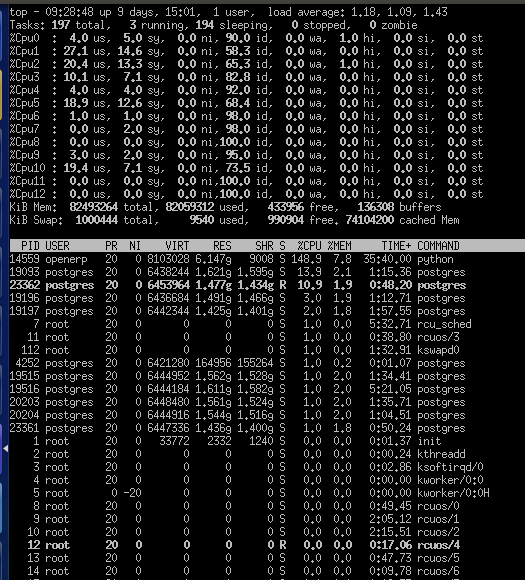

I'd like to add my server's normal load; please see the attachments. Thanks.

On Mon, Oct 10, 2016 at 9:24 AM, Periko Support <pheriko.support@gmail.com> wrote:


server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 09:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 09:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 09:00:01 PDT LOG: archiver process (PID 989) exited with exit code 1

2016-09-12 09:00:01 PDT LOG: all server processes terminated; reinitializing

2016-09-12 09:00:03 PDT LOG: database system was interrupted; last

known up at 2016-09-12 08:59:27 PDT

2016-09-12 09:00:07 PDT LOG: database system was not properly shut

down; automatic recovery in progress

2016-09-12 09:00:07 PDT LOG: redo starts at 29A/AF000028

2016-09-12 09:00:07 PDT LOG: record with zero length at 29A/BC001958

2016-09-12 09:00:07 PDT LOG: redo done at 29A/BC001928

2016-09-12 09:00:07 PDT LOG: last completed transaction was at log

time 2016-09-12 09:00:01.768271-07

2016-09-12 09:00:08 PDT LOG: MultiXact member wraparound protections

are now enabled

2016-09-12 09:00:08 PDT LOG: database system is ready to accept connections

2016-09-12 09:00:08 PDT LOG: autovacuum launcher started

2016-09-12 09:00:15 PDT LOG: connection received: host=127.0.0.1 port=45508

Later, another one:

2016-09-12 09:51:32 PDT ERROR: duplicate key value violates unique

constraint "upc_scan_plant_uniq"

2016-09-12 09:51:32 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6)

already exists.

2016-09-12 09:51:32 PDT STATEMENT: insert into "upc_scan"

(id,"plant_id","state","name",create_uid,create_date,write_uid,write_date)

values (1438,6,'draft','2016-09-12',87,(now() at time zone

'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 09:51:40 PDT ERROR: duplicate key value violates unique

constraint "upc_scan_plant_uniq"

2016-09-12 09:51:40 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6)

already exists.

2016-09-12 09:51:40 PDT STATEMENT: insert into "upc_scan"

(id,"plant_id","state","name",create_uid,create_date,write_uid,write_date)

values (1439,6,'draft','2016-09-12',87,(now() at time zone

'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 09:51:43 PDT ERROR: duplicate key value violates unique

constraint "upc_scan_plant_uniq"

2016-09-12 09:51:43 PDT DETAIL: Key (name, plant_id)=(2016-09-12, 6)

already exists.

2016-09-12 09:51:43 PDT STATEMENT: insert into "upc_scan"

(id,"plant_id","state","name",create_uid,create_date,write_uid,write_date)

values (1440,6,'draft','2016-09-12',87,(now() at time zone

'UTC'),87,(now() at time zone 'UTC'))

2016-09-12 10:00:01 PDT LOG: connection received: host=::1 port=43667

2016-09-12 10:00:01 PDT LOG: connection authorized: user=openerp

database=template1

2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was

terminated by signal 9: Killed

2016-09-12 10:00:01 PDT DETAIL: Failed process was running: select

pp.default_code,pc.product_code,pp.name_template,pc.product_name,rp.name

from product_product pp inner join product_customer_code pc on

pc.product_id=pp.id

2016-09-12 10:00:01 PDT LOG: terminating any other active server processes

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT LOG: archiver process (PID 29336) exited with

exit code 1

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 10:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 10:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 10:00:01 PDT LOG: all server processes terminated; reinitializing

2016-09-12 10:00:02 PDT LOG: database system was interrupted; last

known up at 2016-09-12 09:58:45 PDT

2016-09-12 10:00:06 PDT LOG: database system was not properly shut

down; automatic recovery in progress

2016-09-12 10:00:06 PDT LOG: redo starts at 29C/DC000028

2016-09-12 10:00:06 PDT LOG: unexpected pageaddr 29B/ED01A000 in log

segment 000000010000029C000000F2, offset 106496

2016-09-12 10:00:06 PDT LOG: redo done at 29C/F2018D90

2016-09-12 10:00:06 PDT LOG: last completed transaction was at log

time 2016-09-12 10:00:00.558978-07

2016-09-12 10:00:07 PDT LOG: MultiXact member wraparound protections

are now enabled

2016-09-12 10:00:07 PDT LOG: database system is ready to accept connections

2016-09-12 10:00:07 PDT LOG: autovacuum launcher started

2016-09-12 10:00:11 PDT LOG: connection received: host=127.0.0.1 port=45639

Another one:

2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was

terminated by signal 9: Killed

2016-09-12 15:00:01 PDT DETAIL: Failed process was running: SELECT

"name", "model", "description", "month" FROM "etiquetas_temp"

2016-09-12 15:00:01 PDT LOG: terminating any other active server processes

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT LOG: archiver process (PID 3254) exited with

exit code 1

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:01 PDT WARNING: terminating connection because of

crash of another server process

2016-09-12 15:00:01 PDT DETAIL: The postmaster has commanded this

server process to roll back the current transaction and exit, because

another server process exited abnormally and possibly corrupted shared

memory.

2016-09-12 15:00:01 PDT HINT: In a moment you should be able to

reconnect to the database and repeat your command.

2016-09-12 15:00:02 PDT LOG: all server processes terminated; reinitializing

2016-09-12 15:00:02 PDT LOG: database system was interrupted; last

known up at 2016-09-12 14:59:55 PDT

2016-09-12 15:00:06 PDT LOG: database system was not properly shut

down; automatic recovery in progress

2016-09-12 15:00:07 PDT LOG: redo starts at 2A8/69000028

2016-09-12 15:00:07 PDT LOG: record with zero length at 2A8/7201B4F8

2016-09-12 15:00:07 PDT LOG: redo done at 2A8/7201B4C8

2016-09-12 15:00:07 PDT LOG: last completed transaction was at log

time 2016-09-12 15:00:01.664762-07

2016-09-12 15:00:08 PDT LOG: MultiXact member wraparound protections

are now enabled

2016-09-12 15:00:08 PDT LOG: database system is ready to accept connections

2016-09-12 15:00:08 PDT LOG: autovacuum launcher started
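The three SIGKILL entries above can be lined up mechanically. The sketch below is illustrative only. It assumes the real log file holds each entry on one line with the `YYYY-MM-DD HH:MM:SS TZ` prefix seen here (the mailing-list copy wraps them), and the function names are hypothetical. It counts kills by minute and second within the hour; a single dominant bucket would suggest a scheduled job rather than random memory pressure.

```python
import re
from collections import Counter

# Assumes one log entry per line with a "YYYY-MM-DD HH:MM:SS TZ" prefix.
KILL_RE = re.compile(
    r"^(\d{4}-\d{2}-\d{2}) (\d{2}):(\d{2}):(\d{2}) \S+ LOG:\s+"
    r"server process \(PID (\d+)\) was terminated by signal 9"
)


def sigkill_events(lines):
    """Return (date, hour, minute, second, pid) for each backend killed by signal 9."""
    events = []
    for line in lines:
        m = KILL_RE.match(line)
        if m:
            date, hh, mm, ss, pid = m.groups()
            events.append((date, int(hh), int(mm), int(ss), int(pid)))
    return events


def kills_by_minute_second(events):
    """Count kills by (minute, second) within the hour."""
    return Counter((mm, ss) for _date, _hh, mm, ss, _pid in events)


# The three entries from this thread, unwrapped, plus one unrelated line.
log = [
    "2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was terminated by signal 9: Killed",
    "2016-09-12 09:51:32 PDT ERROR: duplicate key value violates unique constraint",
    "2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was terminated by signal 9: Killed",
    "2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was terminated by signal 9: Killed",
]
events = sigkill_events(log)
print(kills_by_minute_second(events))  # Counter({(0, 1): 3})
```

All three kills land at 00 minutes, 01 second past the hour.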

From what I can see, it is not caused by server load; maximum idle is 79%.

    $ free
                 total       used       free     shared    buffers     cached
    Mem:      82493268   82027060     466208    3157228     136084   77526460
    -/+ buffers/cache:    4364516   78128752
    Swap:      1000444       9540     990904
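For reference, the `-/+ buffers/cache` row in this older `free` output is plain arithmetic on the `Mem:` row: buffers and page cache are subtracted from `used` and added to `free`. A small sketch (the function name is hypothetical) that reproduces the row from the numbers above:

```python
def cache_adjusted_kib(used, free, buffers, cached):
    """Return the (used, free) pair of the "-/+ buffers/cache" row:
    memory held by processes, and memory available if cache were reclaimed."""
    return used - buffers - cached, free + buffers + cached


# Mem: row from the free output above, in KiB.
app_used, app_free = cache_adjusted_kib(
    used=82027060, free=466208, buffers=136084, cached=77526460
)
print(app_used, app_free)                       # 4364516 78128752
print(round(app_used / 1024 / 1024, 2), "GiB")  # 4.16 GiB
```

So when this snapshot was taken, processes held roughly 4.2 GiB and most of the rest was reclaimable cache. A single snapshot cannot rule out a short spike at the moment of a kill, though.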

Swap usage is low; I will add more memory next week.

In your experience, does this look like a hardware issue?

Thanks for your time!!!

Periko Support <pheriko.support@gmail.com> writes:

> I got some issues with my DB under ubuntu 14.x.

> PSQL 9.3, odoo 7.x.

> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was

> terminated by signal 9: Killed

Usually, SIGKILLs coming out of nowhere indicate that the Linux OOM killer

has decided to target some database process. You need to do something to

reduce memory pressure and/or disable memory overcommit so that that

doesn't happen.

regards, tom lane
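One way to test this diagnosis is to check whether the kernel actually logged an OOM kill for the PIDs PostgreSQL reports (23958, 30766, 22030). The OOM killer writes to the kernel log (`dmesg`, and on Ubuntu usually `/var/log/kern.log`). The sketch below assumes message wording like `Out of memory: Kill process N (name)` and `Killed process N (name)`, which varies across kernel versions. The sample lines and PIDs are made up for illustration, and the function names are hypothetical. A signal 9 with no matching OOM entry would point at something else sending the signal.

```python
import re

# Assumed kernel wording (varies by version), e.g.
#   "Out of memory: Kill process 11111 (postgres) score 900 or sacrifice child"
#   "Killed process 11111 (postgres) total-vm:..."
OOM_RE = re.compile(r"Kill(?:ed)? process (\d+) \(([^)]+)\)")


def oom_victims(kernel_lines):
    """Return the set of (pid, command) pairs reported by the OOM killer."""
    victims = set()
    for line in kernel_lines:
        m = OOM_RE.search(line)
        if m:
            victims.add((int(m.group(1)), m.group(2)))
    return victims


def kills_without_oom_entry(postgres_pids, kernel_lines):
    """PIDs PostgreSQL saw die from signal 9 that the OOM killer never claimed."""
    oom_pids = {pid for pid, _cmd in oom_victims(kernel_lines)}
    return sorted(set(postgres_pids) - oom_pids)


# Hypothetical kernel log lines and PIDs, for illustration only.
kernel_log = [
    "[1234.5] Out of memory: Kill process 11111 (postgres) score 900 or sacrifice child",
    "[1234.6] Killed process 11111 (postgres) total-vm:6400000kB, anon-rss:10240kB",
    "[1300.0] eth0: link up",
]
print(kills_without_oom_entry([11111, 22222], kernel_log))  # [22222]
```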

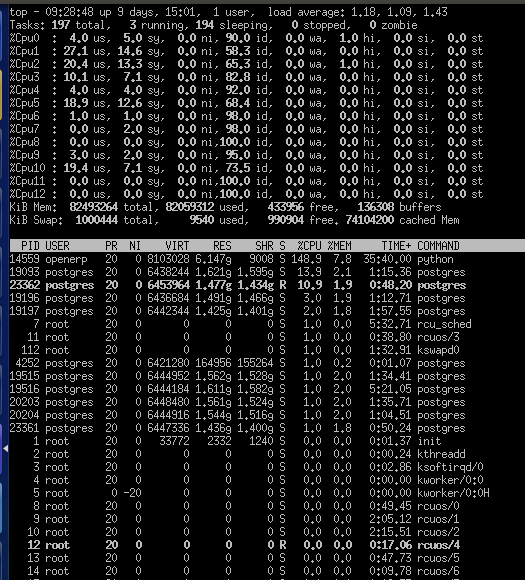

My current server has 82GB memory.

Default settings, but the only parameter I have changed is shared_buffers, from 128MB to 6GB.

This server is dedicated to postgresql+odoo.

Is that the only parameter I can tune to reduce my memory utilization?

Thanks Tom.

On Mon, Oct 10, 2016 at 10:03 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote:
> Periko Support <pheriko.support@gmail.com> writes:
>> I got some issues with my DB under ubuntu 14.x.
>> PSQL 9.3, odoo 7.x.
>
>> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was
>> terminated by signal 9: Killed
>
> Usually, SIGKILLs coming out of nowhere indicate that the Linux OOM killer
> has decided to target some database process. You need to do something to
> reduce memory pressure and/or disable memory overcommit so that that
> doesn't happen.
>
> regards, tom lane
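shared_buffers is only one term in PostgreSQL's memory use. As a crude upper-bound heuristic (not how PostgreSQL actually accounts for memory), each backend may use up to work_mem per sort or hash step, and each autovacuum worker may use up to maintenance_work_mem. The sketch assumes what I believe are the 9.3 defaults (max_connections 100, work_mem 1MB, maintenance_work_mem 16MB, autovacuum_max_workers 3). Confirm the real values with `SHOW` on the server. The function name is hypothetical, and per-process overhead is ignored.

```python
def rough_peak_memory_mb(shared_buffers_mb, max_connections, work_mem_mb,
                         maintenance_work_mem_mb, autovacuum_max_workers,
                         work_mem_allocs_per_query=1):
    """Crude upper-bound heuristic in MB: shared_buffers, plus work_mem per
    sort/hash step for every connection, plus maintenance_work_mem for every
    autovacuum worker. Per-process overhead is not counted."""
    per_backend = work_mem_mb * work_mem_allocs_per_query
    return (shared_buffers_mb
            + max_connections * per_backend
            + autovacuum_max_workers * maintenance_work_mem_mb)


# shared_buffers = 6GB as posted; the other values are assumed 9.3 defaults.
print(rough_peak_memory_mb(6 * 1024, 100, 1, 16, 3))  # 6292
print(rough_peak_memory_mb(6 * 1024, 100, 1, 16, 3,
                           work_mem_allocs_per_query=4))  # 6592
```

Even when several work_mem allocations per query are allowed, these settings give an estimate far below the machine's RAM.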

Or should I add more memory to my server?

On Mon, Oct 10, 2016 at 11:05 AM, Periko Support <pheriko.support@gmail.com> wrote:
> My current server has 82GB memory.
>
> Default settings but the only parameter I had chance is shared_buffers
> from 128MB to 6G.
>
> This server is dedicated to postgresql+odoo.
>
> Is the only parameter I can thing can reduce my memory utilization?
>
> Thanks Tom.
>
>
> On Mon, Oct 10, 2016 at 10:03 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote:
>> Periko Support <pheriko.support@gmail.com> writes:
>>> I got some issues with my DB under ubuntu 14.x.
>>> PSQL 9.3, odoo 7.x.
>>
>>> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was
>>> terminated by signal 9: Killed
>>
>> Usually, SIGKILLs coming out of nowhere indicate that the Linux OOM killer
>> has decided to target some database process. You need to do something to
>> reduce memory pressure and/or disable memory overcommit so that that
>> doesn't happen.
>>
>> regards, tom lane

On 10/10/2016 18:24, Periko Support wrote:

> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was
> terminated by signal 9: Killed
> 2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was
> terminated by signal 9: Killed
> 2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was
> terminated by signal 9: Killed

These datetimes could be suspect. Every crash (kill) happens at 00 minutes and 01 seconds past the hour, which makes me ask: isn't there something like cron running a job that interferes with postgres?

Cheers,

Moreno.
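If a scheduled job does need to end PostgreSQL sessions, it is safer to ask the server to do it than to send signal 9 to backend processes. When a backend dies from SIGKILL, the postmaster assumes shared memory may be corrupted, terminates every other session, and runs crash recovery. That matches the WARNING/DETAIL/HINT cascade and the `automatic recovery in progress` lines in the logs above. Below is a minimal sketch. It assumes PostgreSQL 9.2+ (for the `state` and `state_change` columns of `pg_stat_activity`) and any DB-API connection that uses `%s` placeholders, such as psycopg2. The function and constant names are hypothetical.

```python
# Hypothetical helper. Assumes PostgreSQL 9.2+ and a DB-API 2.0 connection
# using %s placeholders (psycopg2 does).
IDLE_SESSIONS_SQL = (
    "SELECT pid, pg_terminate_backend(pid) "
    "FROM pg_stat_activity "
    "WHERE usename = %s "
    "AND state = 'idle' "
    "AND state_change < current_timestamp - %s * INTERVAL '1 minute' "
    "AND pid <> pg_backend_pid()"
)


def terminate_idle_sessions(conn, user, idle_minutes=15):
    """End `user`'s sessions that have been idle for more than `idle_minutes`.

    pg_terminate_backend() sends SIGTERM, so the backend exits cleanly and the
    postmaster does not restart the other sessions. Returns the PIDs for which
    termination was signalled successfully.
    """
    cur = conn.cursor()
    try:
        cur.execute(IDLE_SESSIONS_SQL, (user, idle_minutes))
        rows = cur.fetchall()
    finally:
        cur.close()
    conn.commit()
    return [pid for pid, ok in rows if ok]
```

For example, call `terminate_idle_sessions(psycopg2.connect(dsn), "openerp")`, where `dsn` is your own connection string. Filtering on `state = 'idle'` leaves sessions that are still running a query alone.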

On Mon, Oct 10, 2016 at 2:14 PM, Moreno Andreo <moreno.andreo@evolu-s.it> wrote:

> On 10/10/2016 18:24, Periko Support wrote:
>> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was
>> terminated by signal 9: Killed
>> 2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was
>> terminated by signal 9: Killed
>> 2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was
>> terminated by signal 9: Killed
>
> These datetimes could be suspect. Every crash (kill) is done at "00"minutes and "01" minutes, that makes me ask "Isn't there something like cron running something that interfere with postgres?"
>
> Cheers,
> Moreno.
>
> --
> Sent via pgsql-general mailing list (pgsql-general@postgresql.org)
> To make changes to your subscription:
> http://www.postgresql.org/mailpref/pgsql-general

The general philosophy is to start by setting shared_buffers to 1/4 of system memory, so shared_buffers should be 20480 MB.

--

Melvin Davidson

I reserve the right to fantasize. Whether or not you

wish to share my fantasy is entirely up to you.
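The arithmetic behind the 20480 MB figure: it is a quarter of 80 GiB. Using the exact total from the `free` output earlier in the thread gives a slightly smaller number. The PostgreSQL documentation presents 25% of RAM as a reasonable starting value for shared_buffers on a dedicated server, not as a hard rule. Here is a sketch with a hypothetical helper name:

```python
def quarter_of_ram_mb(total_kib):
    """25% of total RAM, in MB (rounded down), as a shared_buffers starting point."""
    return total_kib // 4 // 1024


print(quarter_of_ram_mb(80 * 1024 * 1024))  # 20480 (80 GiB)
print(quarter_of_ram_mb(82493268))          # 20139 (total from the free output)
```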

Periko Support <pheriko.support@gmail.com> writes:

> My current server has 82GB memory.

You said this was running inside a VM, though --- maybe the VM is

resource-constrained?

In any case, turning off memory overcommit would be a good idea if

you're not concerned about running anything but Postgres.

regards, tom lane
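On Linux, "turning off memory overcommit" refers to the `vm.overcommit_memory` sysctl, and it has to be set in the Ubuntu guest where Postgres runs, since that kernel's OOM killer is the one that picks the process. The sketch below only reads and explains the current mode; under mode 2 the commit limit also depends on `vm.overcommit_ratio`, which is worth checking against the kernel and PostgreSQL documentation before changing anything. A setting made with `sysctl -w` lasts until reboot unless it is also added to `/etc/sysctl.conf`.

```python
import os

# Meaning of the vm.overcommit_memory values as documented for the Linux kernel.
MODES = {
    0: "heuristic overcommit (kernel default)",
    1: "always overcommit",
    2: "strict accounting: no overcommit beyond the commit limit",
}


def overcommit_mode(path="/proc/sys/vm/overcommit_memory"):
    """Read the current vm.overcommit_memory value (0, 1 or 2)."""
    with open(path) as f:
        return int(f.read().strip())


def describe(mode):
    """Human-readable summary, with the command to disable overcommit if it is on."""
    advice = "" if mode == 2 else "; to disable overcommit: sysctl -w vm.overcommit_memory=2"
    return "vm.overcommit_memory=%d (%s)%s" % (mode, MODES.get(mode, "unknown"), advice)


if __name__ == "__main__" and os.path.exists("/proc/sys/vm/overcommit_memory"):
    print(describe(overcommit_mode()))
```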

On 10/10/2016 11:14 AM, Moreno Andreo wrote:
>
> On 10/10/2016 18:24, Periko Support wrote:
>> 2016-09-12 09:00:01 PDT LOG: server process (PID 23958) was
>> terminated by signal 9: Killed
>
>> 2016-09-12 10:00:01 PDT LOG: server process (PID 30766) was
>> terminated by signal 9: Killed
>
>> 2016-09-12 15:00:01 PDT LOG: server process (PID 22030) was
>> terminated by signal 9: Killed
>>
>>
> These datetimes could be suspect. Every crash (kill) happens at minute "00", second "01", which makes me ask: "Isn't there something like cron running something that interferes with postgres?"

While we are on the subject: the datetimes are almost a month old. Does that mean this problem was just noticed, or are the datetimes wrong?

>
> Cheers,
> Moreno.

--
Adrian Klaver
adrian.klaver@aklaver.com

Andreo, you made a good observation here.

I have a script that runs every hour. Why?

Odoo has some issues with IDLE connections: if we don't check our current PostgreSQL connections, after a while the system uses up all of them, many of them IDLE, and stops answering users. So we created a script that runs every hour; this is it:

    #!/usr/bin/env python3
    """Script used to kill database connections that have been idle for the last 15 minutes."""
    import os
    import signal
    import sys
    import time

    import psycopg2  # third-party PostgreSQL driver


    def get_conn():
        conn_string = "host='localhost' dbname='template1' user='openerp' password='s$p_p@r70'"
        try:
            # Get a connection; if one cannot be made, an exception is raised here.
            conn = psycopg2.connect(conn_string)
            return conn.cursor()
        except psycopg2.Error as exc:
            sys.exit("Database connection failed!\n ->%s" % exc)


    def get_pid():
        # Note: this filters on query_start only, not on pg_stat_activity.state,
        # so it matches any 'openerp' backend whose latest query started more than
        # 15 minutes ago, including backends that are still running that query.
        sql = ("select pid, datname, usename from pg_stat_activity "
               "where usename = 'openerp' "
               "AND query_start < current_timestamp - INTERVAL '15' MINUTE;")
        cursor = get_conn()
        cursor.execute(sql)
        idle_record = cursor.fetchall()
        print("---------------------------------------------------------------------------------------------------")
        print("Date:", time.strftime("%d/%m/%Y"))
        print("idle record list:", idle_record)
        print("---------------------------------------------------------------------------------------------------")
        for row in idle_record:
            try:
                # Note: the postmaster treats a SIGKILLed backend as a crash: it
                # terminates all other sessions and runs automatic recovery, which is
                # consistent with the "terminated by signal 9" lines in the log.
                os.kill(int(row[0]), signal.SIGKILL)
            except OSError:
                continue


    get_pid()
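A gentler variant of the hourly cleanup asks the server to end sessions with `pg_terminate_backend()`, which sends SIGTERM to that one backend instead of forcing a crash-recovery cycle, and it selects only sessions whose `state` is `'idle'` (so a long-running SELECT is left alone). This is a hypothetical sketch, not the thread's script: it assumes PostgreSQL 9.2 or later (for `state`/`state_change`), that the caller is a superuser or the same role as the sessions it terminates, and placeholder connection settings with the password taken from `~/.pgpass`.

```python
"""Hypothetical replacement for the hourly cleanup script: end idle 'openerp'
sessions with pg_terminate_backend() instead of SIGKILL."""

# Matches only sessions that are really idle (state = 'idle') and have been in
# that state longer than the threshold; never touches the script's own session.
IDLE_SQL = """
    SELECT pid, pg_terminate_backend(pid)
    FROM pg_stat_activity
    WHERE usename = %(user)s
      AND state = 'idle'
      AND state_change < current_timestamp - %(minutes)s * INTERVAL '1 minute'
      AND pid <> pg_backend_pid()
"""


def terminate_idle(conn, user="openerp", minutes=15):
    """Terminate idle sessions; returns a list of (pid, terminated_ok) tuples."""
    with conn.cursor() as cur:
        cur.execute(IDLE_SQL, {"user": user, "minutes": minutes})
        rows = cur.fetchall()
    conn.commit()
    return rows


def main():
    import psycopg2  # third-party driver; imported lazily so the module loads without it

    # Placeholder connection settings (password assumed to come from ~/.pgpass).
    conn = psycopg2.connect(host="localhost", dbname="template1", user="openerp")
    try:
        for pid, ok in terminate_idle(conn):
            print(pid, "terminated" if ok else "could not terminate")
    finally:
        conn.close()
```

From cron, the script would call `main()`. The threshold stays at the original 15 minutes but is measured from when the session went idle (`state_change`) rather than from when its last query started.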


I will change the schedule so it does not run every hour, and see what happens.

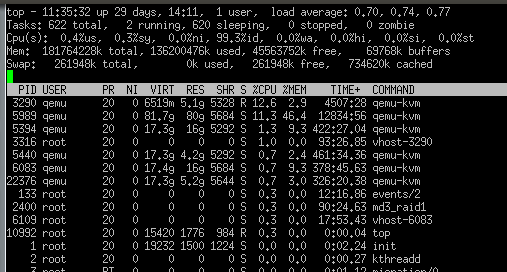

It's an easy change. About Tom's point: our current KVM server looks fine to me; see the picture:

    free
                 total       used       free     shared    buffers     cached
    Mem:     181764228  136200312   45563916        468      69904     734652
    -/+ buffers/cache:  135395756   46368472
    Swap:       261948          0     261948
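The old procps `free` prints KiB by default, so the raw numbers are easy to misread. Converting them gives roughly 173 GiB total and 130 GiB used, more than the 82 GB quoted for the server. That suggests (an assumption the thread does not confirm) that this output was taken on the KVM host rather than inside the PostgreSQL guest, whose own memory is what the guest's OOM killer acts on. A minimal parser for this output format:

```python
# Output of `free` quoted in the thread (procps reports KiB by default).
SAMPLE = """
             total       used       free     shared    buffers     cached
Mem:     181764228  136200312   45563916        468      69904     734652
-/+ buffers/cache:  135395756   46368472
Swap:       261948          0     261948
"""


def parse_free(text):
    """Map the 'Mem' and 'Swap' rows of old-style procps `free` output to {column: KiB}."""
    header, rows = None, {}
    for line in text.strip().splitlines():
        parts = line.split()
        if parts and parts[0] == "total":
            header = parts
        elif header and parts and parts[0] in ("Mem:", "Swap:"):
            rows[parts[0].rstrip(":")] = dict(zip(header, (int(v) for v in parts[1:])))
    return rows


def gib(kib):
    """Convert KiB to GiB."""
    return kib / (1024 * 1024)


for name, cols in parse_free(SAMPLE).items():
    print(name, {k: round(gib(v), 1) for k, v in cols.items()})
```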

I have other VMs, but they are on a different RAID setup.

Tom, you recommended reducing memory pressure: do you mean lowering values like shared_buffers, or adding more memory?

Melvin, I tried that value before but my server struggled; I will add more memory in a few weeks.

Any comments will be appreciated. Thanks.
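Periko's question (lower shared_buffers, or add RAM?) can be framed with a back-of-the-envelope estimate: shared memory plus roughly one `work_mem` allocation per connection. This is a hypothetical sketch, not a bound: a single query can use several sort or hash allocations of `work_mem` each, and per-backend overhead is ignored. The inputs below are illustrative, not values taken from Periko's configuration.

```python
def rough_memory_budget_mb(shared_buffers_mb, max_connections, work_mem_mb):
    """Back-of-the-envelope PostgreSQL memory estimate in MB.

    Assumes about one work_mem allocation per connection. This is NOT an
    upper bound: one query may use several work_mem-sized allocations, and
    per-backend overhead is not counted.
    """
    return shared_buffers_mb + max_connections * work_mem_mb


# Hypothetical inputs: shared_buffers = 6GB, 100 connections, work_mem = 1MB.
print(rough_memory_budget_mb(6144, 100, 1))
```

Lowering any of the three inputs reduces the estimate; with many Odoo connections, `max_connections * work_mem` can outweigh a change to shared_buffers.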

On Mon, Oct 10, 2016 at 11:22 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote:

