RE: DB grow overtime with autovacuum (postgres 9.6.9) - Mailing list pgsql-admin

| From | Graham Myers |

|---|---|

| Subject | RE: DB grow overtime with autovacuum (postgres 9.6.9) |

| Date | |

| Msg-id | b261305e78fb6cb5e060b887a173af6b@mail.gmail.com Whole thread Raw |

| In response to | DB grow overtime with autovacuum (postgres 9.6.9) (Avihai Shoham <avihai.shoham@gmail.com>) |

| Responses |

Re: DB grow overtime with autovacuum (postgres 9.6.9)

|

| List | pgsql-admin |

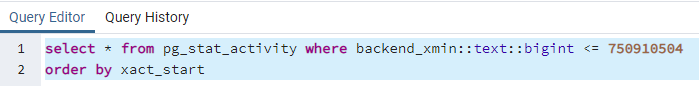

This is what I use

select * from pg_stat_activity where backend_xmin::text::bigint <= 422050991

order by xact_start

where the number (422050991) is the number reported by the vacuum analyze as stopping

first I find the ones that should have been picked up by the auto vauum

Then analyze the table in question

This then should give you the xmin_minimum (shown as oldest xmin)

This vacuum actually worked so removed all the dead rows when ran manually. However we can use that xid in the query above

This doesn’t show anything in my current db as the manual vacuum worked, but this combination should show you what auto vacuum isn’t picking up or is blocking auto vacuum.

My db is pretty clean at the moment so I am not getting any decent examples to show you

|

From: Avihai Shoham <avihai.shoham@gmail.com>

Sent: 14 March 2022 12:50

To: Graham Myers <gmyers@retailexpress.com>

Subject: Re: DB grow overtime with autovacuum (postgres 9.6.9)

Hi Graham,

The autovacuum works . i see the processes/workers

which query looks in pg_stat_activity for any xmin_minimum less than that value.?

Avihai

On Sun, Mar 13, 2022 at 3:57 PM Graham Myers <gmyers@retailexpress.com> wrote:

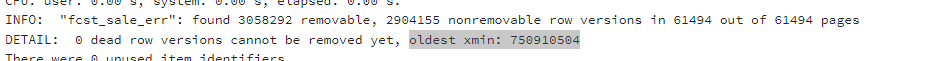

the analyze report for that table shows that it successfully removed dead tuples " DETAIL: 0 dead row versions cannot be removed yet.", which is why you are not getting told the xmin horizon - there isnt one for that table.

So either autovacuum isnt running properly (or at all) or there is another table that is blocking things up.

the last pgsql you post shows the oldest backend xmin - you need to look in pg_stat_activity for any xmin_minimum less than that value. at home today so cant post the sql I use - will do tomorrow when I get into work

Graham Myers

On Sun, 13 Mar 2022 at 13:42, Avihai Shoham <avihai.shoham@gmail.com> wrote:

Thank you Graham,

i ran vacuum verbose analyze to one of the problematic table , also

SELECT pid, datname, usename, state, backend_xmin

FROM pg_stat_activity

WHERE backend_xmin IS NOT NULL

ORDER BY age(backend_xmin) DESC;

1) i dont find xmin details in "vacuum verbose analyze"

2) i have one "session" return from the above select which is our DB

Any thoughts?

Unfortunately we dont have plan to upgrade postgresql

Thank you for all your support !

Avihai

manager=# vacuum verbose analyze cm_file;

INFO: vacuuming "public.cm_file"

INFO: scanned index "cm_file_pkey" to remove 8626 row versions

DETAIL: CPU 0.05s/0.49u sec elapsed 0.54 sec

INFO: scanned index "cm_file_cur_job_lock_holder_type_multi_idx" to remove 8626 row versions

DETAIL: CPU 0.10s/0.41u sec elapsed 0.52 sec

INFO: scanned index "cm_file_file_name_idx" to remove 8626 row versions

DETAIL: CPU 0.14s/0.54u sec elapsed 0.69 sec

INFO: scanned index "cm_file_format_idx" to remove 8626 row versions

DETAIL: CPU 0.13s/0.42u sec elapsed 0.55 sec

INFO: scanned index "cm_file_fs_id_bucket_id_multi_idx" to remove 8626 row versions

DETAIL: CPU 0.14s/0.48u sec elapsed 0.63 sec

INFO: scanned index "cm_file_fs_id_index_multi_idx" to remove 8626 row versions

DETAIL: CPU 0.06s/0.51u sec elapsed 0.57 sec

INFO: scanned index "cm_file_id_idx" to remove 8626 row versions

DETAIL: CPU 0.06s/0.50u sec elapsed 0.57 sec

INFO: scanned index "cm_file_state_idx" to remove 8626 row versions

DETAIL: CPU 0.11s/0.38u sec elapsed 0.50 sec

INFO: scanned index "cm_file_type_idx" to remove 8626 row versions

DETAIL: CPU 0.10s/0.39u sec elapsed 0.49 sec

INFO: "cm_file": removed 8626 row versions in 6054 pages

DETAIL: CPU 0.00s/0.04u sec elapsed 0.04 sec

INFO: index "cm_file_pkey" now contains 3689623 row versions in 31036 pages

DETAIL: 8275 index row versions were removed.

409 index pages have been deleted, 405 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_cur_job_lock_holder_type_multi_idx" now contains 3689624 row versions in 56319 pages

DETAIL: 8626 index row versions were removed.

677 index pages have been deleted, 670 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_file_name_idx" now contains 3689639 row versions in 57169 pages

DETAIL: 7709 index row versions were removed.

1261 index pages have been deleted, 1245 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_format_idx" now contains 3689640 row versions in 67308 pages

DETAIL: 8626 index row versions were removed.

370 index pages have been deleted, 366 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_fs_id_bucket_id_multi_idx" now contains 3689644 row versions in 85170 pages

DETAIL: 8626 index row versions were removed.

510 index pages have been deleted, 503 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_fs_id_index_multi_idx" now contains 3689644 row versions in 43025 pages

DETAIL: 8626 index row versions were removed.

1271 index pages have been deleted, 1257 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_id_idx" now contains 3689646 row versions in 40192 pages

DETAIL: 8561 index row versions were removed.

653 index pages have been deleted, 647 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_state_idx" now contains 3689647 row versions in 57357 pages

DETAIL: 8626 index row versions were removed.

640 index pages have been deleted, 630 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: index "cm_file_type_idx" now contains 3689649 row versions in 56323 pages

DETAIL: 8626 index row versions were removed.

683 index pages have been deleted, 673 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: "cm_file": found 34184 removable, 2378138 nonremovable row versions in 130243 out of 194141 pages

DETAIL: 585 dead row versions cannot be removed yet.

There were 1595823 unused item pointers.

Skipped 0 pages due to buffer pins.

0 pages are entirely empty.

CPU 1.24s/5.08u sec elapsed 6.35 sec.

INFO: vacuuming "pg_toast.pg_toast_17260"

INFO: index "pg_toast_17260_index" now contains 0 row versions in 1 pages

DETAIL: 0 index row versions were removed.

0 index pages have been deleted, 0 are currently reusable.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: "pg_toast_17260": found 0 removable, 0 nonremovable row versions in 0 out of 0 pages

DETAIL: 0 dead row versions cannot be removed yet.

There were 0 unused item pointers.

Skipped 0 pages due to buffer pins.

0 pages are entirely empty.

CPU 0.00s/0.00u sec elapsed 0.00 sec.

INFO: analyzing "public.cm_file"

INFO: "cm_file": scanned 30000 of 194141 pages, containing 568983 live rows and 147 dead rows; 30000 rows in sample, 3682098 estimated total rows

VACUUM

manager=# SELECT pid, datname, usename, state, backend_xmin

manager-# FROM pg_stat_activity

manager-# WHERE backend_xmin IS NOT NULL

manager-# ORDER BY age(backend_xmin) DESC;

pid | datname | usename | state | backend_xmin

-------+---------+---------+--------+--------------

33333 | manager | fabrix | active | 698159508

(1 row)

On Tue, Mar 1, 2022 at 3:23 PM Graham Myers <gmyers@retailexpress.com> wrote:

You need to find why the autovacuum is not completing. Use the following to find the tables that have not been updated - its more than likely to be the xmin horizon issue due to long running uncommitted transactions.

WITH constants

AS (SELECT

current_setting('autovacuum_vacuum_scale_factor')::float8 AS autovacuum_vacuum_scale_factor,

current_setting('autovacuum_vacuum_threshold')::float8 AS autovacuum_vacuum_threshold,

1000000 AS autovacuum_vacuum_threshold_trigger

)

SELECT

t.schemaname AS "SchemaName",

t.relname AS "TableName",

TO_CHAR(t.n_tup_ins, 'fm999G999G999G990') AS "Inserts",

TO_CHAR(t.n_tup_upd, 'fm999G999G999G990') AS "Updates",

TO_CHAR(t.n_tup_del, 'fm999G999G999G990') AS "Deletes",

TO_CHAR(c.reltuples, 'fm999G999G999G990') AS "AnalyzedTuples",

CASE WHEN c.reltuples < t.n_live_tup THEN '<' WHEN c.reltuples > t.n_live_tup THEN '>' ELSE '' END

AS "Stale",

TO_CHAR(t.n_live_tup,'fm999G999G999G990') AS "LiveTuples",

TO_CHAR(t.n_dead_tup,'fm999G999G999G990') AS "DeadTuples",

TO_CHAR(LEAST(99.999,n_dead_tup::float4/GREATEST(1,n_live_tup)::float4*100),'fm990D00%')

AS "DeadRatio", --Limit to 1000%

TO_CHAR(c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold,'fm999G999G990')

AS "AutoVacuumTrigger",

CASE WHEN t.n_dead_tup > c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold THEN '*' ELSE '' END

AS "ShouldVacuum",

TO_CHAR(COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor)*100,'fm999G990D00%')

AS "CurrentScaleFactor",

CASE WHEN c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold > autovacuum_vacuum_threshold_trigger

THEN TO_CHAR(autovacuum_vacuum_threshold_trigger,'fm999G999G990') --threshold instead of scale factor

ELSE TO_CHAR(COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor)*100,'fm999G990D00%')

END AS "SuggestedScaleFactor",

t.last_analyze,

t.last_autoanalyze,

t.last_vacuum,

t.last_autovacuum,

t.analyze_count AS analyzecount,

t.autoanalyze_count AS autoanalyzecount,

t.vacuum_count AS vacuumcount,

t.autovacuum_count AS autovacuumcount,

pg_size_pretty(pg_total_relation_size(c.oid)) AS totalSize,

pg_size_pretty(pg_indexes_size(c.oid)) AS indexSize,

pg_size_pretty(COALESCE(pg_total_relation_size(c.reltoastrelid),0)) AS ToastSize

FROM pg_stat_user_tables AS t

JOIN pg_class AS c ON c.relname=t.relname

JOIN pg_catalog.pg_namespace AS n ON n.oid = c.relnamespace AND n.nspname=t.schemaname

CROSS JOIN constants

WHERE (t.n_live_tup > 0 AND

t.n_dead_tup > GREATEST(100000,c.reltuples * autovacuum_vacuum_scale_factor + autovacuum_vacuum_threshold) --more than 10k dead tuples or dead tuples exceed threshold

)

-- OR c.reltuples * autovacuum_vacuum_scale_factor + autovacuum_vacuum_threshold > autovacuum_vacuum_threshold_trigger -- the autovacuum threshold is over threshold - might need reducing

ORDER BY 1,2;

If you manually vacuum verbose analyze for one of the tables this reports on, it should then tell you the minimum xmin value (which is the horizon).

If you then look in pg_stat_activity it should tell you the transactions that are preventing the horizon from moving forward

select * from pg_stat_activity where backend_xmin::text::bigint <= <minimum_xmin value here> order by xact_start

eg

select * from pg_stat_activity where backend_xmin::text::bigint <= 422050991 order by xact_start

regards

Captain.Glumbo 😊

Graham Myers

From: Avihai Shoham <avihai.shoham@gmail.com>

Sent: 01 March 2022 12:21

To: pgsql-admin@lists.postgresql.org

Subject: DB grow overtime with autovacuum (postgres 9.6.9)

Hi All,

We use postgres 9.6.9

we set the following autovacuum setting , but still the DB grow overtime

autovacuum = on

work_mem= 50MB

wal_buffers= -1

synchronous_commit=off

log_autovacuum_min_duration = 0

autovacuum_vacuum_scale_factor = 0.1

autovacuum_analyze_scale_factor = 0.05

autovacuum_vacuum_threshold = 25

autovacuum_max_workers= 6

autovacuum_naptime = 10s

autovacuum_vacuum_cost_delay = 10ms

autovacuum_vacuum_cost_limit = -1

autovacuum_freeze_max_age = 1000000000

autovacuum_multixact_freeze_max_age = 400000000

autovacuum_work_mem = -1

autovacuum_analyze_threshold = 10

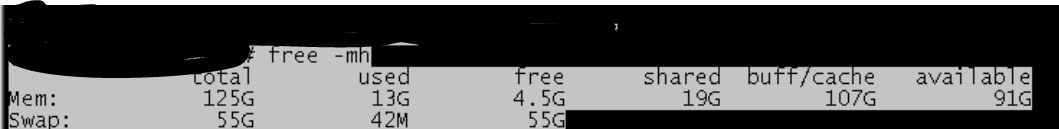

we have

any idea ?

DB size grew from 11G to 25G in 2wks.

I read that we may need to increase the max_fsm_pages setting. not sure if it is needed if we have autovacuum or how to do it if needed?

Thank you all

Avihai

Attachment

pgsql-admin by date: