COPY FROM STDIN BINARY failure - Mailing list pgsql-bugs

| From | Tobey, Paul SOPT 6151 |

|---|---|

| Subject | COPY FROM STDIN BINARY failure |

| Date | |

| Msg-id | IA0PR11MB7935925E44D2A1E814617EF7A9F32@IA0PR11MB7935.namprd11.prod.outlook.com Whole thread Raw |

| List | pgsql-bugs |

Hi,

I’m running "PostgreSQL 16.1, compiled by Visual C++ build 1937, 64-bit" in an AWS EC2 Windows Server 2022 Datacenter, Intel Xeon Platinum 8151@3.4GHz/4/8, 64GB RAM, 2TB disk.

The client software is .NET/Windows based (using Npgsql), running ~40 threads, each using COPY table FROM STDIN (FORMAT BINARY) commands via a separate connection, initializing a table. This all runs fine for about an hour, roughly 9500 COPY operations, before reaching a state where the COPY operations are no longer working (never complete), ending in timeouts (~17min timeout; normal execution time is < 15s). At the failure point 273,558,408 of 313,886,247 total rows have been successfully inserted. The failure point in repeated runs is approximately but not exactly the same. There are no foreign keys in the table and all indices are disabled.

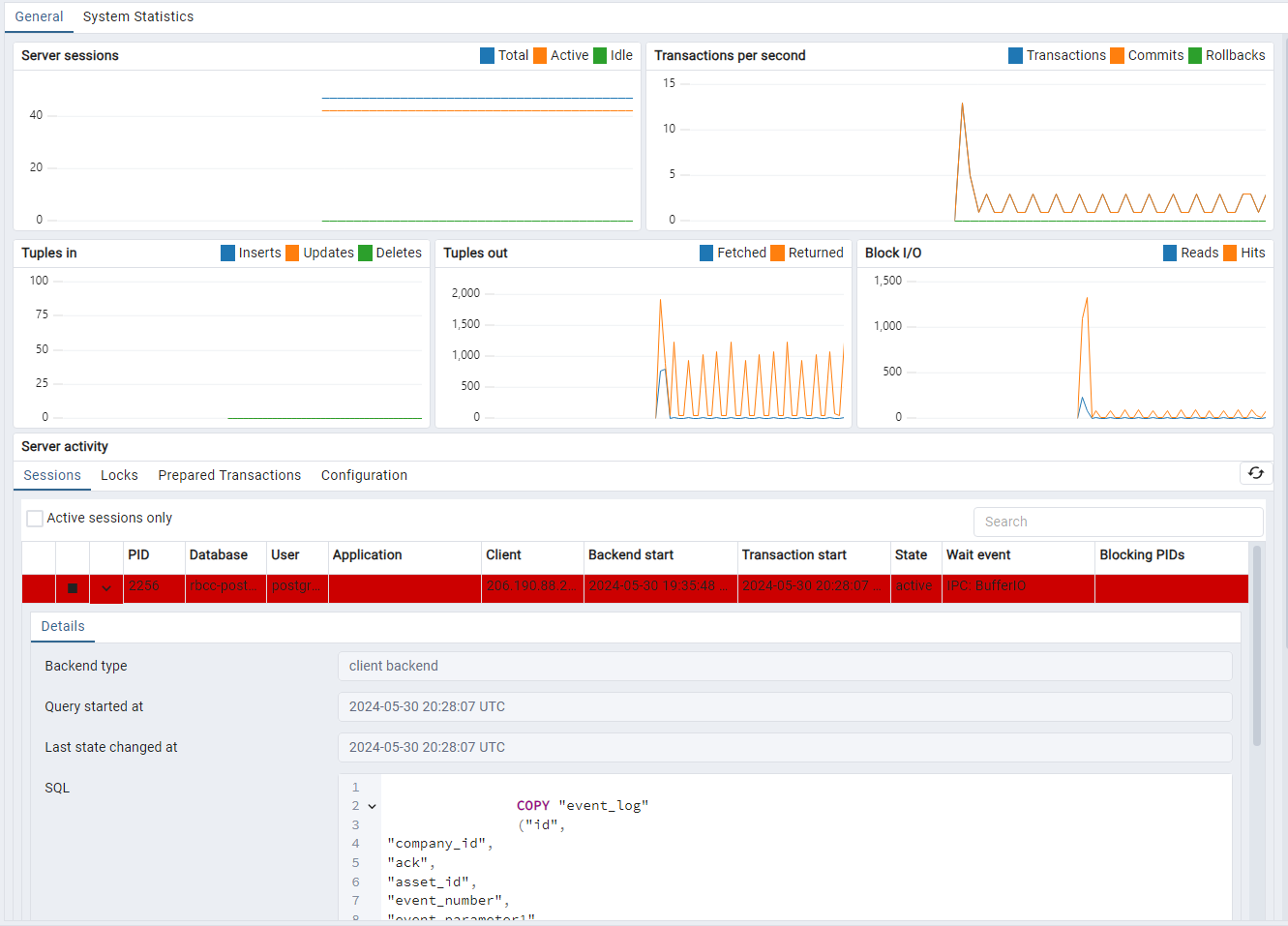

At the failure point, this is the state indicated in pgAdmin 4. There seems to be one IPC:BufferIO for each client with similar content each in the same “waiting” state:

The PostgreSQL log isn’t very revealing, at least to me, but I’ve attached it (LOG). FYI, there are multiple COPY operations starting at,

20:27:30

20:27:35

20:27:37

20:27:42

…

20:28:23

none of which finish successfully before timing out at 20:45:25, et al. The timeout occurs from the NpgsqlBinaryImporter.Complete() method; I think that means when it’s waiting for the data transmission to be completed before sending the end-of-stream/complete marker. For reference, the last 20 successful COPY operations (client side) are shown below:

2024-05-30 13:27:18.478 -07:00 [DBG] thread=18, write completed (id range = 1833675856 - 1833705498), milliseconds=6021

2024-05-30 13:27:18.530 -07:00 [DBG] thread=9, write completed (id range = 1839727448 - 1839757090), milliseconds=3595

2024-05-30 13:27:19.192 -07:00 [DBG] thread=31, write completed (id range = 1834209820 - 1834239462), milliseconds=6921

2024-05-30 13:27:19.282 -07:00 [DBG] thread=24, write completed (id range = 1839905436 - 1839935078), milliseconds=3116

2024-05-30 13:27:20.758 -07:00 [DBG] thread=17, write completed (id range = 1839549460 - 1839579102), milliseconds=4188

2024-05-30 13:27:21.140 -07:00 [DBG] thread=12, write completed (id range = 1837769580 - 1837799222), milliseconds=6123

2024-05-30 13:27:21.391 -07:00 [DBG] thread=25, write completed (id range = 1837235616 - 1837265258), milliseconds=6484

2024-05-30 13:27:21.521 -07:00 [DBG] thread=6, write completed (id range = 1839015496 - 1839045138), milliseconds=5115

2024-05-30 13:27:23.100 -07:00 [DBG] thread=20, write completed (id range = 1837057628 - 1837087270), milliseconds=6161

2024-05-30 13:27:23.100 -07:00 [DBG] thread=38, write completed (id range = 1838837508 - 1838867150), milliseconds=5261

2024-05-30 13:27:23.136 -07:00 [DBG] thread=1, write completed (id range = 1838125556 - 1838155198), milliseconds=4007

2024-05-30 13:27:23.815 -07:00 [DBG] thread=29, write completed (id range = 1838481532 - 1838511174), milliseconds=5716

2024-05-30 13:27:24.484 -07:00 [DBG] thread=30, write completed (id range = 1839371472 - 1839401114), milliseconds=5515

2024-05-30 13:27:24.896 -07:00 [DBG] thread=22, write completed (id range = 1837591592 - 1837621234), milliseconds=5235

2024-05-30 13:27:25.481 -07:00 [DBG] thread=32, write completed (id range = 1837947568 - 1837977210), milliseconds=4661

2024-05-30 13:27:25.982 -07:00 [DBG] thread=33, write completed (id range = 1835455736 - 1835485378), milliseconds=6355

2024-05-30 13:27:26.859 -07:00 [DBG] thread=19, write completed (id range = 1840439400 - 1840469042), milliseconds=3383

2024-05-30 13:27:27.740 -07:00 [DBG] thread=16, write completed (id range = 1840261412 - 1840291054), milliseconds=2299

2024-05-30 13:27:28.376 -07:00 [DBG] thread=10, write completed (id range = 1840083424 - 1840113066), milliseconds=3030

2024-05-30 13:27:30.447 -07:00 [DBG] thread=26, write completed (id range = 1838659520 - 1838689162), milliseconds=4026

I’ve also attached the configuration settings active during this run (CSV).

The expected result is a successful COPY operation in a time roughly consistent with the other recent operations which are writing similar row counts, ~40000. I’m hoping for a suggestion or two about how to localize the error or, if you recognize the symptom, what to correct. I’m sorry it’s not a simpler crash or query failure.

Thanks and regards,

Paul T.

Attachment

pgsql-bugs by date: