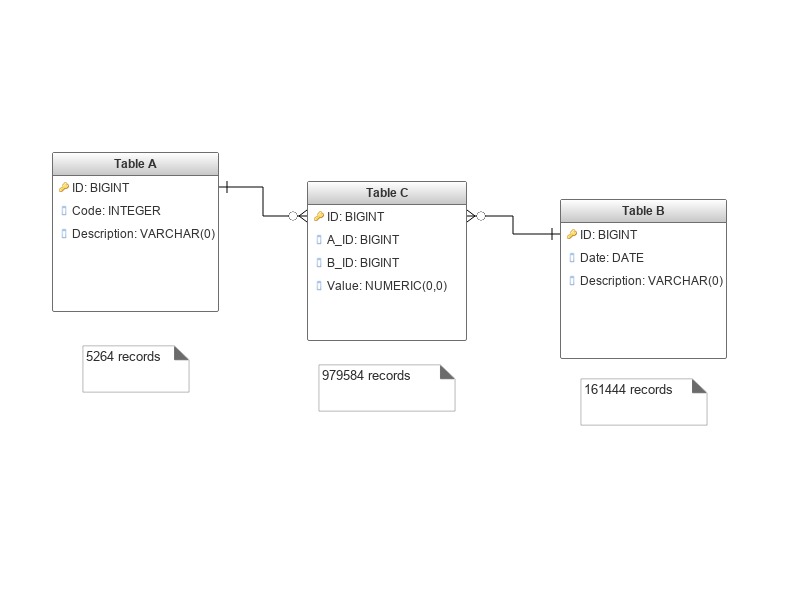

I have a report that takes about 20 minutes to generate. It is generated from 3 tables: according to image.

The report input parameter is a date range. So to generate it I select all records in Table A and run them

in loop-for. For each record in Table A I make a query Table B join with Table C where I filter the records through the date field and make the sum

of the value field.

Given this scenario, I would like your help in finding a solution that can reduce the generation time of this report. System developed in PHP / Laravel.

PostgreSQL

max_connections = 50

shared_buffers = 4GB

effective_cache_size = 12GB

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.7

wal_buffers = 16MB

default_statistics_target = 100

random_page_cost = 4

effective_io_concurrency = 2

work_mem = 83886kB

min_wal_size = 1GB

max_wal_size = 2GB

Linux Server CentOS 7, Single Xeon 4-Core E3-1230 v5 3.4Ghz w / HT, 16GB RAM.

I've already created indexes in the fields that are involved in the queries.

Database schema

Report result

--

Atenciosamente,

Evandro Abreu.

Engenheiro de Sistemas at STS Informática Ltda.

Google Talk: evandro.abreu

Skype: evandro_abreu

Phone: +55 86 98835-0468