PostgreSQL 16: part 4 or CommitFest 2023-01

We continue to follow the news of the PostgreSQL 16 release, and today, the results of the fourth CommitFest are on the table. Let’s have a look.

If you missed the previous CommitFests, check out our reviews for 2022-07, 2022-09 and 2022-11.

Here are the patches I want to talk about this time:

- New function: random_normal

- Input formats for integer literals

- Goodbye, postmaster

- Parallel execution for string_agg and array_agg

- New parameter: enable_presorted_aggregate

- Planner support function for working with window functions

- Optimized grouping of repeating columns in GROUP BY and DISTINCT

- VACUUM parameters: SKIP_DATABASE_STATS and ONLY_DATABASE_STATS

- pg_dump: lock tables in batches

- PL/pgSQL: cursor variable initialization

- Roles with the CREATEROLE attribute

- Setting parameter values at the database and user level

- New parameter: reserved_connections

- postgres_fdw: analyzing foreign tables with TABLESAMPLE

- postgres_fdw: batch insert records during partition key updates

- pg_ident.conf: new ways to identify users in PostgreSQL

- Query jumbling for DDL and utility statements

- New function: bt_multi_page_stats

- New function: pg_split_walfile_name

- pg_walinspect, pg_waldump: collecting page images from WAL

New function: random_normal

commit: 38d81760

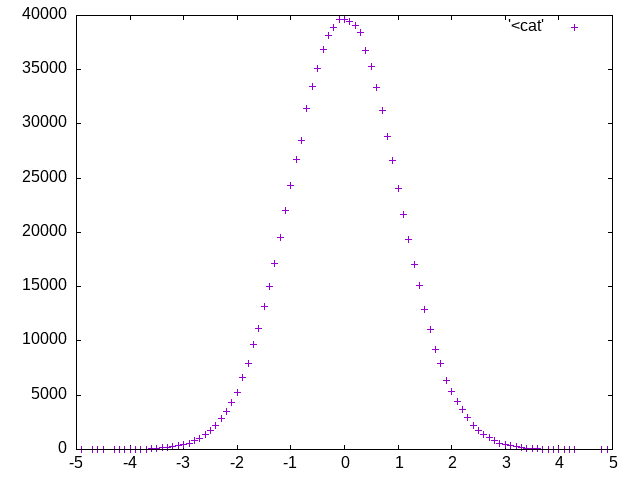

The function random returns a number between 0 and 1, and any number within the scope has an equal chance to be selected, i.e., the numbers are distributed uniformly. The patch introduces a new function random_normal as a way to get random numbers from a normal distribution. The function has two parameters: the mean and the standard deviation.

Let’s generate 1,000,000 numbers and see if the 68-95-99.7 rule applies. The rule states that 68% of normally distributed random numbers are within no more than one standard deviation (the second parameter) from the mean (the first parameter), 95% are within two standard deviations, and 99.7% are within three.

WITH samples AS (

SELECT random_normal(0.0, 1.0) AS x FROM generate_series(1,1000000)

)

SELECT count(x) FILTER (WHERE x BETWEEN -1 AND 1)/1000000.0 AS stddev_1,

count(x) FILTER (WHERE x BETWEEN -2 AND 2)/1000000.0 AS stddev_2,

count(x) FILTER (WHERE x BETWEEN -3 AND 3)/1000000.0 AS stddev_3

FROM samples;

stddev_1 | stddev_2 | stddev_3

------------------------+------------------------+------------------------

0.68391100000000000000 | 0.95473800000000000000 | 0.99724900000000000000

We can send the output of psql to gnuplot to display the resulting distribution visually:

WITH samples AS (

SELECT random_normal(0.0, 1.0) AS x FROM generate_series(1,1000000)

)

SELECT round(x::numeric,1) point, count(*) AS density

FROM samples

GROUP BY point

ORDER BY point

\g (format=unaligned tuples_only=on fieldsep='\t') | gnuplot -e "set term png; set output 'data.png'; plot '<cat'"

I separated the values into groups in increments of 0.1 to get the distribution density. As a result, in the data.png file we get the famous bell curve:

Note that the tablefunc extension already has a function normal_rand for obtaining random numbers from a normal distribution. Now, however, the functionality is available out-of-the-box, without the need for any extensions.

See also:

Waiting for PostgreSQL 16 – Invent random_normal() to provide normally-distributed random numbers (Hubert 'depesz' Lubaczewski)

Input formats for integer literals

commit: 6fcda9ab, 6dfacbf7, faff8f8e

The latest changes to the SQL standard allow declaring integer literals not only in decimal, but also in hexadecimal, octal and binary formats.

SELECT 0x2A hex_int, 0o52 oct_int, 0b101010 bin_int;

hex_int | oct_int | bin_int

---------+---------+---------

42 | 42 | 42

If the resulting number doesn’t fit into bigint, it is transformed into numeric type (the second commit). In addition, for large integer values, an underscore can be used to visually separate groups of digits (the third commit):

SELECT count(*) FROM generate_series(1, 1_000_000);

count

---------

1000000

See also:

Waiting for PostgreSQL 16 – Non-decimal integer literals (Hubert 'depesz' Lubaczewski)

Waiting for PostgreSQL 16 – Allow underscores in integer and numeric constants (Hubert 'depesz' Lubaczewski)

hex, oct, bin integers in PostgreSQL 16 (Pavlo Golub)

Goodbye, postmaster

On startup, the server starts a process traditionally referred to as postmaster...

Many a course on PostgreSQL architecture begin with a variation of this sentence. But in today’s Postgres, postmaster is just a 15-years-old moniker of the postgres process. You can still find the name in the documentation, and the bin catalog contains a postmaster file with a symlink to postgres.

The PostgreSQL 16 release finally says goodbye to postmaster, wiping every mention of it.

Parallel execution for string_agg and array_agg

commit: 16fd03e9

Prior to PostgreSQL 16, all queries containing string_agg and array_agg always executed sequentially:

15=# EXPLAIN (costs off)

SELECT fare_conditions, array_agg(flight_id), string_agg(ticket_no, ',')

FROM ticket_flights

GROUP BY fare_conditions;

QUERY PLAN

----------------------------------

HashAggregate

Group Key: fare_conditions

-> Seq Scan on ticket_flights

Now, parallel execution is also possible:

16=# EXPLAIN (costs off)

SELECT fare_conditions, array_agg(flight_id), string_agg(ticket_no, ',')

FROM ticket_flights

GROUP BY fare_conditions;

QUERY PLAN

-------------------------------------------------------------

Finalize GroupAggregate

Group Key: fare_conditions

-> Gather Merge

Workers Planned: 2

-> Sort

Sort Key: fare_conditions

-> Partial HashAggregate

Group Key: fare_conditions

-> Parallel Seq Scan on ticket_flights

New parameter: enable_presorted_aggregate

The first commit reduces the incremental sort cost estimate. This makes the planner choose it more often, specifically for optimizing ORDER BY and DISTINCT aggregates.

However, in some cases of non-uniform distribution of data in groups, ORDER BY and DISTINCT aggregates will perform slower with incremental sort. Disabling the new parameter enable_presorted_aggregate (the second commit) will use the old version planning behavior.

Planner support functions for working with window functions

commit: ed1a88dd

Unless a window function frame is defined explicitly, RANGE UNBOUNDED PRECEDING is used by default.

15=# EXPLAIN (costs off, analyze, timing off)

SELECT row_number() OVER (ORDER BY ticket_no)

FROM tickets;

QUERY PLAN

-----------------------------------------------------------------------------------

WindowAgg (actual rows=2949857 loops=1)

-> Index Only Scan using tickets_pkey on tickets (actual rows=2949857 loops=1)

Heap Fetches: 0

Planning Time: 0.098 ms

Execution Time: 682.466 ms

For this query, another frame can be specified: ROWS UNBOUNDED PRECEDING. This will not change the result, but will boost performance:

15=# EXPLAIN (costs off, analyze, timing off)

SELECT row_number() OVER (ORDER BY ticket_no ROWS UNBOUNDED PRECEDING)

FROM tickets;

QUERY PLAN

-----------------------------------------------------------------------------------

WindowAgg (actual rows=2949857 loops=1)

-> Index Only Scan using tickets_pkey on tickets (actual rows=2949857 loops=1)

Heap Fetches: 0

Planning Time: 0.100 ms

Execution Time: 483.560 ms

It’s faster because with RANGE you have to check all peer rows while with ROWS you don’t. Therefore, the default behavior can end up being slower.

In the 16th release, planner support functions were added for window functions row_number, rank, dense_rank, percent_rank, cume_dist and ntile. With their help, the planner will always use the most optimal way to define a window frame.

Optimized grouping of repeating columns in GROUP BY and DISTINCT

commit: 8d83a5d0

If multiple columns containing the same values are put into a group, it is sufficient to group by any one of them.

The optimization is illustrated well by the following example:

SET max_parallel_workers_per_gather = 0;

SET jit = off;

15=# EXPLAIN (costs off, analyze)

SELECT b.seat_no, s.seat_no

FROM boarding_passes b

JOIN seats s ON b.seat_no = s.seat_no

GROUP BY b.seat_no, s.seat_no;

QUERY PLAN

---------------------------------------------------------------------------------------------------------

Group (actual time=3537.559..10758.307 rows=461 loops=1)

Group Key: b.seat_no, s.seat_no

...

The rest of the plan is omitted as irrelevant.

Here are the first two lines of the plan of the same query after the patch:

QUERY PLAN

-----------------------------------------------------------------------------------------------------------

HashAggregate (actual time=8201.412..8201.440 rows=461 loops=1)

Group Key: b.seat_no

...

Note the Group Key line. In PostgreSQL 16, the planner knows that grouping by one column is enough and selects one plan based on that. In the older version, the grouping is performed on both columns, with a worse plan overall (see the execution time).

The same optimization is done for DISTINCT.

The idea of grouping by two columns containing identical values may seem strange at first, but I assume that automatic query generation systems (like various ORMs) may do that and may benefit from this bit of optimization.

VACUUM parameters: SKIP_DATABASE_STATS and ONLY_DATABASE_STATS

commit: a46a7011

After each vacuuming, the vacuuming process updates database stats: the frozen transactions counter (pg_database.datfrozenxid) and the multitransaction counter (pg_database.datminmxid). These operations require a full scan of pg_class in search of minimal relfrozenxid and relminmxid values. If either value is found that is greater than the current database value, the stats are updated. The more there are tables in the database, the longer this step takes.

When multiple VACUUM commands for multiple tables are executed in sequence, each one will update the counters, while it would be sufficient to only update them after the last vacuum in the sequence.

The vacuumdb utility (in PostgreSQL 12 through 15) runs VACUUM for each table in the database. With a high enough table count (thousands, tens of thousands) it becomes extremely inefficient due to the issues mentioned above. Even parallelization (-j key) doesn’t save the day, because only one process can update the database counters at a given time.

PostgreSQL 16 supplements the VACUUM command with two logical parameters:

- SKIP_DATABASE_STATS ― vacuum as usual but do not update the counters,

- ONLY_DATABASE_STATS ― do not vacuum at all, just update the counters.

Now vacuumdb vacuums tables first without updating the stats, and when it’s done, it runs vacuum once to update the stats:

VACUUM(ONLY_DATABASE_STATS)

pg_dump: lock tables in batches

commit: 5f53b42c

When called, pg_dump first creates a list of tables to dump and locks them one by one with LOCK TABLE in ACCESS SHARE mode. With thousands or tens of thousands of tables, and with pg_dump running on a remote machine, the total network latency will add up.

With the new patch, pg_dump locks all tables at once by calling LOCK TABLE with a list of tables. (It will split into multiple commands to fit the limit of 100,000 characters per command, if necessary.)

PL/pgSQL: cursor variable initialization

commit: d747dc85

Bound cursors are initialized by string-type variables that match the cursor names. Unbound cursors (refcursors), on the other hand, remain undefined until opened. When a refcursor is opened, it gets a unique name generated for it.

15=# DO $$ DECLARE

c CURSOR FOR SELECT 1; -- bound cursor variable

rc refcursor; -- unbound cursor variable

BEGIN

RAISE NOTICE 'c: %, rc: %', c, rc;

OPEN c;

OPEN rc FOR SELECT 2;

RAISE NOTICE 'c: %, rc: %', c, rc;

END;$$;

NOTICE: c: c, rc:

NOTICE: c: c, rc:

DO

If multiple nested code blocks or function calls use bound cursors with the same name, the initialization of bound cursors may lead to a name collision.

Consider this example. Here, two procedures use the same cursor name.

CREATE PROCEDURE proc_1() AS

$$DECLARE

c CURSOR FOR SELECT 1;

BEGIN

FOR r IN c LOOP

RAISE NOTICE 'r: %, c: %', r, c;

END LOOP;

END;$$ LANGUAGE plpgsql;

CREATE PROCEDURE proc_2() AS

$$DECLARE

c CURSOR FOR SELECT 2;

BEGIN

FOR r IN c LOOP

RAISE NOTICE 'r: %, c: %', r, c;

CALL proc_1();

END LOOP;

END;$$ LANGUAGE plpgsql;

Despite the fact that the cursor in both procedures is declared locally, the second procedure call will return an error:

15=# CALL proc_2();

NOTICE: r: (2), c: c

ERROR: cursor "c" already in use

CONTEXT: PL/pgSQL function proc_1() line 4 at FOR over cursor

SQL statement "CALL proc_1()"

PL/pgSQL function proc_2() line 6 at CALL

The new patch makes bound cursors behave like refcursors in this sense: they will not initialize until opened and they will have unique names generated for them (unless explicitly named in advance).

16=# CALL proc_2();

NOTICE: r: (2), c:

NOTICE: r: (1), c:

CALL

Roles with the CREATEROLE attribute

commit: cf5eb37c, f1358ca5, e5b8a4c0

One common but not very secure way of managing database roles without superuser privileges is using a regular role with the CREATEROLE attribute. This role can perform all necessary tasks but cannot grant the SUPERUSER attribute unless it has it itself.

Or, rather, it shouldn’t be able to, but it can – with a few extra steps:

15-postgres=# CREATE ROLE admin LOGIN CREATEROLE;

CREATE ROLE

15-postgres=# \c - admin

You are now connected to database "postgres" as user "admin".

15-admin=> CREATE ROLE bob LOGIN;

CREATE ROLE

15-admin=> GRANT pg_execute_server_program TO bob;

GRANT ROLE

Done. Now bob can run OS programs as the server owner.

Let’s see how the new release handles that.

16-postgres=# CREATE ROLE admin LOGIN CREATEROLE;

CREATE ROLE

16-postgres=# \c - admin

You are now connected to database "postgres" as user "admin".

16-admin=> CREATE ROLE bob LOGIN;

CREATE ROLE

16-admin=> \du

List of roles

Role name | Attributes | Member of

-----------+------------------------------------------------------------+-----------

admin | Create role | {bob}

bob | | {}

postgres | Superuser, Create role, Create DB, Replication, Bypass RLS | {}

Note that the admin role is automatically included into the bob role immediately after being created, and that it can manage the role (WITH ADMIN OPTION). This is done so that only the role that created the bob role can manage it. Suppose we have a second administrator with a dedicated role and with the CREATEROLE attribute.

16-postgres=# CREATE ROLE admin2 LOGIN CREATEROLE;

CREATE ROLE

16-postgres=# \c - admin2

You are now connected to database "postgres" as user "admin2".

16-admin2=> ALTER ROLE bob CONNECTION LIMIT 1;

ERROR: permission denied

The CREATEROLE attribute alone no longer grants the ability to manage other roles.

Let’s go back to the first admin and include bob into pg_execute_server_program.

16-admin=> GRANT pg_execute_server_program TO bob;

ERROR: must have admin option on role "pg_execute_server_program"

To execute the GRANT command, the admin role itself must be included in pg_execute_server_program and also have the admin option.

16-postgres=# GRANT pg_execute_server_program TO admin WITH ADMIN OPTION;

GRANT ROLE

16-admin=> GRANT pg_execute_server_program TO bob;

GRANT ROLE

The governing principle here is that you should be able to grant only what you are granted already. These are the changes presented in the first commit.

Now, let’s see if admin can switch to bob.

16-admin=> SET ROLE bob;

ERROR: permission denied to set role "bob"

No, they cannot. SET ROLE requires the SET option to be enabled when the role is initially granted. Let’s make sure it’s not there:

16-admin=> SELECT roleid::regrole, member::regrole, grantor::regrole,

admin_option, inherit_option, set_option

FROM pg_auth_members

WHERE roleid = 'bob'::regrole\gx

-[ RECORD 1 ]--+---------

roleid | bob

member | admin

grantor | postgres

admin_option | t

inherit_option | f

set_option | f

As you can see, only the ADMIN option is enabled, which allows you to manage the role. The disabled INHERIT option means that the admin role will not inherit the privileges of the bob role. And the disabled SET option will not allow the admin role to switch to bob.

With that said, admin can grant bob to itself one more time with the necessary options enabled:

16-admin=> GRANT bob TO admin WITH INHERIT TRUE, SET TRUE;

GRANT ROLE

16-admin=> SET ROLE bob;

SET

If you want your admin to regularly create new roles with the SET and/or INHERIT options, then you can automate the process using the new createrole_self_grant parameter (the second commit).

16-admin=> SET createrole_self_grant = 'INHERIT, SET';

SET

16-admin=> CREATE ROLE alice LOGIN;

CREATE ROLE

16-admin=> SELECT roleid::regrole, member::regrole, grantor::regrole,

admin_option, inherit_option, set_option

FROM pg_auth_members

WHERE roleid = 'alice'::regrole AND grantor = 'admin'::regrole\gx

-[ RECORD 1 ]--+------

roleid | alice

member | admin

grantor | admin

admin_option | f

inherit_option | t

set_option | t

alice has all the necessary membership options enabled.

The third commit allows the REPLICATION, BYPASSRLS and CREATEDB attributes to be set to other roles, provided that the admin role itself has them.

16-postgres=# ALTER ROLE admin CREATEDB;

ALTER ROLE

16-postgres=# \c - admin

You are now connected to database "postgres" as user "admin".

16-admin=> ALTER ROLE bob CREATEDB;

ALTER ROLE

16-admin=> ALTER ROLE bob REPLICATION;

ERROR: must have replication privilege to change replication attribute

See also:

Surviving Without A Superuser - Coming to v16 (Robert Haas)

Setting parameter values at the database and user level

commit: 096dd80f

In the demo database, you can switch the language using the custom parameter bookings.lang. Suppose that a user Bob creates a role called bob with no superuser privileges. He then connects to the database and decides that he wants to use the English version.

postgres=# \c demo bob

You are now connected to database "demo" as user "bob".

bob=> ALTER ROLE bob IN DATABASE demo SET bookings.lang = 'en';

ERROR: permission denied to set parameter "bookings.lang"

To set parameters with the ALTER ROLE|DATABASE .. SET command, bob has to be a superuser. (In PostgreSQL 15 and higher, you can grant specific parameters, including custom ones, with the GRANT .. ON PARAMETER command, but this doesn’t seem like the intended use here.)

In PostgreSQL 16, it became possible to explicitly specify that the parameter should be granted on behalf of a regular role without superuser rights:

bob=> ALTER ROLE bob IN DATABASE demo SET bookings.lang = 'en' USER SET;

ALTER ROLE

bob=> \c demo bob

You are now connected to database "demo" as user "bob".

bob=> SHOW bookings.lang;

bookings.lang

---------------

en

The pg_db_role_setting has a new flag to represent that. We can use the \drds command to have a look at it:

bob=> \drds

List of settings

Role | Database | Settings | User set

------+----------+------------------------------+----------

bob | demo | bookings.lang=en | t

| demo | search_path=bookings, public+| f +

| | bookings.lang=ru | f

New parameter: reserved_connections

commit: 6e2775e4

The parameter superuser_reserved_connections defines a number of connection slots (3 by default) out of total max_connections that are kept in reserve so that superusers could always connect to the server.

However, many maintenance, monitoring, and backup tasks are performed by regular, non-superuser roles. PostgreSQL 16 provides a way to secure connection slots for these tasks in the form of a new parameter reserved_connections (0 by default) and a new predefined role pg_use_reserved_connections. You can set a number of reserved_connections and grant pg_use_reserved_connections to maintenance roles, restart the server, and have a number of connections reserved for these roles.

See also:

Reserve connections for the pg_use_reserved_connections group in PostgreSQL 16 (Pavlo Golub)

postgres_fdw: analyzing foreign tables with TABLESAMPLE

commit: 8ad51b5f

Collecting statistics on large foreign tables isn’t done very efficiently. The ANALYZE command gets all the rows from a table and immediately discards most of them, because it only needs a small sample to produce statistics (300 × default_statistics_target).

In the new release, the TABLESAMPLE clause will be used by default to fetch the desired amount of rows. For older server versions that do not support TABLESAMPLE (9.5 and prior), the random function will be used to limit the number of rows instead.

Let’s test it. First, in the postgres database create a foreign table tickets (~3 million rows) for the tickets table from the demo database within the same cluster.

CREATE EXTENSION postgres_fdw;

CREATE SERVER srv

FOREIGN DATA WRAPPER postgres_fdw

OPTIONS (dbname 'demo');

CREATE USER MAPPING FOR postgres

SERVER srv

OPTIONS (user 'postgres');

IMPORT FOREIGN SCHEMA bookings

LIMIT TO (tickets)

FROM SERVER srv

INTO public;

Sampling for statistics collection is enabled by default:

\timing on

ANALYZE tickets;

ANALYZE

Time: 322,737 ms

By disabling sampling, we can see how much time it takes to collect statistics in previous PostgreSQL versions.

ALTER FOREIGN TABLE tickets OPTIONS (analyze_sampling 'off');

ANALYZE tickets;

ANALYZE

Time: 2068,078 ms (00:02,068)

And this is for databases in the same cluster. If the foreign table was located on a remote server, the collection time would've included network latency, and the overall time would've been even greater.

postgres_fdw: batch insert records during partition key updates

commit: 594f8d37

The postgres_fdw extension supports batch insertion of records since PostgreSQL 14. In the last CommitFest review, we mentioned that one of the restrictions on using batch mode for the COPY command is being removed. But there are others.

Batch mode is also prohibited if records are inserted with commands other than INSERT and COPY. What are other ways to insert records? One is using UPDATE on a partitioned table key. In this case, records are deleted from one partition and inserted into another. If the target partition is a foreign table, then batch mode is not used for it.

In the new release, this restriction is removed.

Note that so far we are talking only about inserting records. Batch mode for updating and deleting records has not yet been implemented.

pg_ident.conf: new ways to identify users in PostgreSQL

commit: efb6f4a4

In the pg_ident.conf file, the PostgreSQL username (PG-USERNAME field) is now processed according to the same rules as in pg_hba.conf. Allowed keywords are:

- the special “all” keyword (corresponds to any user),

- keywords starting with the “/” character (considered as regular expressions),

- keywords starting with the “+” character (initiate a role membership check).

Query jumbling for DDL and utility statements

commit: 3db72ebc

Query jumbling is performed when calculating the hash code that the pg_stat_statements extension uses to group identical queries. For DML commands, the hash code is calculated based on the parsed query, not the command text itself. This lets the system ignore things like character case and whitespaces and replace constants with parameters.

But besides DML, there are also DDL commands and utility statements, and for those the hash code was calculated based on the exact query text. For example, the following two commands are identical in every way except for the case of characters and the number of spaces between words, but in PostgreSQL 15, pg_stat_statements will consider them to be different statements:

DROP TABLE IF EXISTS t;

drop table if exists t;

Thanks to the new patch, this is no longer the case, and pg_stat_statements will read them as identical:

SELECT queryid, query, calls

FROM pg_stat_statements

WHERE query ILIKE 'drop table%';

queryid | query | calls

---------------------+------------------------+-------

8953406224830875875 | DROP TABLE IF EXISTS t | 2

There is room for improvement yet, though. Jumbling still cannot group together CALLs of procedures with different parameter values, nor can it group multiple uses of the SET command setting different values of the same parameter.

New function: bt_multi_page_stats

commit: 1fd3dd20

The pageinspect extension now features a new function bt_multi_page_stats, an easy way to collect statistics for multiple B-tree index pages at once. Stats for a single page were already obtainable with the bt_page_stats function. The new function accepts the number of pages as an argument and returns information about that number of pages. If the number is negative, it scans and returns stats for all the pages up until the end of the index.

SELECT blkno, type, live_items

FROM bt_multi_page_stats('pg_class_oid_index', 2, -1);

blkno | type | live_items

-------+------+------------

2 | l | 145

3 | r | 2

(2 rows)

New function: pg_split_walfile_name

Some WAL file read/write errors include the file name and an offset. The new function pg_split_walfile_name can turn that information into an LSN position.

To illustrate, let’s take the current wal file and an offset of 42 bytes.

SELECT pg_walfile_name(pg_current_wal_lsn()) AS walfile,

42 AS offset

\gset

pg_split_walfile_name returns the WAL segment number and the timeline ID.

SELECT :'walfile' AS walfile, * FROM pg_split_walfile_name(:'walfile');

walfile | segment_number | timeline_id

--------------------------+----------------+-------------

000000010000000100000040 | 320 | 1

The segment number and its size and the offset can be transformed into the LSN.

SELECT :'walfile' AS walfile,

:offset AS offset,

'0/0'::pg_lsn + w.segment_number*s.setting::int + :offset AS lsn

FROM pg_split_walfile_name(:'walfile') w, pg_settings s

WHERE s.name = 'wal_segment_size';

walfile | offset | lsn

--------------------------+--------+------------

000000010000000100000040 | 42 | 1/4000002A

pg_walinspect, pg_waldump: collecting page images from WAL

Both the WAL inspection tools can now extract images of pages from WAL.

Page images get into into WAL whenever a page is changed compared to its last checkpoint value.

Let’s note down the WAL positions before and after the UPDATE command:

CREATE TABLE t AS SELECT x FROM generate_series(1,100) x;

CHECKPOINT;

SELECT pg_current_wal_lsn() AS start_lsn;

start_lsn

------------

1/6DF4D250

UPDATE t SET x = x + 1;

SELECT pg_current_wal_lsn() AS end_lsn;

end_lsn

------------

1/6DF51A18

Let’s use the new function pg_get_wal_fpi_info from pg_walinspect (the first commit) to get all the page images written into WAL between these two LSNs.

CREATE EXTENSION pg_walinspect;

SELECT lsn, reltablespace, reldatabase, relfilenode, relblocknumber, forkname,

substr(fpi, 1, 8) AS fpi_trimmed

FROM pg_get_wal_fpi_info('1/6DF4D250', '1/6DF51A18');

lsn | reltablespace | reldatabase | relfilenode | relblocknumber | forkname | fpi_trimmed

------------+---------------+-------------+-------------+----------------+----------+--------------------

1/6DF4D250 | 1663 | 16384 | 1259 | 1 | main | \x01000000002ef46d

1/6DF4E9A8 | 1663 | 16384 | 1249 | 60 | main | \x01000000c0b6f46d

1/6DF4EF58 | 1663 | 16384 | 16678 | 0 | main | \x0100000008d0f46d

(3 rows)

The same can be done with pg_waldump. The new parameter save-fullpage (the second commit) takes a directory name and creates a separate file for each page there.

$ pg_waldump --start=1/6DF4D250 --end=1/6DF51A18 --quiet --save-fullpage=./waldump

$ ls -1 -s -h ./waldump

total 24K

8,0K 00000001-6DF4D250.1663.16384.1259.1_main

8,0K 00000001-6DF4E9A8.1663.16384.1249.60_main

8,0K 00000001-6DF4EF58.1663.16384.16678.0_main

That’s all for today. Now, let’s look forward to the results of the March CommitFest, the last one for the 16th release.